Computer architecture is the foundation of modern computing. It's the blueprint that brings together all the components of a computer to create a functional system.

The design of a computer's architecture is influenced by its intended use, with different architectures suited for various applications. For example, a supercomputer is designed for complex calculations and simulations, while a mobile device has a more power-efficient architecture.

A computer's architecture can be divided into three main layers: the hardware, the firmware, and the software. The hardware layer consists of the physical components, such as the central processing unit (CPU) and memory. The firmware layer includes the basic input/output system (BIOS) and other low-level software. The software layer, on the other hand, includes the operating system and applications.

Understanding computer architecture is crucial for optimizing system performance, improving power efficiency, and reducing costs. By grasping the intricacies of computer design, developers can create more efficient and effective systems.


Computer Architecture Basics

Computer architecture is a specification that describes how computer software and hardware connect and interact to create a working computer system. It determines the structure and function of computers and the technologies they are compatible with.

The basic components of computer architecture include the CPU and memory, which work together to process and store data. You're probably familiar with these components, but do you know how they work together?

Computer architecture is crucial for both computer scientists and enthusiasts, as it forms the basis for designing innovative and efficient computing solutions. Understanding computer architecture helps programmers write software that can take full advantage of a computer's capabilities.

Here are the key components that make up the structure of a computer:

- CPU (Central Processing Unit)

- Data processors

- Multiprocessors

- Memory controllers

- Direct memory access

The instruction set architecture (ISA) is the interface between a computer's hardware and its software. It specifies the CPU's functions and capabilities: the instructions programmers and compilers can use, the data formats the processor supports, and the types of processor registers it provides. Because every program ultimately runs as a sequence of these instructions, the ISA is essential for designing efficient computing solutions.

CPU Components

The CPU is the heart of any computer architecture, and it's made up of several important components. The Arithmetic Logic Unit (ALU) is the core of CPU operation, performing arithmetic operations like addition and subtraction and logical operations like AND, OR, and NOT on binary values.

The ALU takes values in registers and performs operations on them, making it a crucial part of the CPU. Modern processors often have multiple ALUs to handle different operations simultaneously.
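To make this concrete, here is a minimal Python sketch of an ALU. It assumes a hypothetical 8-bit word size, and the `alu` function and its operation names are illustrative rather than taken from any real instruction set. Masking every result to 8 bits mimics how a hardware register wraps around on overflow.

```python
WIDTH = 8                  # hypothetical word size in bits
MASK = (1 << WIDTH) - 1    # 0xFF: keeps every result within 8 bits


def alu(op: str, a: int, b: int = 0) -> int:
    """Apply one ALU operation to two register values and return the result."""
    if op == "ADD":
        result = a + b
    elif op == "SUB":
        result = a - b     # negative results wrap around (two's complement)
    elif op == "AND":
        result = a & b
    elif op == "OR":
        result = a | b
    elif op == "NOT":
        result = ~a        # unary operation: b is ignored
    else:
        raise ValueError(f"unsupported operation: {op}")
    return result & MASK


if __name__ == "__main__":
    print(alu("ADD", 200, 100))              # 44 (300 wraps around past 255)
    print(bin(alu("AND", 0b1100, 0b1010)))   # 0b1000
    print(bin(alu("NOT", 0b00001111)))       # 0b11110000
```

A real ALU selects its operation through control signals from the control unit rather than a string, but the shape is the same: two register values in, one result out.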

The Control Unit (CU) coordinates all the components of the basic computer architecture. It decodes each instruction and sends control signals that move data between the registers, the ALU, and memory, dictating how each component behaves. It's like the conductor in an orchestra, giving instructions to the different parts to work together in harmony.

The CPU has two main types of registers: those for integer calculations and those for floating-point calculations. Floating-point registers hold numbers with fractional parts, encoded in a binary floating-point format, which is why they are kept separate from integer registers.


The CPU's components work together to execute instructions read from memory, making it the core of any computer architecture. Without a functioning CPU, the other components are still there, but there's no computing.

Memory and Storage

Computer architecture is all about how the different components of a computer system work together. Memory and storage are crucial components of that system, because they hold the instructions and data the CPU works on.

A computer's memory is used to store data, whether that's instructions for the CPU or larger amounts of permanent data. Traditionally, memory is divided into primary and secondary storage.

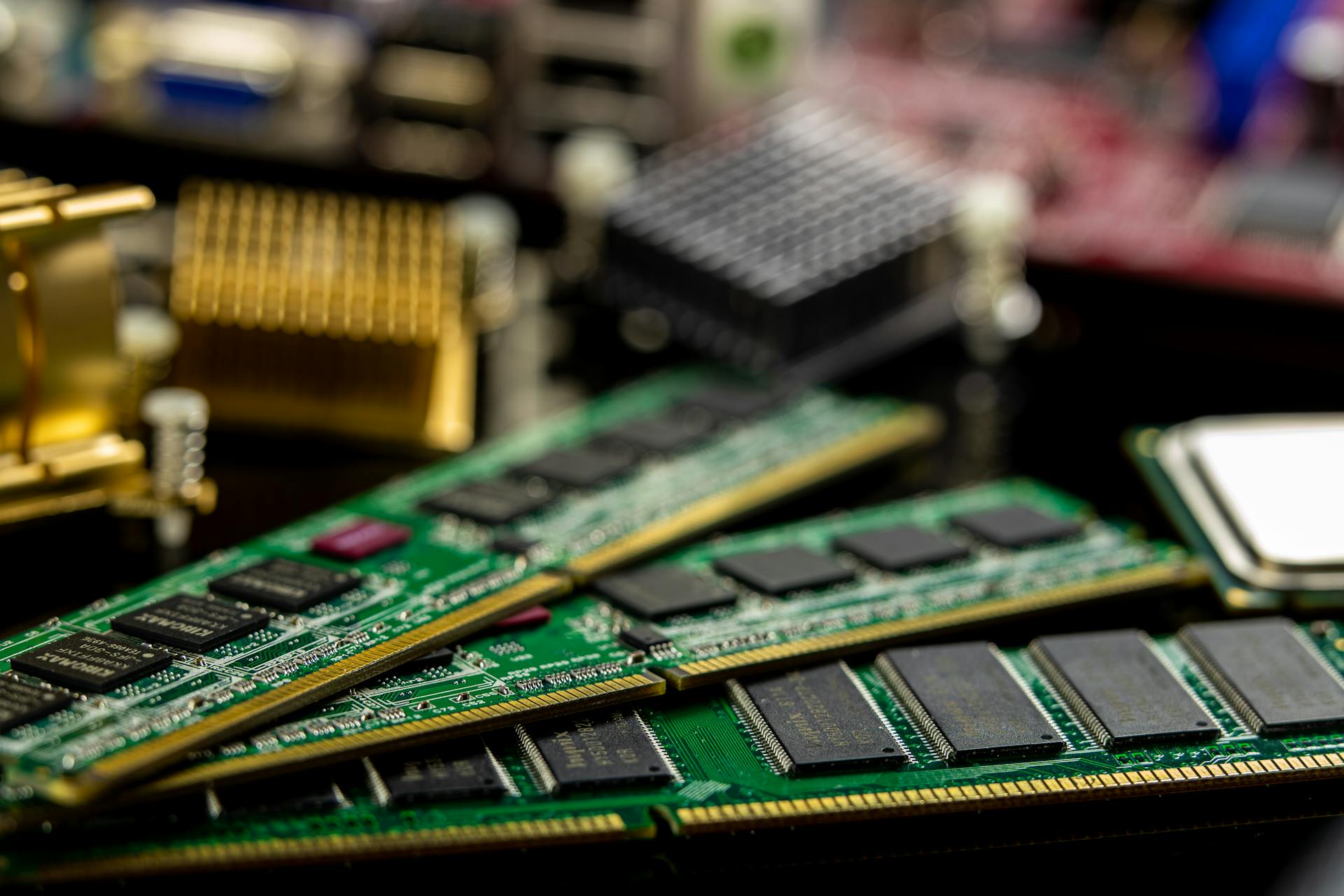

Primary memory stores only the programs and data currently in use. Its most common type is RAM, which works like short-term memory: it is volatile, so it only holds data while the machine is powered on.

ROM, on the other hand, is nonvolatile and stores the data used to operate the system, such as firmware, even when you turn off the computer.

A computer's memory hierarchy is essential for minimizing the time it takes to access the memory units. The most common memory hierarchy consists of four levels: processor registers, cache memory, primary memory, and secondary memory.

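Ordered from fastest and smallest to slowest and largest, the four levels are:

- Processor registers: inside the CPU, holding the values currently being operated on.

- Cache memory: small, fast memory close to the CPU that keeps copies of recently used data.

- Primary memory (RAM): holds the programs and data currently in use.

- Secondary memory: large, nonvolatile storage such as hard drives and SSDs.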

Cache memory is a crucial component of a computer system, and its performance directly affects the overall performance of the system. When the cache is full, a replacement policy decides which block to evict to make room for new data. Common policies include FIFO (first in, first out), LRU (least recently used), and LFU (least frequently used).
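To make the idea of a replacement policy concrete, here is a minimal sketch of LRU replacement in Python. It models a fully associative cache with a hypothetical capacity counted in blocks and only tracks which block addresses are resident; real caches are usually set-associative and implemented in hardware, so treat this as an illustration of the policy only. The `LRUCache` class is an invented name.

```python
from collections import OrderedDict


class LRUCache:
    """A cache of fixed capacity that evicts the least recently used block."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.blocks = OrderedDict()  # block address -> cached data, least recent first
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> bool:
        """Look up a block; return True on a hit, False on a miss."""
        if address in self.blocks:
            self.hits += 1
            self.blocks.move_to_end(address)   # now the most recently used block
            return True
        self.misses += 1
        if len(self.blocks) >= self.capacity:
            self.blocks.popitem(last=False)    # evict the least recently used block
        self.blocks[address] = f"data@{address}"  # stand-in for a fetch from memory
        return False


cache = LRUCache(capacity=2)
for address in [1, 2, 1, 3, 2]:
    cache.access(address)
print(cache.hits, cache.misses)   # 1 4
print(list(cache.blocks))         # [3, 2]
```

With room for two blocks, reusing block 1 protects it, so block 2 is evicted when block 3 arrives and then misses again. FIFO would evict by arrival order and LFU by access count instead, so each policy suits different access patterns.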

Instruction Set and Execution

The Instruction Set Architecture (ISA) defines the instructions a processor can read and act upon, which determines which software can run on it and how efficiently it can perform tasks.

There are three types of ISA: stack-based, accumulator-based, and register-based. The register-based ISA is most commonly used in modern machines.

The register-based ISA includes popular examples like x86 and MC68000 for CISC architecture, and SPARC, MIPS, and ARM for RISC architecture.
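The difference between stack-based and register-based ISAs is easiest to see by writing the same expression, `x = (a + b) * c`, for both. The sketch below uses two tiny hypothetical instruction sets (the mnemonics `PUSH`, `POP`, `LOAD`, `STORE`, and so on are invented for illustration, not taken from x86, ARM, or any other real ISA) with an interpreter for each. An accumulator-based ISA would sit in between, using one implicit register as an operand of every arithmetic instruction.

```python
# x = (a + b) * c written for two hypothetical ISAs.
STACK_PROGRAM = [
    ("PUSH", "a"),   # push memory[a] onto the stack
    ("PUSH", "b"),
    ("ADD",),        # pop two values, push their sum
    ("PUSH", "c"),
    ("MUL",),        # pop two values, push their product
    ("POP", "x"),    # pop the result into memory[x]
]

REGISTER_PROGRAM = [
    ("LOAD", "r1", "a"),         # r1 <- memory[a]
    ("LOAD", "r2", "b"),         # r2 <- memory[b]
    ("ADD", "r3", "r1", "r2"),   # r3 <- r1 + r2
    ("LOAD", "r4", "c"),         # r4 <- memory[c]
    ("MUL", "r5", "r3", "r4"),   # r5 <- r3 * r4
    ("STORE", "r5", "x"),        # memory[x] <- r5
]


def run_stack_machine(program, memory):
    """Operands are implicit: arithmetic always uses the top of the stack."""
    stack = []
    for op, *operands in program:
        if op == "PUSH":
            stack.append(memory[operands[0]])
        elif op in ("ADD", "MUL"):
            right, left = stack.pop(), stack.pop()
            stack.append(left + right if op == "ADD" else left * right)
        elif op == "POP":
            memory[operands[0]] = stack.pop()
        else:
            raise ValueError(f"unknown instruction: {op}")
    return memory


def run_register_machine(program, memory):
    """Operands are explicit: each instruction names the registers it uses."""
    regs = {}
    for op, *operands in program:
        if op == "LOAD":
            reg, addr = operands
            regs[reg] = memory[addr]
        elif op in ("ADD", "MUL"):
            dst, src1, src2 = operands
            a, b = regs[src1], regs[src2]
            regs[dst] = a + b if op == "ADD" else a * b
        elif op == "STORE":
            reg, addr = operands
            memory[addr] = regs[reg]
        else:
            raise ValueError(f"unknown instruction: {op}")
    return memory


memory = {"a": 2, "b": 3, "c": 4}
print(run_stack_machine(STACK_PROGRAM, dict(memory))["x"])        # 20
print(run_register_machine(REGISTER_PROGRAM, dict(memory))["x"])  # 20
```

The stack version's arithmetic instructions carry no operands at all, which is why stack ISAs are called zero-address machines. The register version names every operand, which lets intermediate results stay in fast registers where later instructions can reuse them.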

Executing a single instruction consists of a particular cycle of events: fetching, decoding, executing, and storing. This process is essential for tasks like adding two numbers together, which requires fetching the instruction, decoding what it means, executing the addition, and storing the result.

Here's a breakdown of the execution process:

- Fetch: get the instruction from memory into the processor.

- Decode: internally decode what it has to do.

- Execute: take the values from the registers, actually perform the operation.

- Store: store the result back into another register.

The Von Neumann architecture, named after John von Neumann, features a single memory space for both data and instructions, which are fetched and executed sequentially. This creates a bottleneck where the CPU can't fetch instructions and data simultaneously, known as the Von Neumann bottleneck.
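The fetch, decode, execute, store cycle can be sketched as a toy machine in Python. The instruction format and the `run` function are hypothetical; the point is that, as in the Von Neumann architecture, a single `memory` list holds both the program and its data, so instruction fetches and the `LOAD`/`STORE` data accesses all go to the same memory.

```python
# One memory array holds the program (tuples) and its data (integers).
memory = [
    ("LOAD", "r0", 6),          # 0: r0 <- memory[6]
    ("LOAD", "r1", 7),          # 1: r1 <- memory[7]
    ("ADD", "r2", "r0", "r1"),  # 2: r2 <- r0 + r1
    ("STORE", "r2", 8),         # 3: memory[8] <- r2
    ("HALT",),                  # 4: stop
    None,                       # 5: unused
    40,                         # 6: data
    2,                          # 7: data
    0,                          # 8: the result is written here
]


def run(memory):
    regs = {"r0": 0, "r1": 0, "r2": 0}
    pc = 0  # program counter: address of the next instruction
    while True:
        instruction = memory[pc]       # fetch: read from the shared memory
        pc += 1
        op, *operands = instruction    # decode: split the opcode from its operands
        if op == "HALT":
            return regs
        if op == "LOAD":               # execute: a data read from the same memory
            reg, addr = operands
            regs[reg] = memory[addr]
        elif op == "ADD":              # execute, then store the result in a register
            dst, src1, src2 = operands
            regs[dst] = regs[src1] + regs[src2]
        elif op == "STORE":            # execute: a data write to the same memory
            reg, addr = operands
            memory[addr] = regs[reg]
        else:
            raise ValueError(f"unknown instruction: {op}")


regs = run(memory)
print(memory[8])  # 42
```

Because code and data share one memory, nothing stops a `STORE` to addresses 0 to 4 from overwriting the program itself, something a Harvard-style design avoids by keeping instructions and data in separate memories.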

Fetch, Execute, Store

The Fetch, Execute, Store cycle (often called the fetch-decode-execute cycle) is a crucial part of how our computers execute instructions. It's a four-step process of fetching, decoding, executing, and storing, always in that order.

First, the CPU fetches the instruction from memory into the processor. This is where the instruction is retrieved and brought into the CPU for execution.

Decoding is the next step, where the CPU internally decodes what the instruction is telling it to do. This is where the instruction is broken down into its individual parts.

The Execute step is where the magic happens, where the CPU takes the values from the registers and performs the action specified by the instruction. This is where the actual calculation or operation is carried out.

Finally, the result is stored back into another register, and the instruction is considered complete. This is the Store step, where the outcome of the instruction is saved for future use.


The Fetch, Execute, Store cycle is a fundamental concept in computer architecture, and understanding how it works can help you appreciate the complexity and beauty of modern computing.

Reordering

Reordering is a key concept in CPU execution, allowing the processor to reorder instructions within a pipeline while still maintaining program order. This means that as long as the instructions pop out the end of the pipeline in the same order they were given, the processor is free to reorder them.

The CPU can reorder instructions that have no dependency on each other, like instructions 2 and 3 in Figure 3-3, which operate on completely separate registers. This can improve pipeline efficiency by allowing the processor to do useful work while waiting for previous instructions to complete.
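A processor decides whether two instructions are independent by comparing the registers they read and write. The minimal sketch below assumes a hypothetical `Instr` record listing each instruction's destination and source registers, and applies the three classic hazard checks: read after write, write after read, and write after write. It ignores memory operands, which real hardware must also track.

```python
from typing import NamedTuple, Optional, Tuple


class Instr(NamedTuple):
    text: str
    dest: Optional[str]        # register written (None if nothing is written)
    sources: Tuple[str, ...]   # registers read


def can_reorder(first: Instr, second: Instr) -> bool:
    """True if swapping the two instructions cannot change any register's final value."""
    raw = first.dest is not None and first.dest in second.sources   # read after write
    war = second.dest is not None and second.dest in first.sources  # write after read
    waw = first.dest is not None and first.dest == second.dest      # write after write
    return not (raw or war or waw)


i1 = Instr("r1 = r2 + r3", "r1", ("r2", "r3"))
i2 = Instr("r4 = r1 * 2", "r4", ("r1",))        # reads r1, which i1 writes
i3 = Instr("r5 = r6 - r7", "r5", ("r6", "r7"))  # uses completely separate registers

print(can_reorder(i1, i2))  # False
print(can_reorder(i2, i3))  # True
```

Here `i2` must wait for `i1` because it reads `r1`, but `i3` shares no registers with either of them, so the processor is free to run it early while `i2` waits.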

However, some instructions require specific guarantees about how operations are ordered, known as memory semantics. Acquire semantics guarantee that no memory operation after the instruction can be reordered to happen before it, while release semantics guarantee that all memory operations before the instruction have completed, and are visible, before it takes effect.

Memory barriers or memory fences are even stricter, requiring that operations have been committed to memory before continuing. On some architectures, these semantics are guaranteed by the processor, while on others they must be specified explicitly. Most programmers don't need to worry directly about these terms, but may see them in low-level code.

CPU Internals and Performance

The CPU is the heart of any computer architecture, responsible for executing instructions and controlling all surrounding components. It's like the conductor in an orchestra, without which the other components are useless.

The CPU has many sub-components that work together to perform tasks, much like a physical production line. The Arithmetic Logic Unit (ALU) is the heart of CPU operation, performing operations like addition, subtraction, and multiplication.

Here are some key components of the CPU and their functions:

- Arithmetic Logic Unit (ALU): performs arithmetic and logical operations

- Address Generation Unit (AGU): calculates the memory addresses used to move values between cache or main memory and the registers

- Registers: store data temporarily while it's being processed

The CPU's performance is measured in various ways, including instructions per second (IPS), floating-point operations per second (FLOPS), and benchmarks. These metrics help designers optimize power consumption and performance in computer architecture.

Pipelining and Parallelism

Pipelining and parallelism are two powerful methods used to speed up processing in computer architecture. Pipelining overlaps the execution of multiple instructions: while one instruction is being executed, the next can be decoded and the one after that fetched, similar to a factory assembly line.

This method significantly increases the number of instructions processed per unit of time, and it comes in two types: instruction pipelines and arithmetic pipelines. Instruction pipelines read consecutive instructions from memory while earlier ones are still being decoded and executed, while arithmetic pipelines break operations such as fixed-point multiplication and floating-point arithmetic into stages.
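A rough way to see the benefit of an instruction pipeline is to count clock cycles. The sketch below assumes an ideal pipeline in which every stage takes one cycle and there are no stalls or branch mispredictions, so it gives an upper bound on the speedup rather than a measured figure. With `k` stages and `n` instructions, the first instruction needs `k` cycles to fill the pipeline and each later one completes one cycle after the previous, for `k + n - 1` cycles instead of `n * k`. The function names are illustrative.

```python
def unpipelined_cycles(n: int, k: int) -> int:
    """Each instruction passes through all k stages before the next one starts."""
    return n * k


def pipelined_cycles(n: int, k: int) -> int:
    """Ideal k-stage pipeline: k cycles to fill, then one instruction per cycle."""
    return 0 if n == 0 else k + n - 1


def speedup(n: int, k: int) -> float:
    return unpipelined_cycles(n, k) / pipelined_cycles(n, k)


STAGES = 4          # fetch, decode, execute, store
INSTRUCTIONS = 100

print(unpipelined_cycles(INSTRUCTIONS, STAGES))   # 400
print(pipelined_cycles(INSTRUCTIONS, STAGES))     # 103
print(round(speedup(INSTRUCTIONS, STAGES), 2))    # 3.88
```

As the number of instructions grows, the speedup approaches the number of stages, which is why deeper pipelines were attractive until stalls and mispredictions started to eat into the gains.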

Parallelism, on the other hand, entails using multiple processors or cores to process data simultaneously. This collaborative approach allows large amounts of data to be processed quickly.

Computer architecture employs two types of parallelism: data parallelism and task parallelism. Data parallelism involves executing the same task with multiple cores and different sets of data, while task parallelism involves performing different tasks with multiple cores and the same or different data.
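The two kinds of parallelism can be sketched with Python's standard `concurrent.futures` module; the function names below are illustrative. One caveat: in CPython, threads do not execute Python bytecode on several cores at once because of the global interpreter lock, so for CPU-bound work you would swap `ThreadPoolExecutor` for `ProcessPoolExecutor`, which offers the same `submit` and `map` interface.

```python
from concurrent.futures import ThreadPoolExecutor


def square(x: int) -> int:
    return x * x


def data_parallel(data, workers: int = 4):
    """Data parallelism: the same task (square) runs on different pieces of data."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(square, data))  # map keeps results in input order


def task_parallel(data):
    """Task parallelism: different tasks run concurrently on the same data."""
    with ThreadPoolExecutor(max_workers=3) as pool:
        futures = {
            "sum": pool.submit(sum, data),
            "min": pool.submit(min, data),
            "max": pool.submit(max, data),
        }
        return {name: future.result() for name, future in futures.items()}


if __name__ == "__main__":
    print(data_parallel([1, 2, 3, 4]))      # [1, 4, 9, 16]
    print(task_parallel([3, 1, 4, 1, 5]))   # {'sum': 14, 'min': 1, 'max': 5}
```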

Multicore processors are what make parallelism practical: by spreading work across multiple cores, computers can process data much faster and more efficiently.

CPU Internals

The CPU is the heart of any computer, and it's made up of many sub-components that work together to perform various tasks. These sub-components are like a production line, where each step has a specific task to perform.

The CPU has a register file, which is the collective name for the registers inside the CPU. Registers are like temporary storage spaces for data, and they're used to speed up processing. The CPU has two main types of registers: integer registers and floating-point registers.

The Arithmetic Logic Unit (ALU) is the heart of the CPU operation. It takes values in registers and performs any of the multitude of operations the CPU is capable of. All modern processors have a number of ALUs, so each can be working independently.

The Address Generation Unit (AGU) calculates the memory addresses needed to load values from cache and main memory into the registers for the ALU to operate on, and to store values from the registers back into main memory. This is like a messenger service, making sure the right data is in the right place at the right time.


The CPU's components work together to execute instructions, load values from memory, and store values in memory. Without a functioning CPU, the other components are still there, but there's no computing.

Branch Prediction

Branch prediction is a crucial aspect of CPU performance, and it's based on the idea that most branches are predictable. Many branches, such as the one that closes a loop, go the same way most of the time, which means the CPU can make an educated guess about what will happen next.

The CPU uses a branch prediction mechanism to guess whether a branch will be taken or not. This mechanism is based on the idea that if a branch is taken frequently, it's likely to be taken again in the future. If a branch is not taken frequently, it's likely to be not taken again in the future.

For example, in the case of a loop, the CPU can predict that the branch will be taken because the loop will be executed multiple times. This allows the CPU to start executing the instructions in the loop body before the outcome of the branch is actually known.


However, if the CPU predicts incorrectly, it can result in a pipeline flush, which can significantly slow down the CPU. This is why branch prediction is such a critical aspect of CPU performance.

The simplest schemes are static: "predict taken" or "predict not taken" always assumes the same outcome for a branch. Dynamic predictors instead base each prediction on the branch's past behavior and adjust it as the outcomes change.
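A classic way to base predictions on past behavior is a 2-bit saturating counter for each branch. The sketch below illustrates that textbook scheme rather than the predictor of any particular CPU; the `TwoBitPredictor` class and the branch address are hypothetical. The counter moves one step toward "taken" or "not taken" after every outcome, so a single surprise does not immediately flip the prediction.

```python
class TwoBitPredictor:
    """One 2-bit saturating counter per branch: 0-1 predict not taken, 2-3 predict taken."""

    def __init__(self) -> None:
        self.counters = {}  # branch address -> counter value (0..3)

    def predict(self, branch: int) -> bool:
        # Unseen branches start at 1, "weakly not taken".
        return self.counters.get(branch, 1) >= 2

    def update(self, branch: int, taken: bool) -> None:
        state = self.counters.get(branch, 1)
        # Step toward the actual outcome, saturating at 0 and 3.
        self.counters[branch] = min(state + 1, 3) if taken else max(state - 1, 0)


def accuracy(predictor: TwoBitPredictor, branch: int, outcomes) -> float:
    """Fraction of outcomes predicted correctly, training the predictor as it goes."""
    correct = 0
    for taken in outcomes:
        if predictor.predict(branch) == taken:
            correct += 1
        predictor.update(branch, taken)
    return correct / len(outcomes)


# A loop branch: taken 9 times, then not taken on exit; the whole loop runs 3 times.
loop_outcomes = ([True] * 9 + [False]) * 3
print(accuracy(TwoBitPredictor(), 0x400, loop_outcomes))
```

For this loop the predictor is right 26 out of 30 times: it misses on the very first iteration and then only once at each loop exit. A 1-bit predictor that simply repeats the last outcome would also miss on the first iteration of every later pass, which is the motivation for the second bit.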

Memory Hierarchy and I/O

A computer's memory stores data, whether it's instructions for the CPU or permanent data, since its main purpose is to hold information.

The memory can be broken down into primary and secondary storage. This is a traditional way of thinking about memory, but it's still a useful concept to understand.

Let's take a closer look at the types of storage. Primary storage is where the computer's operating system and programs are loaded, while secondary storage is where data is stored for longer periods of time.

There are three main types of I/O systems: Programmed I/O, Interrupt-Driven I/O, and Direct Memory Access (DMA). Programmed I/O is where the CPU directly issues a command to the I/O module and waits for it to be executed.

Interrupt-Driven I/O is where the CPU moves on to other tasks after issuing a command to the I/O system, and the I/O module raises an interrupt when the operation is done. This is more efficient than Programmed I/O, because the CPU doesn't sit idle waiting and can multitask.

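Direct Memory Access (DMA) is where data is transferred between memory and I/O devices without passing through the CPU: the CPU only sets up the transfer and is notified when it completes. This makes DMA the most efficient of the three for large transfers, since the CPU is free to do other work while the data moves.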

The three standard I/O interfaces used for physically connecting hardware devices to a computer are Peripheral Component Interconnect (PCI), Small Computer System Interface (SCSI), and Universal Serial Bus (USB).

CPU Architecture Types

There are four main types of computer architecture: Von Neumann architecture, Harvard architecture, Modified Harvard Architecture, and the RISC & CISC Architectures. The fundamentals of computer architecture remain the same despite rapid advancements in computing.

The central processing unit (CPU) is at the core of any computer architecture, controlling all its surrounding components with instructions written as binary bits. This hardware component is like the conductor in an orchestra, without which the other components are still there but waiting for instructions.

The CPU's components are so important that without a functioning CPU, the other components are useless. RISC & CISC Architectures are two different approaches to computer processors that determine how they handle instructions. RISC processors are designed with a set of basic, well-defined instructions that are typically fixed-length and easy for the processor to decode and execute quickly.

The RISC approach is beneficial because it allows for faster clock speeds and potentially lower power consumption, making it suitable for use in smartphones and tablets. CISC processors, on the other hand, have a wider range of instructions, including some very complex ones that can perform multiple operations in a single instruction. This can be more concise for programmers but can take the processor more time to decode and execute.

Most modern architectures would be considered RISC architectures due to several advantages, including more space inside the chip for registers, which are the fastest type of memory. This leads to higher performance and makes pipelining easier, since a pipeline needs a constant stream of instructions fed into the processor.

The Four Types

Despite the rapid advancement of computing, the fundamentals of computer architecture remain the same.

There are four main types of computer architecture, and understanding them is essential for anyone looking to learn about CPU architecture.

The Von Neumann architecture is one of the most well-known types, but it's not the only one.

Harvard architecture is another type, which has a different setup of its components compared to Von Neumann architecture.

Modified Harvard Architecture is a variation of the Harvard architecture, with some key differences in its setup.

RISC & CISC architectures make up the fourth type: two distinct approaches to how a processor handles instructions.

These four types mostly share the same base components, but the setup of these components is what makes them differ.

RISC & CISC

RISC & CISC are two primary approaches to processor architecture. They're like two different cooking methods, each with its own strengths and weaknesses.

RISC (Reduced Instruction Set Computing) processors are designed with a set of basic, well-defined instructions that are typically fixed-length and easy for the processor to decode and execute quickly. This leads to faster clock speeds and potentially lower power consumption.

Examples of RISC processors include ARM processors commonly found in smartphones and tablets, and MIPS processors used in some embedded systems.
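The fixed-length encoding is what makes RISC decoding fast. As an illustration, every MIPS32 R-type instruction is 32 bits wide with its fields at fixed bit positions, so a decoder needs only shifts and masks. The `decode_r_type` helper is a hypothetical name; the field layout follows the MIPS32 R-type format, and `0x012A4020` is the encoding of `add $t0, $t1, $t2`.

```python
def decode_r_type(word: int) -> dict:
    """Split a 32-bit MIPS R-type instruction into its fixed-position fields."""
    return {
        "opcode": (word >> 26) & 0x3F,  # bits 31-26 (0 for R-type)
        "rs": (word >> 21) & 0x1F,      # bits 25-21: first source register
        "rt": (word >> 16) & 0x1F,      # bits 20-16: second source register
        "rd": (word >> 11) & 0x1F,      # bits 15-11: destination register
        "shamt": (word >> 6) & 0x1F,    # bits 10-6: shift amount
        "funct": word & 0x3F,           # bits 5-0: selects the ALU operation
    }


# add $t0, $t1, $t2  ($t0 is register 8, $t1 is 9, $t2 is 10)
fields = decode_r_type(0x012A4020)
print(fields)  # {'opcode': 0, 'rs': 9, 'rt': 10, 'rd': 8, 'shamt': 0, 'funct': 32}
```

By contrast, an x86 instruction can be anywhere from 1 to 15 bytes long, so the decoder must examine its leading bytes before it even knows where the instruction ends.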

CISC (Complex Instruction Set Computing) processors, on the other hand, have a wider range of instructions, including some very complex ones that can perform multiple operations in a single instruction. This can be more concise for programmers but can take the processor more time to decode and execute.

Examples of CISC processors include Intel's x86 processors, which are used in most personal computers, and the Motorola 68000 family, which was used in older Apple computers.


RISC processors are designed to execute simple instructions efficiently, while CISC processors aim to provide a comprehensive set of instructions to handle a wide range of tasks. Ultimately, both approaches achieve the same goal, but in different ways.

Harvard

The Harvard architecture is a type of computer architecture that separates data and instructions, allocating separate data, addresses, and control buses for the separate memories.

This setup allows instructions and data to be fetched concurrently, minimizing idle time and reducing the chance of data corruption. The Harvard architecture was named after the Harvard Mark I, a computer built by IBM and located at Harvard University.

Separate buses in the Harvard architecture reduce the need for waiting for data and instructions to be processed, making it potentially faster than other architectures. However, this setup also requires a more complex architecture that can be challenging to develop and implement.

The Harvard architecture's separate memory units can be optimized for their specific purposes, such as read-only instruction memory and fast read/write data memory.

Power and Performance

Power consumption is a major consideration in computer architecture, as it directly affects the operating costs and lifespan of the machine. Nearly all the power a chip consumes is dissipated as heat, so a design that ignores power consumption can overheat and require more expensive cooling.

Power dissipation can be mitigated by using techniques like Dynamic Voltage and Frequency Scaling (DVFS), which lowers the supply voltage and clock frequency when less performance is needed. Clock gating and power gating are also effective methods, shutting off the clock signal and power to circuit blocks when they're not in use.
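Why scaling voltage and frequency together is so effective follows from the standard approximation for dynamic (switching) power, P ≈ α·C·V²·f, where α is the activity factor, C the switched capacitance, V the supply voltage, and f the clock frequency. The numbers below are hypothetical operating points chosen only to illustrate the arithmetic.

```python
def dynamic_power(activity: float, capacitance: float, voltage: float, frequency: float) -> float:
    """Approximate dynamic (switching) power in watts: P = alpha * C * V^2 * f."""
    return activity * capacitance * voltage ** 2 * frequency


# Hypothetical operating points for one core.
full_speed = dynamic_power(activity=0.2, capacitance=1e-9, voltage=1.0, frequency=3.0e9)
scaled_down = dynamic_power(activity=0.2, capacitance=1e-9, voltage=0.8, frequency=2.4e9)

print(f"{full_speed:.2f} W")                # 0.60 W
print(f"{scaled_down / full_speed:.3f}")    # 0.512
```

Lowering both voltage and frequency by 20% cuts dynamic power to about 51% of its original value, because power scales with V² times f. The formula does not cover static (leakage) power, which is what power gating targets; clock gating reduces the dynamic term by stopping the switching activity of idle blocks.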

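Performance is another crucial aspect of computer architecture, and it's measured in various ways. Instructions per second (IPS) is a common metric: it counts how many instructions the processor completes each second, which depends on both the clock frequency and the number of instructions completed per clock cycle.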

FLOPS, or floating-point operations per second, measures numerical computing performance. Benchmarks, on the other hand, measure how long the computer takes to complete a series of test programs.

Here are some key performance metrics:

- Instructions per second (IPS)

- Floating-point operations per second (FLOPS)

- Benchmarks
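These metrics are related by simple arithmetic. The sketch below assumes hypothetical processors described only by clock frequency and average instructions per cycle (IPC); the function names and all the numbers are illustrative.

```python
def ips(clock_hz: float, ipc: float) -> float:
    """Instructions per second = clock frequency x average instructions per cycle."""
    return clock_hz * ipc


def execution_time(instruction_count: float, clock_hz: float, ipc: float) -> float:
    """Seconds needed to run a program of instruction_count instructions."""
    return instruction_count / ips(clock_hz, ipc)


def peak_flops(cores: int, clock_hz: float, flops_per_cycle: float) -> float:
    """Theoretical peak FLOPS = cores x clock frequency x FLOPs per cycle per core."""
    return cores * clock_hz * flops_per_cycle


# Hypothetical processors: the faster clock does not win on its own.
cpu_a = ips(clock_hz=3.0e9, ipc=2.0)    # 6.0e9 instructions per second
cpu_b = ips(clock_hz=3.5e9, ipc=1.5)    # 5.25e9 instructions per second
print(cpu_a > cpu_b)                    # True
print(execution_time(1.2e10, 3.0e9, 2.0))
print(peak_flops(cores=8, clock_hz=3.0e9, flops_per_cycle=16))
```

Processor B has the higher clock, yet A completes more instructions per second because it does more work per cycle. Peak FLOPS is similarly a theoretical ceiling, which is why benchmarks that time real programs remain necessary.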

Frequently Asked Questions

What are the 3 parts of the computer architecture?

As described earlier, a computer's architecture can be divided into three main layers: hardware, firmware, and software. Understanding these layers is essential to grasping how computers work and perform tasks.

What are the 5 basic units of computer architecture?

The 5 basic functional units of a computer are the input unit, the output unit, the memory (storage) unit, the Arithmetic Logic Unit (ALU), and the Control Unit (CU); the ALU and CU together make up the CPU. These units work together to enable a computer to process and store information, and understanding them is essential for building and maintaining a computer system.

