Machine learning is a subset of artificial intelligence that involves training algorithms to make predictions or decisions based on data. In the context of statistical learning, machine learning is a tool used to find patterns and relationships within data.

The Elements of Statistical Learning and its accompanying solution notes provide a framework for approaching machine learning problems: understand the data, select an appropriate algorithm, and tune the model to achieve the best results.

A key concept in machine learning is the idea of overfitting, which occurs when a model is too complex and fits the noise in the training data rather than the underlying patterns. This can be mitigated by using regularization techniques, such as L1 and L2 regularization, to simplify the model.

Regularization helps prevent overfitting by adding a penalty on the size of the model's coefficients to the loss function. The penalty discourages overly flexible fits, so the model generalizes better to data it has not seen.
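To make the penalty term concrete, here is a minimal sketch of a penalized loss, assuming a squared-error loss and a tuning parameter called `lam`. The function name `penalized_loss` and the toy numbers are illustrative assumptions, not taken from the book.

```python
def penalized_loss(y, y_hat, coefs, lam, penalty="l2"):
    """Residual sum of squares plus an L1 or L2 penalty on the coefficients."""
    rss = sum((yi - pi) ** 2 for yi, pi in zip(y, y_hat))
    if penalty == "l1":
        size = sum(abs(b) for b in coefs)   # lasso-style penalty
    elif penalty == "l2":
        size = sum(b * b for b in coefs)    # ridge-style penalty
    else:
        raise ValueError("penalty must be 'l1' or 'l2'")
    return rss + lam * size


# Toy values, chosen only for illustration.
y = [1.0, 2.0, 3.0]
y_hat = [1.0, 2.5, 2.5]
coefs = [3.0, -4.0]

loss_unpenalized = penalized_loss(y, y_hat, coefs, lam=0.0)
loss_l1 = penalized_loss(y, y_hat, coefs, lam=0.1, penalty="l1")
loss_l2 = penalized_loss(y, y_hat, coefs, lam=0.1, penalty="l2")
```

With the same fit, larger coefficients raise the penalized loss, and the L2 penalty grows faster than the L1 penalty as coefficients get large.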

Supervised Learning

Supervised learning is a type of machine learning where the algorithm is trained on labeled data, allowing it to learn from the relationships between inputs and outputs.

The Elements of Statistical Learning 2e provides a comprehensive overview of supervised learning, with a focus on linear methods, including linear regression and logistic regression.

Supervised learning is widely used in many applications, including image classification, speech recognition, and natural language processing.

The Elements of Statistical Learning 2e notes that supervised learning can be classified into two main categories: regression and classification, depending on the type of output variable.

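Supervised learning models are commonly fit with methods such as least squares or maximum likelihood estimation. Cross-validation plays a different role: it estimates how well a fitted model predicts unseen data and is used to tune and compare models, not to train them.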
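As a rough illustration of cross-validation, the sketch below estimates held-out error for a one-predictor least-squares line. The helper names `fit_line` and `k_fold_mse`, the interleaved fold assignment (no shuffling), and the toy data are all assumptions made for this example.

```python
def fit_line(xs, ys):
    """Least-squares intercept and slope for a single predictor."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


def k_fold_mse(xs, ys, k):
    """Average held-out mean squared error over k interleaved folds."""
    n = len(xs)
    fold_errors = []
    for fold in range(k):
        test_idx = [i for i in range(n) if i % k == fold]
        train_idx = [i for i in range(n) if i % k != fold]
        intercept, slope = fit_line(
            [xs[i] for i in train_idx], [ys[i] for i in train_idx]
        )
        # Score the fit only on observations it did not see.
        errors = [(ys[i] - (intercept + slope * xs[i])) ** 2 for i in test_idx]
        fold_errors.append(sum(errors) / len(errors))
    return sum(fold_errors) / k


xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0, 11.0]   # exactly y = 1 + 2x, so held-out error is ~0
cv_error = k_fold_mse(xs, ys, k=3)
```

In practice the data would usually be shuffled before splitting, and the same procedure would be repeated for each candidate model or tuning value so their estimated errors can be compared.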

By understanding the principles of supervised learning, data scientists can build models that predict and classify new data accurately and reliably.

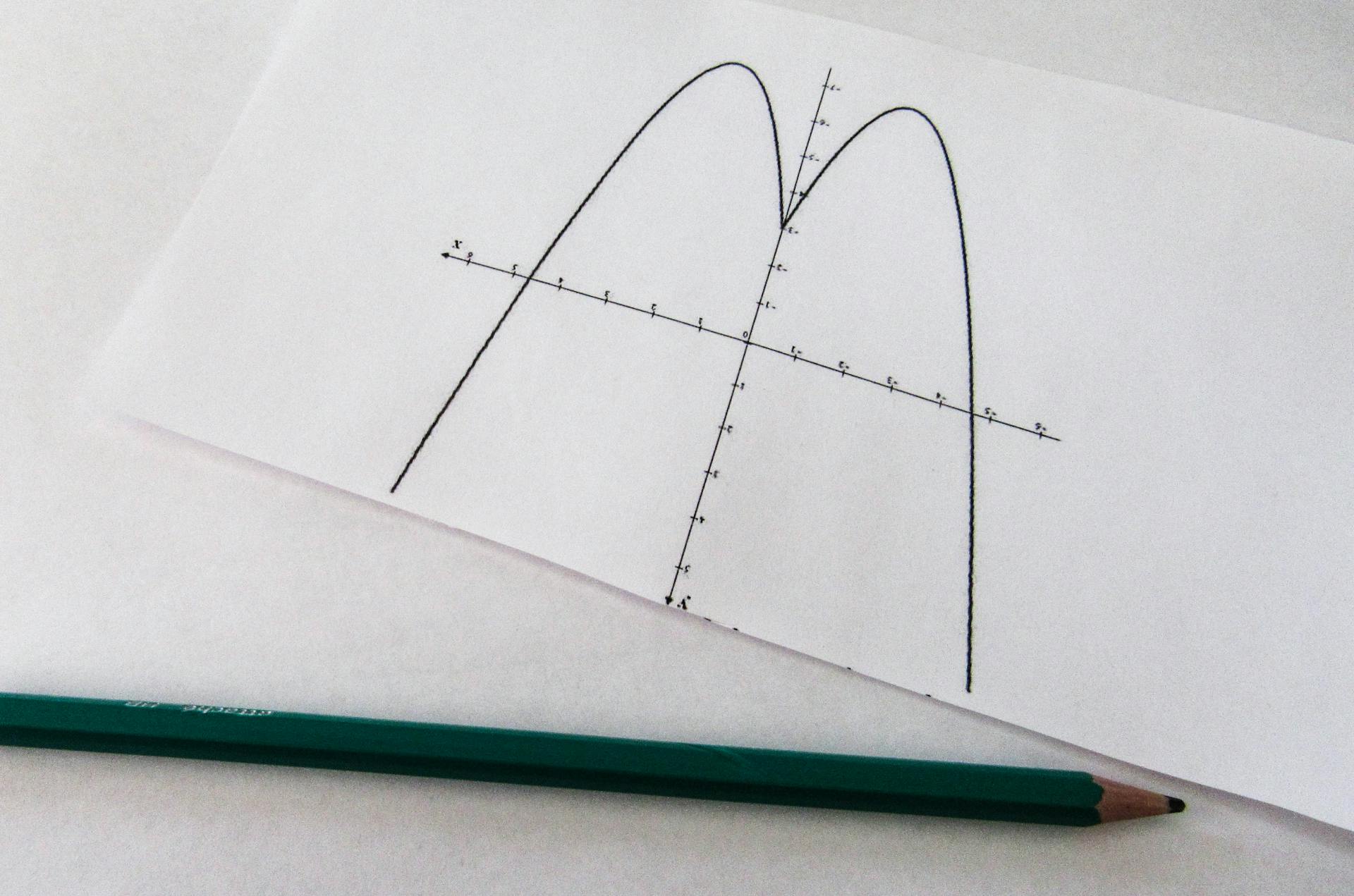

Linear Models

Linear models are a fundamental concept in statistical learning, and understanding them is crucial for making predictions and understanding relationships between variables.

Simple linear regression is a type of linear model that involves predicting a continuous outcome variable based on a single predictor variable. This can be a powerful tool for understanding relationships between variables, as seen in Chapter 3.

To implement simple linear regression, you need to estimate the regression coefficients (the intercept and the slope), which can be done with the closed-form least-squares formulas or with software. In Chapter 3, you can learn how to calculate confidence intervals and perform hypothesis tests to assess whether the estimated relationship is statistically significant.
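The closed-form calculation can be sketched as follows. This is a minimal example under the usual least-squares assumptions; the function name, the returned dictionary keys, and the five data points are made up for illustration.

```python
import math


def simple_linear_regression(xs, ys):
    """Least-squares fit of y = intercept + slope * x, with the slope's standard error."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    rss = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    sigma_hat = math.sqrt(rss / (n - 2))   # residual standard error
    se_slope = sigma_hat / math.sqrt(sxx)
    return {
        "intercept": intercept,
        "slope": slope,
        "se_slope": se_slope,
        "t_slope": slope / se_slope,       # statistic for testing slope = 0
    }


xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.0, 4.0, 5.0, 4.0, 5.0]
fit = simple_linear_regression(xs, ys)   # slope 0.6, intercept 2.2
```

A confidence interval for the slope is the estimate plus or minus a t-distribution quantile times `se_slope`. The quantile is not computed here because the Python standard library does not provide one.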

Multiple linear regression is another type of linear model that involves predicting a continuous outcome variable based on multiple predictor variables. This can be a more powerful tool than simple linear regression, but it also requires more data and computational resources. In Chapter 3, you can learn how to interpret regression coefficients and select the best model for your data.
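With several predictors, the least-squares coefficients solve the normal equations. The sketch below does this in plain Python with a small Gaussian-elimination helper. The names `solve` and `fit_ols` and the toy data are assumptions for illustration, and a numerical library would normally replace the hand-written solver.

```python
def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]   # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):                   # back substitution
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def fit_ols(rows, ys):
    """Coefficients [intercept, b1, ..., bp] from the normal equations X'X b = X'y."""
    design = [[1.0] + list(row) for row in rows]
    p = len(design[0])
    xtx = [[sum(d[i] * d[j] for d in design) for j in range(p)] for i in range(p)]
    xty = [sum(d[i] * y for d, y in zip(design, ys)) for i in range(p)]
    return solve(xtx, xty)


rows = [(1.0, 2.0), (2.0, 1.0), (3.0, 4.0), (4.0, 3.0), (5.0, 6.0)]
ys = [1.0 + 2.0 * x1 - 1.0 * x2 for x1, x2 in rows]   # noise-free toy response
coefs = fit_ols(rows, ys)   # approximately [1.0, 2.0, -1.0]
```

Each coefficient is interpreted as the expected change in the outcome for a one-unit change in that predictor with the other predictors held fixed.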

Linear model selection is an important aspect of linear modeling, as it allows you to choose the best model for your data based on a variety of criteria. In Chapter 6, you can learn about different methods for linear model selection, including best subset selection, forward stepwise selection, and backward stepwise selection.
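Forward stepwise selection can be sketched as a greedy loop that, at each step, adds the predictor giving the largest drop in residual sum of squares. The code below is a minimal illustration on made-up data. The helper names are hypothetical, and the final choice of model size (for example by cross-validation) is deliberately left out.

```python
def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def rss_for(rows, ys, subset):
    """Residual sum of squares of the least-squares fit using only `subset` columns."""
    design = [[1.0] + [row[j] for j in subset] for row in rows]
    p = len(design[0])
    xtx = [[sum(d[i] * d[j] for d in design) for j in range(p)] for i in range(p)]
    xty = [sum(d[i] * y for d, y in zip(design, ys)) for i in range(p)]
    beta = solve(xtx, xty)
    return sum(
        (y - sum(b * v for b, v in zip(beta, d))) ** 2 for d, y in zip(design, ys)
    )


def forward_stepwise(rows, ys):
    """Return [(chosen predictor indices, RSS)] after each greedy addition."""
    remaining = list(range(len(rows[0])))
    chosen, path = [], []
    while remaining:
        best = min(remaining, key=lambda j: rss_for(rows, ys, chosen + [j]))
        chosen.append(best)
        remaining.remove(best)
        path.append((list(chosen), rss_for(rows, ys, chosen)))
    return path


rows = [(1.0, 2.0, 1.0), (2.0, 1.0, 0.0), (3.0, 4.0, 0.0),
        (4.0, 3.0, 1.0), (5.0, 6.0, 0.0), (6.0, 5.0, 1.0)]
ys = [0.5 * r[0] + 3.0 * r[1] for r in rows]   # third predictor is irrelevant
path = forward_stepwise(rows, ys)
entry_order = [subset[-1] for subset, _ in path]   # [1, 0, 2]
```

The path holds one candidate model per size. Training RSS always falls as predictors are added, so a criterion that estimates test error is needed to pick among the candidates.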

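Alongside subset selection, two related families of methods are commonly used with linear models: shrinkage (regularization) and dimension reduction, described below.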

Regularization is a technique used to prevent overfitting in linear models by adding a penalty term to the model's loss function. In Chapter 6, you can learn about different types of regularization, including ridge regression and the lasso.

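Shrinkage methods, such as ridge regression and the lasso, shrink the coefficient estimates toward zero, which reduces their variance. The lasso can also set some coefficients exactly to zero, so it performs variable selection as well. In Chapter 6, you can learn how to tune the penalty parameter of these methods, typically by cross-validation, to achieve the best results.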
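The effect of shrinkage is easiest to see with a single centered predictor, where both estimates have closed forms. The sketch below assumes the objectives are RSS plus `lam * b**2` for ridge and RSS plus `lam * abs(b)` for the lasso. The function names and data are illustrative only.

```python
def soft_threshold(z, lam):
    """Move z toward zero by lam, returning exactly 0 when |z| <= lam."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0


def one_predictor_fits(xs, ys, lam):
    """Slope estimates on centered data: unpenalized, ridge, and lasso."""
    x_bar = sum(xs) / len(xs)
    y_bar = sum(ys) / len(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    ols = sxy / sxx
    ridge = sxy / (sxx + lam)                    # minimizes RSS + lam * b**2
    lasso = soft_threshold(sxy, lam / 2) / sxx   # minimizes RSS + lam * |b|
    return ols, ridge, lasso


xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.0, 4.0, 5.0, 4.0, 5.0]

mild = one_predictor_fits(xs, ys, lam=4.0)     # all three slopes are nonzero
strong = one_predictor_fits(xs, ys, lam=20.0)  # lasso slope is exactly 0.0
```

Ridge shrinks the slope smoothly and never reaches zero for a finite penalty, while the lasso drops the predictor entirely once the penalty is large enough.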

Dimension reduction techniques, such as principal components regression and partial least squares, replace the original predictors with a smaller number of linear combinations of them and then fit a regression on those combinations, preserving the most important information. In Chapter 6, you can learn how to implement these techniques and interpret the results.
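As a rough sketch of principal components regression with one component, the code below finds the leading principal direction by power iteration and regresses the response on the resulting scores. Several simplifications are assumed: predictors are centered but not standardized, the starting vector is not orthogonal to the leading direction, and all names and data are made up for illustration.

```python
import math


def center(column):
    mean = sum(column) / len(column)
    return [v - mean for v in column]


def first_direction(cols, iters=100):
    """Leading principal direction of centered columns, found by power iteration."""
    p = len(cols)
    gram = [[sum(a * b for a, b in zip(cols[i], cols[j])) for j in range(p)]
            for i in range(p)]
    v = [1.0 / math.sqrt(p)] * p   # assumed start, not orthogonal to the answer
    for _ in range(iters):
        w = [sum(gram[i][j] * v[j] for j in range(p)) for i in range(p)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return v


def pcr_one_component(rows, ys):
    """Return the direction and the implied coefficients on the centered predictors."""
    cols = [center(list(col)) for col in zip(*rows)]
    y_c = center(ys)
    v = first_direction(cols)
    # Scores: projection of each observation onto the principal direction.
    scores = [sum(v[j] * cols[j][i] for j in range(len(cols)))
              for i in range(len(ys))]
    theta = sum(z * y for z, y in zip(scores, y_c)) / sum(z * z for z in scores)
    return v, [theta * vj for vj in v]


rows = [(1.0, 2.0), (2.0, 1.0), (3.0, 4.0), (4.0, 3.0)]
ys = [x1 + x2 for x1, x2 in rows]
direction, beta = pcr_one_component(rows, ys)
```

Here the two predictors are collapsed into one combined score. The regression is fit on that single score and then mapped back to coefficients on the original predictors.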
