Semisupervised learning is a type of machine learning that falls between supervised and unsupervised learning. It's a hybrid approach that uses both labeled and unlabeled data to train a model.

In a typical supervised learning scenario, a model is trained on a large dataset of labeled examples, where each example is associated with a specific output. However, collecting and labeling a large dataset can be time-consuming and expensive.

Semisupervised learning, on the other hand, trains on a small labeled set together with a larger pool of unlabeled data. This approach can be more efficient than traditional supervised learning, because the unlabeled data reveals structure in the inputs that the labeled examples alone may not show.

By using both labeled and unlabeled data, semisupervised learning can help to reduce the need for manual labeling, which can be a major bottleneck in traditional supervised learning.

What is Semisupervised Learning?

Semi-supervised learning is a machine learning technique that uses a small portion of labeled data and lots of unlabeled data to train a predictive model. This approach bridges the gap between supervised and unsupervised learning, offering a more efficient and cost-effective way to train models.

Unlike supervised learning, semi-supervised learning uses a small amount of labeled data, which reduces expenses on manual annotation and cuts data preparation time. This is particularly useful when there is a large amount of unlabeled data available, but it's too expensive or difficult to label all of it.

Semi-supervised learning has a wide range of applications, including classification, regression, clustering, and association. It is particularly useful in scenarios where a large amount of unlabeled data is available, such as fraud detection, where a company with 10 million users can have a small portion of transactions labeled as fraudulent or not and use the remaining unlabeled transactions to help train the classifier.

The goal of semi-supervised learning is to learn a function that can accurately predict the output variable based on the input variables, similar to supervised learning. However, unlike supervised learning, the algorithm is trained on a dataset that contains both labeled and unlabeled data.

Here are some key advantages of semi-supervised learning:

- Uses a small amount of labeled data, reducing expenses on manual annotation and cutting data preparation time.

- Works for a variety of problems, including classification, regression, clustering, and association.

- Can reach accuracy close to that of a fully supervised model while using far fewer labels, provided the unlabeled data is representative.

Overall, semi-supervised learning offers a powerful approach to machine learning that can be used to solve a wide range of problems.

Types of Semisupervised Learning

Semisupervised learning is a powerful technique that can be used when labeled data is scarce, but unlabeled data is abundant. Self-training is a simple example of semi-supervised learning, where a supervised model is modified to work with both labeled and unlabeled data.

The standard workflow for self-training involves training a base model with a small amount of labeled data, applying pseudo-labeling to generate labels for the unlabeled data, and then retraining the model with the combined labeled and pseudo-labeled data.
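The workflow above can be sketched in a few lines of plain Python. This is a minimal illustration rather than a reference implementation: the nearest-centroid base model, the distance-based confidence score, and the names `fit_centroids`, `predict_with_confidence`, `self_train`, and `threshold` are all assumptions made for the example, and it assumes at least two classes.

```python
from statistics import mean

def fit_centroids(X, y):
    """Toy base model (an assumption): one mean vector per class."""
    centroids = {}
    for label in set(y):
        rows = [x for x, t in zip(X, y) if t == label]
        centroids[label] = [mean(col) for col in zip(*rows)]
    return centroids

def predict_with_confidence(centroids, x):
    """Return (label, confidence); confidence lies in [0.5, 1]."""
    dists = sorted(
        (sum((a - b) ** 2 for a, b in zip(x, c)) ** 0.5, label)
        for label, c in centroids.items()
    )
    (d1, label), (d2, _) = dists[0], dists[1]
    confidence = 1.0 - d1 / (d1 + d2) if (d1 + d2) > 0 else 0.5
    return label, confidence

def self_train(X_lab, y_lab, X_unlab, threshold=0.8, max_rounds=10):
    """Train, pseudo-label confident points, retrain; repeat until nothing is added."""
    X_train, y_train = list(X_lab), list(y_lab)
    pool = list(X_unlab)
    model = fit_centroids(X_train, y_train)
    for _ in range(max_rounds):
        confident, remaining = [], []
        for x in pool:
            label, conf = predict_with_confidence(model, x)
            (confident if conf >= threshold else remaining).append((x, label))
        if not confident:
            break  # no pseudo-label is confident enough, so stop early
        X_train += [x for x, _ in confident]
        y_train += [label for _, label in confident]
        pool = [x for x, _ in remaining]
        model = fit_centroids(X_train, y_train)
    return model, pool

model, still_unlabeled = self_train(
    X_lab=[[0.0, 0.0], [10.0, 10.0]],
    y_lab=[0, 1],
    X_unlab=[[1.0, 1.0], [9.0, 9.0], [5.0, 5.0]],
)
print(model, still_unlabeled)
```

Points that never reach the confidence threshold stay in the unlabeled pool, which is one simple way to limit the damage from wrong pseudo-labels.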

Co-training is an extension of self-training that uses multiple supervised classifiers to improve performance. It involves training two or more classifiers on the labeled data, adding their most confident predictions to the labeled dataset, and then retraining the classifiers with the updated data.
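As a rough sketch of that loop, the example below trains one tiny classifier per "view" (here, simply one feature each) and lets each classifier add its most confident prediction to a shared labeled set. The 1-D nearest-mean classifier, the two-class setup, and the names `fit_view`, `predict_view`, `co_train`, and `per_round` are assumptions chosen to keep the example short.

```python
def fit_view(values, labels):
    """1-D nearest-mean classifier for a single view (an assumed toy model)."""
    means = {}
    for c in set(labels):
        vals = [v for v, t in zip(values, labels) if t == c]
        means[c] = sum(vals) / len(vals)
    return means

def predict_view(means, v):
    """Return (label, confidence) for a two-class problem; confidence in [0.5, 1]."""
    (d1, c1), (d2, _) = sorted((abs(v - m), c) for c, m in means.items())[:2]
    return c1, (1.0 - d1 / (d1 + d2) if d1 + d2 > 0 else 0.5)

def co_train(labeled, unlabeled, per_round=1, rounds=5):
    """labeled: list of ((view_a, view_b), label); unlabeled: list of (view_a, view_b)."""
    labeled, pool = list(labeled), list(unlabeled)
    for _ in range(rounds):
        if not pool:
            break
        y = [t for _, t in labeled]
        models = [fit_view([x[i] for x, _ in labeled], y) for i in (0, 1)]
        picked = {}
        for i, model in enumerate(models):
            # Rank the pool by this view's confidence, most confident first.
            scored = sorted(
                ((predict_view(model, x[i]), idx) for idx, x in enumerate(pool)),
                key=lambda item: -item[0][1],
            )
            for (label, _), idx in scored[:per_round]:
                picked.setdefault(idx, label)  # on conflict, the first view wins
        labeled += [(pool[idx], label) for idx, label in picked.items()]
        pool = [x for idx, x in enumerate(pool) if idx not in picked]
    y = [t for _, t in labeled]
    models = [fit_view([x[i] for x, _ in labeled], y) for i in (0, 1)]
    return models, labeled

models, grown = co_train(
    labeled=[((0.0, 0.0), 0), ((10.0, 10.0), 1)],
    unlabeled=[(1.0, 5.0), (5.0, 9.0), (9.0, 8.0)],
)
print(models, grown)
```

In this toy data each view is ambiguous about a different point, so each classifier ends up labeling an example that the other could not label confidently.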

Pseudo-labeling is a key component of both self-training and co-training, where a model is used to generate approximate labels for the unlabeled data based on the labeled data.

Here are the main types of semi-supervised learning methods:

- Self-training: uses a supervised model to generate pseudo-labels for unlabeled data

- Co-training: uses multiple supervised classifiers to improve performance

- Pseudo-labeling: generates approximate labels for unlabeled data based on labeled data

- Intrinsically semi-supervised methods: directly optimize an objective function with components for labeled and unlabeled samples

These methods can be used to improve the performance of a model when labeled data is scarce, but unlabeled data is abundant. By combining labeled and unlabeled data, semi-supervised learning can be a powerful tool for improving the accuracy of a model.

Techniques Used in Semisupervised Learning

Semi-supervised learning is a powerful technique that can help you train a model on a large dataset without manually labeling every single data point. This approach is particularly useful when you have a small labeled dataset and a large unlabeled dataset.

Self-training is a technique where you train a model on a small labeled dataset and then use it to predict labels for the unlabeled data. This process is repeated until the model converges.

Pseudo-labelling is the step within self-training where you use the model's predictions as labels for the unlabeled data. Because the true labels of these points are unknown, a common safeguard is to keep only the predictions whose confidence exceeds a chosen threshold.

The smoothness assumption is a key concept in semi-supervised learning, which states that the predictive model should be robust to local perturbations in its input. This means that when you perturb a data point with a small amount of noise, the predictions for the noisy and clean inputs should be similar.

Perturbation-based methods are a type of semi-supervised learning that incorporate the smoothness assumption into the learning algorithm. One way to do this is by adding noise to the input data points and incorporating the difference between the clean and noisy predictions into the loss function.
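To make the idea concrete, here is a minimal sketch of such a loss: a supervised cross-entropy term on the labeled points plus a penalty on the squared difference between predictions for clean and noisy copies of every input. The toy linear softmax model, Gaussian noise, and the names `total_loss`, `lam`, and `noise_std` are assumptions for illustration only.

```python
import math
import random

def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def linear_model(weights, x):
    """Toy classifier (an assumption): one weight vector per class, then softmax."""
    return softmax([sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in weights])

def cross_entropy(probs, label):
    return -math.log(probs[label])

def consistency_penalty(weights, x, noise_std, rng):
    """Squared difference between predictions on x and on a noisy copy of x."""
    noisy = [x_i + rng.gauss(0.0, noise_std) for x_i in x]
    clean_p, noisy_p = linear_model(weights, x), linear_model(weights, noisy)
    return sum((a - b) ** 2 for a, b in zip(clean_p, noisy_p))

def total_loss(weights, labeled, unlabeled, lam=1.0, noise_std=0.1, seed=0):
    """Supervised loss on labeled data plus lam times the consistency penalty on all inputs."""
    rng = random.Random(seed)
    supervised = sum(
        cross_entropy(linear_model(weights, x), y) for x, y in labeled
    ) / len(labeled)
    points = [x for x, _ in labeled] + list(unlabeled)
    unsupervised = sum(
        consistency_penalty(weights, x, noise_std, rng) for x in points
    ) / len(points)
    return supervised + lam * unsupervised

W = [[1.0, 0.0], [0.0, 1.0]]
labeled = [([2.0, 0.0], 0), ([0.0, 2.0], 1)]
unlabeled = [[1.5, 0.5], [0.3, 1.2]]
print(total_loss(W, labeled, unlabeled, lam=1.0, noise_std=0.5))
```

Note that the penalty needs no labels at all, which is why unlabeled points can contribute to it.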

Temporal ensembling is a technique that involves combining multiple perturbations of a network model to improve its robustness. This can be done by comparing the activations of the neural network at each epoch to the exponential moving average of the outputs of the network in previous epochs.
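The moving-average bookkeeping can be sketched as follows. The example assumes the network outputs for each epoch are already available as lists of class probabilities; the startup bias correction (dividing by `1 - alpha ** epoch`) and the names `update_ensemble`, `consistency_loss`, and `alpha` are assumptions made for this illustration.

```python
def update_ensemble(ensemble, current, alpha, epoch):
    """Blend this epoch's outputs into the running average; epoch counts from 1.

    Returns the new running average and bias-corrected targets for the next epoch.
    """
    new_ensemble = [
        [alpha * z_old + (1 - alpha) * z_new for z_old, z_new in zip(old, new)]
        for old, new in zip(ensemble, current)
    ]
    correction = 1 - alpha ** epoch  # compensates for the all-zero starting value
    targets = [[z / correction for z in row] for row in new_ensemble]
    return new_ensemble, targets

def consistency_loss(current, targets):
    """Mean squared difference between current outputs and the ensemble targets."""
    return sum(
        (p - t) ** 2
        for row_p, row_t in zip(current, targets)
        for p, t in zip(row_p, row_t)
    ) / len(current)

alpha = 0.6
ensemble = [[0.0, 0.0]]           # one sample, two classes
targets = None
outputs_per_epoch = [[[0.8, 0.2]], [[0.6, 0.4]]]  # made-up network outputs
for epoch, current in enumerate(outputs_per_epoch, start=1):
    if targets is not None:
        print(epoch, consistency_loss(current, targets))
    ensemble, targets = update_ensemble(ensemble, current, alpha, epoch)
```

During the first epoch there are no previous outputs to compare against, so the consistency term only starts contributing from the second epoch.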

The mean teacher is a semi-supervised learning technique that involves using a moving average of the weights to improve the robustness of the model. This is done by calculating the exponential moving average of the weights at each training iteration and comparing the resulting final-layer activations to the final-layer activations when using the latest set of weights.
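A minimal sketch of the two ingredients, the weight average and the comparison of outputs, is shown below. The toy sigmoid model and the names `ema_update`, `consistency_cost`, and `alpha` are assumptions for the example; a real setup would also update the student by gradient descent between the averaging steps.

```python
import math

def predict(weights, x):
    """Toy binary model (an assumption): sigmoid of a dot product."""
    return 1.0 / (1.0 + math.exp(-sum(w * v for w, v in zip(weights, x))))

def ema_update(teacher, student, alpha=0.99):
    """Move the teacher weights a small step toward the current student weights."""
    return [alpha * t + (1 - alpha) * s for t, s in zip(teacher, student)]

def consistency_cost(student, teacher, inputs):
    """Mean squared difference between student and teacher outputs."""
    return sum(
        (predict(student, x) - predict(teacher, x)) ** 2 for x in inputs
    ) / len(inputs)

student = [1.0, -1.0]
teacher = [0.0, 0.0]
inputs = [[0.5, 0.2], [1.0, 1.5]]  # labels are not needed for this term

for step in range(3):
    # In real training, a gradient step on the student would happen here.
    teacher = ema_update(teacher, student, alpha=0.9)
    print(step, consistency_cost(student, teacher, inputs))
```

Because the teacher is an average over many recent students, its outputs tend to change more slowly than the student's, which is what makes it a useful target.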

Advantages and Limitations

Semi-supervised learning offers several advantages over other approaches. Improved generalization is one of the key benefits, allowing the model to extract more information from labeled and unlabeled data and better understand the overall structure.

Semi-supervised learning can reduce the costs associated with labeling data, making it a more cost-effective option. Labeling data requires significant resources, both time and money, but semi-supervised learning can help alleviate this burden.

The flexibility and robustness of semi-supervised learning are also noteworthy. It provides flexibility in using different data types from different data sources, allowing models to adapt to various learning scenarios and be more robust to changes in the data distribution.

Some of the specific advantages of semi-supervised learning include:

- Improved generalization

- Cost optimization for labeling

- Flexibility and robustness

- Improved clustering quality

- Handling rare classes

- Combining prediction and discovery capabilities

Advantages

Semi-supervised learning offers several advantages over other training methods. Improved generalization is one of the key benefits, allowing models to extract more information from both labeled and unlabeled data and make more accurate predictions.

Semi-supervised learning can significantly reduce the costs associated with labeling data. By allowing the model to learn from unlabeled data, it can minimize the need for expensive and time-consuming labeling processes.

This approach also provides flexibility and robustness in using different data types from various sources. It enables models to adapt to various learning scenarios and become more resilient to changes in the data distribution.

Semi-supervised learning can improve clustering quality by using unlabeled data to refine the boundaries between clusters. This is particularly useful when dealing with rare classes, where only a small number of labeled examples are available.

By combining prediction and discovery capabilities, semi-supervised learning can enhance performance in various tasks, such as market analysis and anomaly detection.

Limitations

Semi-supervised learning, while offering many benefits, also has its limitations. Choosing a suitable model architecture and tuning parameters can be complex, requiring more time and resources for experimentation and optimization.

This complexity can lead to increased time and costs, as well as potential overfitting on noisy or irrelevant data. Noise in data can negatively impact the model's performance, degrading its generalization ability.

Semi-supervised learning often relies on the consistency assumption (closely related to the smoothness assumption), which states that examples close together in feature space should have the same labels. However, this assumption can be violated in real-world data, for instance near class boundaries, leading to errors.

Here are some key limitations of semi-supervised learning:

- Increased time and complexity in choosing architecture and parameters

- Noise in data can negatively impact the model's performance

- Consistency error due to violation of the consistency assumption

- Computational complexity, requiring more resources and time to train models

- Uncertainty in performance evaluation due to the quality of unlabeled data

These limitations can make semi-supervised learning challenging to implement in real-world scenarios, especially when resources and time are limited.

Applications and Examples

Semi-supervised learning has a wide range of applications across various industries, including finance, medical diagnostics, and more.

In the finance sector, companies like PayPal use semi-supervised learning for fraud detection in financial transactions. This involves training machine learning models on a large number of transactions to recognize repetitive patterns and identify deviations that may indicate fraudulent actions. PayPal's use of semi-supervised learning helps reduce the risk of financial losses due to fraudulent activities.

Semi-supervised learning can also be applied to medical diagnostics, such as symptom detection, disease progression prediction, and medical image analysis for pathology detection. Companies like Zebra Medical Vision use this approach to improve the accuracy of medical diagnoses and develop personalized treatment plans based on large amounts of medical data.

Some of the key applications of semi-supervised learning include text classification, image classification, and anomaly detection. These applications can be seen in various industries, such as speech analysis, internet content classification, and protein sequence classification.

These examples demonstrate the potential of semi-supervised learning to improve the accuracy and efficiency of various applications across different industries.

Best Practices and Preprocessing

To maximize the effectiveness and efficiency of semi-supervised learning approaches, consider the challenges you can face and implement best practices such as ensuring data quality and controlling model complexity.

Ensure data quality by applying the same preprocessing steps to both labeled and unlabeled datasets, and by implementing robust data cleaning and filtering techniques to identify and handle noisy or erroneous data points.

Control model complexity by employing regularization methods such as entropy minimization and consistency regularization to encourage model smoothness and consistency across labeled and unlabeled data, preventing overfitting and improving generalization.
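As a small illustration of entropy minimization, the sketch below computes the average prediction entropy over a batch of unlabeled points. Adding this value (times a weight) to the training loss pushes the model toward confident predictions on unlabeled data. The function names and the example probabilities are assumptions made for the illustration.

```python
import math

def prediction_entropy(probs):
    """Shannon entropy of one predicted class distribution (natural log)."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def entropy_regularizer(batch_probs):
    """Average entropy over a batch of predictions on unlabeled points."""
    return sum(prediction_entropy(p) for p in batch_probs) / len(batch_probs)

confident_batch = [[0.95, 0.05], [0.02, 0.98]]
uncertain_batch = [[0.55, 0.45], [0.50, 0.50]]

# A lower value means the model places unlabeled points further from its decision boundary.
print(entropy_regularizer(confident_batch))
print(entropy_regularizer(uncertain_batch))
```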

Preprocessing

Preprocessing is a crucial step in machine learning that can greatly impact the performance of your model. It involves cleaning and transforming your data to make it more suitable for analysis.

Ensuring data quality is essential, as poor-quality data can lead to model degradation or incorrect conclusions. This means implementing robust data cleaning and filtering techniques to identify and handle noisy or erroneous data points.

Consistency is key when preprocessing data. You should apply the same preprocessing steps to both labeled and unlabeled datasets to maintain data quality and consistency. Separately, data augmentation techniques such as rotation, translation, and noise injection can increase the diversity of the training data and improve generalization.

Unsupervised preprocessing methods can also be used to extract useful features from the unlabelled data, pre-cluster the data, or determine the initial parameters of a supervised learning procedure in an unsupervised manner. These methods can be used with any supervised classifier.

Here are some common unsupervised preprocessing methods:

- Feature extraction: extracting useful features from the unlabelled data

- Cluster-then-label: pre-clustering the data

- Pre-training: determining the initial parameters of a supervised learning procedure
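Of the methods listed above, cluster-then-label is the easiest to sketch in a few lines. The example below clusters labeled and unlabeled points together with a small k-means, then gives every unlabeled point the majority label of the labeled points in its cluster. The deterministic farthest-point initialisation and the names `kmeans` and `cluster_then_label` are assumptions for the illustration.

```python
from collections import Counter

def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans(points, k, iters=20):
    """Tiny k-means; returns one cluster index per point."""
    # Farthest-point initialisation keeps this sketch deterministic.
    centers = [points[0]]
    while len(centers) < k:
        centers.append(max(points, key=lambda p: min(sq_dist(p, c) for c in centers)))
    assign = []
    for _ in range(iters):
        assign = [min(range(k), key=lambda j: sq_dist(p, centers[j])) for p in points]
        for j in range(k):
            members = [p for p, a in zip(points, assign) if a == j]
            if members:
                centers[j] = [sum(col) / len(members) for col in zip(*members)]
    return assign

def cluster_then_label(X_lab, y_lab, X_unlab, k):
    """Label each unlabeled point with the majority label of its cluster."""
    assign = kmeans(list(X_lab) + list(X_unlab), k)
    votes = {}
    for cluster, label in zip(assign, y_lab):
        votes.setdefault(cluster, Counter())[label] += 1
    # Clusters that contain no labeled point stay unlabeled (None).
    return [
        votes[c].most_common(1)[0][0] if c in votes else None
        for c in assign[len(X_lab):]
    ]

pseudo = cluster_then_label(
    X_lab=[[0.0, 0.0], [10.0, 10.0]],
    y_lab=["a", "b"],
    X_unlab=[[0.5, 0.2], [9.5, 10.2], [0.1, 0.9]],
    k=2,
)
print(pseudo)
```

The resulting pseudo-labels can then be passed, together with the original labels, to any supervised classifier.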

By following these best practices and preprocessing techniques, you can ensure that your data is of high quality and suitable for analysis, leading to better model performance and more accurate results.

Choose and Evaluate a Model

Choosing the right model for semi-supervised learning can be a challenge, but selecting algorithms that are well-suited to the task is crucial.

Consider the dataset size and available computational resources when selecting a semi-supervised learning model. This will help ensure that the model can handle the data effectively.

Evaluate model performance using appropriate ML evaluation metrics, computed on a held-out labeled set that the model never saw during training. Unlabeled data cannot be scored directly, because there is no ground truth to compare the predictions against.

Use cross-validation techniques to assess model robustness and generalization across different subsets of the data. In practice this means splitting the labeled data into folds, while the unlabeled data can be reused for training in every fold. This will help you identify any issues with the model's performance.
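One way to set this up, sketched here under the assumption that only labeled points can be scored, is to build folds over the labeled data and hand the full unlabeled pool to training in every fold. The generator name `ssl_kfold` and its index-based interface are hypothetical.

```python
def ssl_kfold(n_labeled, n_unlabeled, k):
    """Yield (train_labeled_idx, train_unlabeled_idx, test_labeled_idx) for each fold."""
    folds = [list(range(i, n_labeled, k)) for i in range(k)]
    unlabeled_idx = list(range(n_unlabeled))
    for i, test_idx in enumerate(folds):
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        # Unlabeled points have no ground truth, so they only ever appear in training.
        yield train_idx, unlabeled_idx, test_idx

for train_lab, train_unlab, test_lab in ssl_kfold(n_labeled=6, n_unlabeled=4, k=3):
    print(train_lab, train_unlab, test_lab)
```

Each labeled point is held out exactly once, so the averaged score reflects performance on data the model did not train on.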

Comparing the model's performance against baseline supervised and unsupervised approaches will also help you understand its strengths and weaknesses.

Monitor Performance

Monitoring your model's performance is crucial for refining and updating it. This can be done by implementing monitoring and tracking mechanisms to assess model performance over time.

As you develop your SSL model, you'll want to keep an eye on its performance to detect any drifts or shifts in the data distribution that may require retraining or adaptation.

Iterative development allows for refining and updating your model based on performance feedback, new labeled data, or changes in the data distribution.

Advanced Techniques and Algorithms

In semi-supervised learning, advanced techniques and algorithms can greatly improve the accuracy of predictions.

Self-training is a popular method that uses unlabeled data to improve a model trained on a small labeled dataset. This is done by training a model on the labeled data, then using the model's most confident predictions on the unlabeled data as additional training labels.

Graph-based methods use the structure of the data to improve the learning process. For example, in the case of image classification, a graph can be created where each image is a node connected to other images that are similar.

Active learning selects the most informative samples from the unlabeled dataset to be labeled by an expert. This approach can significantly reduce the amount of labeled data required for training.
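A common way to decide which samples are "most informative" is uncertainty sampling, sketched below: rank the unlabeled points by the entropy of the model's predicted class probabilities and send the top few to the expert. The choice of entropy as the score and the names `select_queries` and `budget` are assumptions for this example.

```python
import math

def entropy(probs):
    """Shannon entropy of a predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def select_queries(unlabeled_probs, budget):
    """Return indices of the `budget` samples the model is least sure about."""
    ranked = sorted(
        range(len(unlabeled_probs)),
        key=lambda i: -entropy(unlabeled_probs[i]),
    )
    return ranked[:budget]

# Made-up model outputs for four unlabeled samples.
model_outputs = [[0.9, 0.1], [0.5, 0.5], [0.6, 0.4], [0.99, 0.01]]
print(select_queries(model_outputs, budget=2))
```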

Co-training involves training two models on different views of the data, and then using the predictions from one model to improve the performance of the other.

Graph-Based Methods and Inference

Graph-based methods are a type of semisupervised learning where labeled and unlabeled data points are represented as a graph. This graph is used to spread human-made annotations through the whole data network, making it a popular way to run semisupervised learning.

Label propagation is a graph-based transductive method that infers pseudo-labels for unlabeled data points by iteratively adopting the label of the majority of their neighbors based on the labeled data points. It assumes that all classes for the dataset are present in the labeled data, data points that are close have similar labels, and data points in the same cluster will likely have the same label.

The label propagation algorithm creates a fully connected graph where the nodes are all the labeled and unlabeled data points, with edges weighted based on the Euclidean distance between nodes, so that closer nodes receive larger weights. A larger edge weight allows the label to "travel" more easily through the graph.

The algorithm works by assigning soft labels to all nodes based on the distribution of labels, propagating the labels of each node to the other nodes through the edges, and iteratively updating each node's label based on the most common label in its neighborhood. The algorithm stops when every unlabeled node has the majority label of its neighbors or when the defined number of iterations is reached.
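The sketch below shows one way these steps can look in code. It uses the soft-label variant, in which each node holds a probability vector that is repeatedly averaged over its neighbors while the labeled nodes are reset ("clamped") to their known labels after every pass. The Gaussian kernel for edge weights and the names `label_propagation`, `sigma`, and `tol` are assumptions for this example.

```python
import math

def label_propagation(X, labels, n_classes, sigma=1.0, max_iter=100, tol=1e-6):
    """labels[i] is a class index for labeled points and None for unlabeled ones."""
    n = len(X)
    # Fully connected graph: closer points get larger edge weights (Gaussian kernel).
    W = [
        [
            math.exp(-sum((a - b) ** 2 for a, b in zip(X[i], X[j])) / (2 * sigma ** 2))
            if i != j else 0.0
            for j in range(n)
        ]
        for i in range(n)
    ]
    T = [[w / sum(row) for w in row] for row in W]  # row-normalised transition matrix

    def clamp(F):
        """Reset labeled nodes to their known one-hot labels."""
        for i, y in enumerate(labels):
            if y is not None:
                F[i] = [1.0 if c == y else 0.0 for c in range(n_classes)]
        return F

    F = clamp([[1.0 / n_classes] * n_classes for _ in range(n)])
    for _ in range(max_iter):
        F_new = clamp([
            [sum(T[i][j] * F[j][c] for j in range(n)) for c in range(n_classes)]
            for i in range(n)
        ])
        delta = max(abs(a - b) for r_new, r_old in zip(F_new, F) for a, b in zip(r_new, r_old))
        F = F_new
        if delta < tol:
            break  # soft labels have stopped changing
    return [max(range(n_classes), key=lambda c: row[c]) for row in F]

X = [[0.0], [1.0], [2.0], [8.0], [9.0], [10.0]]
labels = [0, None, None, None, None, 1]  # only the two end points are labeled
print(label_propagation(X, labels, n_classes=2))
```

With only two labeled points, the labels spread along the two groups of nearby points, which illustrates the assumption that points in the same cluster share a label.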

Graph construction is the most important aspect of graph-based methods, as the constructed graph must accurately capture local similarities for inference to work. This involves forming edges between nodes (yielding the adjacency matrix) and attaching weights to them (yielding the weight matrix), with the similarity measure governing the connectivity between nodes also used to construct the weight matrix.
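As an illustration of the two steps, the sketch below builds a symmetric k-nearest-neighbor adjacency matrix and then derives the weight matrix from it with a Gaussian similarity. Both choices (k-nearest neighbors and the Gaussian kernel), as well as the names `knn_graph`, `k`, and `sigma`, are assumptions; other similarity measures are equally possible.

```python
import math

def knn_graph(X, k, sigma=1.0):
    """Return (adjacency, weights) for a symmetric k-nearest-neighbour graph."""
    n = len(X)
    dist = [[math.dist(X[i], X[j]) for j in range(n)] for i in range(n)]
    A = [[0] * n for _ in range(n)]
    for i in range(n):
        neighbours = sorted(
            (j for j in range(n) if j != i), key=lambda j: dist[i][j]
        )[:k]
        for j in neighbours:
            A[i][j] = A[j][i] = 1  # keep the edge if either end point selects it
    # Weights: Gaussian similarity on existing edges, zero elsewhere.
    W = [
        [A[i][j] * math.exp(-dist[i][j] ** 2 / (2 * sigma ** 2)) for j in range(n)]
        for i in range(n)
    ]
    return A, W

A, W = knn_graph([[0.0], [1.0], [5.0]], k=1)
print(A)
print(W)
```

Using a sparse neighborhood graph instead of a fully connected one means distant, unrelated points do not influence each other during inference.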

Several approaches have been proposed to mitigate the problem of sensitivity to class imbalance in graph-based methods, including adjusting the classification threshold and altering the influence of labeled samples based on the label proportions. These approaches can be sensitive to noise in the true labels and may not naturally extend beyond binary classification.

Future Perspectives

Semi-supervised learning is a rapidly evolving field, and its applications are vast and varied.

Several areas related to semi-supervised classification, such as semi-supervised regression and semi-supervised clustering, have also been explored.

Semi-supervised regression involves a real-valued label space, which is a key difference from semi-supervised classification.

Semi-supervised clustering is considered the counterpart of semi-supervised classification.

Active learning, which involves querying the user for labels of previously unlabelled data points, is another field that is closely related to semi-supervised learning.

In active learning, the challenge lies in selecting unlabelled data points whose labels would be most informative, as labelling data is generally costly.

Learning from positive and unlabelled data is a special case of semi-supervised learning in which the learner has access to a set of unlabelled data points, but all of the labelled data points belong to a single class.

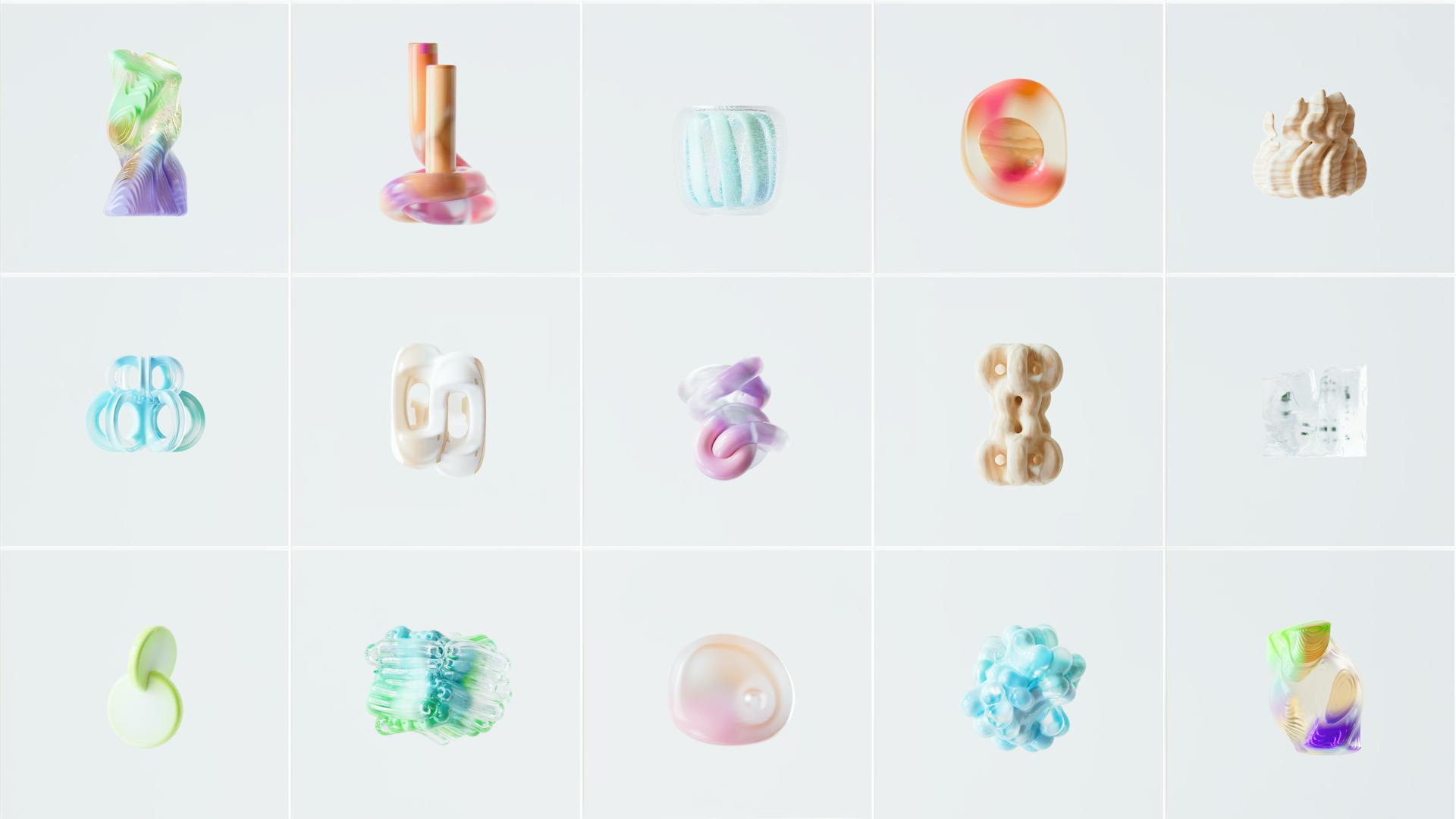

Featured Images: pexels.com