Latent variable models are a powerful tool that can be applied across various disciplines. These models help researchers and analysts uncover underlying patterns and relationships in complex data.

In psychology, latent variable models have been used to study personality traits, such as extraversion and neuroticism. By examining the relationships between different behaviors and characteristics, researchers can gain a deeper understanding of what drives human behavior.

Latent variable models have also been applied in marketing to identify consumer segments and preferences. By analyzing data on purchase behavior and demographics, businesses can tailor their marketing efforts to specific groups of customers.

In genetics, latent variable models have been used to study the genetic basis of complex traits, such as height and intelligence. By examining the relationships between different genetic variants and traits, researchers can identify the underlying genetic mechanisms that influence these complex characteristics.

Latent Variable Models

Latent Variable Models are a powerful tool in statistics and machine learning. They allow us to model complex data by introducing latent variables, which are unobserved variables that help explain the behavior of the observed data.

Latent variables can be thought of as a transformation of the data points into a continuous lower-dimensional space. This can help simplify the data and make it easier to analyze. For example, in a latent variable model, data points x that follow a probability distribution p(x) are mapped into latent variables z that follow a distribution p(z).

In a latent variable model, we have several key components, including the prior distribution p(z), the likelihood p(x|z), the joint distribution p(x,z), the marginal distribution p(x), and the posterior distribution p(z|x). These components work together to describe the behavior of the data and allow us to generate new data points.

Here are the key components of a latent variable model:

- Prior distribution p(z): models the behavior of the latent variables

- Likelihood p(x|z): defines how to map latent variables to the data points

- Joint distribution p(x,z): the product of the likelihood and the prior

- Marginal distribution p(x): the distribution of the original data

- Posterior distribution p(z|x): describes the latent variables that can be produced by a specific data point
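
To make the five components concrete, here is a minimal sketch with a discrete latent variable. The probability tables are hypothetical numbers chosen purely for illustration; they do not come from any dataset.

```python
import numpy as np

# Hypothetical toy model: latent z takes values {0, 1}, observed x takes values {0, 1, 2}.
prior = np.array([0.6, 0.4])                 # p(z)
likelihood = np.array([[0.7, 0.2, 0.1],      # p(x | z=0)
                       [0.1, 0.3, 0.6]])     # p(x | z=1)

# Joint distribution: p(x, z) = p(x | z) * p(z), stored with shape (z, x).
joint = likelihood * prior[:, None]

# Marginal distribution: p(x) = sum over z of p(x, z).
marginal = joint.sum(axis=0)

# Posterior distribution: p(z | x) = p(x, z) / p(x); each column sums to 1.
posterior = joint / marginal
```

Each column of `posterior` answers the question "given that I observed this value of x, how plausible is each value of z?".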

Psychology

Latent variables are created by factor analytic methods and represent shared variance, or the degree to which variables move together. This means that variables with no correlation cannot result in a latent construct based on the common factor model.
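
The link between shared variance and a latent factor can be sketched with a small simulation. This assumes a one-factor model with standardized indicators; the loadings are hypothetical values picked for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 50_000
loadings = np.array([0.9, 0.8, 0.7])   # hypothetical factor loadings

# One common factor plus indicator-specific noise, scaled so each indicator has unit variance.
factor = rng.standard_normal(n)
noise = rng.standard_normal((n, 3)) * np.sqrt(1.0 - loadings**2)
x = factor[:, None] * loadings + noise

# Under this model the correlation between two indicators is the product of their loadings.
observed_corr = np.corrcoef(x, rowvar=False)
implied_corr = np.outer(loadings, loadings)
np.fill_diagonal(implied_corr, 1.0)
```

If all loadings were zero, the indicators would be uncorrelated and there would be no shared variance for a common factor to represent.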

The Big Five personality traits (openness, conscientiousness, extraversion, agreeableness, and neuroticism) have been inferred using factor analysis, which is a statistical method that helps identify underlying patterns in data. Other psychological constructs treated as latent variables include spatial ability and wisdom.

Two of the more predominant means of assessing wisdom include wisdom-related performance and latent variable measures. Wisdom is a complex trait that's still being studied and understood.

Spearman's g, or the general intelligence factor in psychometrics, is another example of a latent variable that's been identified through factor analysis. This concept is widely used in psychology to understand human intelligence.

Here's a brief summary of the types of latent variables we've discussed:

- The Big Five personality traits (for example, extraversion)

- Spatial ability and wisdom

- Spearman's g (general intelligence factor)

Variable Models

Latent variable models aim to model the probability distribution with latent variables, which are a transformation of the data points into a continuous lower-dimensional space. This transformation helps to describe or "explain" the data in a simpler way.

Latent variables are a mathematical representation of the underlying structure of the data, and they can be used to identify patterns and relationships that may not be apparent in the raw data.

In a stricter mathematical form, data points x that follow a probability distribution p(x) are mapped into latent variables z that follow a distribution p(z). The joint distribution p(x,z) is the product of the likelihood and the prior, and it essentially describes our model.

The marginal distribution p(x) is the distribution of the original data, and modeling it is the ultimate goal. The marginal distribution tells us how likely the model is to generate a given data point.

The posterior distribution p(z|x) describes the latent variables that can be produced by a specific data point.
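
Written out, these distributions are tied together as follows. The integral form assumes a continuous latent variable z; for a discrete z it becomes a sum.

$$
p(x, z) = p(x \mid z)\, p(z), \qquad
p(x) = \int p(x \mid z)\, p(z)\, dz, \qquad
p(z \mid x) = \frac{p(x \mid z)\, p(z)}{p(x)}
$$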

Here's a list of different types of latent variable models:

- Linear mixed-effects models and nonlinear mixed-effects models

- Hidden Markov models

- Factor analysis

- Item response theory

These models can be used to identify patterns and relationships in the data, and they can be used to make predictions and inferences about the underlying structure of the data.

Types of Models

Latent variable models come in different forms, but they all aim to model the probability distribution with latent variables. These variables are a transformation of the data points into a continuous lower-dimensional space.

Latent variable models are often discussed alongside the distinction between generative and discriminative modeling. Generative models, such as the one described here, aim to model the probability distribution of the data, including the likelihood of generating a data point from a latent variable. Discriminative approaches, on the other hand, focus on predicting an unobserved quantity directly from the data point.

A key aspect of latent variable models is the concept of generation and inference. Generation refers to the process of computing the data point from the latent variable, while inference is the process of finding the latent variable from the data point. These processes are represented by the likelihood and posterior distributions, respectively.
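
Generation and inference can be sketched side by side in a model where both are tractable. The example below assumes a one-dimensional linear Gaussian model, z ~ N(0, 1) and x | z ~ N(W·z, S²); the constants `W` and `S` are hypothetical.

```python
import numpy as np

W, S = 2.0, 0.5   # hypothetical weight and noise standard deviation

def generate(n, rng):
    """Generation: sample z from the prior, then x from the likelihood p(x | z)."""
    z = rng.standard_normal(n)
    x = W * z + S * rng.standard_normal(n)
    return z, x

def infer(x):
    """Inference: mean and variance of the exact Gaussian posterior p(z | x)."""
    post_var = S**2 / (W**2 + S**2)
    post_mean = W * x / (W**2 + S**2)
    return post_mean, post_var

rng = np.random.default_rng(0)
z, x = generate(10_000, rng)
post_mean, post_var = infer(x)
```

In this special case the posterior has a closed form. For most models of practical interest it does not, which is why approximate inference methods exist.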

Economics

In economics, latent variables like quality of life can't be measured directly, so we use observable variables to infer their values. For example, quality of life is linked to observable variables like wealth, employment, and physical health.

These observable variables help us understand the concept of quality of life, which is a complex and multifaceted idea. Wealth is one of the observable variables used to measure quality of life, as it can indicate access to basic needs and comforts.

Business confidence is another latent variable in economics that can't be measured directly, but it can be inferred from observable variables like stock market performance and economic indicators. This helps economists understand the overall state of the economy.

Morale, happiness, and conservatism are also latent variables in economics that can be inferred from observable variables. For instance, morale can be linked to observable variables like job satisfaction and employee engagement.

Medicine

In medicine, latent-variable methodology is used in many branches of the field. This approach is particularly useful in longitudinal studies where the time scale is not synchronized with the trait being studied.

Longitudinal studies often involve tracking changes over time, but the time scale may not match the trait being studied, making it difficult to analyze. For example, in disease progression modeling, an unobserved time scale that is synchronized with the trait being studied can be modeled as a transformation of the observed time scale using latent variables.

Latent variables can be used to model growth, such as in the study of children's height over time. By using latent variables, researchers can account for the non-linear growth patterns and identify underlying factors that contribute to growth.

Generative Models

Generative models are a type of machine learning model that learn the probability distribution of the data. They aim to learn the probability density function p(x) that describes the behavior of the training data, enabling the generation of novel data by sampling from the distribution.

The goal of generative models is to learn a probability density function p(x) that is identical to the density of the data. They can be categorized into two main classes: explicit density models and implicit density models.

Explicit density models compute the density function p(x) explicitly, allowing them to output the likelihood of a data point after training. Implicit density models, on the other hand, do not compute p(x) explicitly but can sample from the underlying distribution after training.

Explicit density models can be divided further: some compute the density function exactly, while others only approximate it.

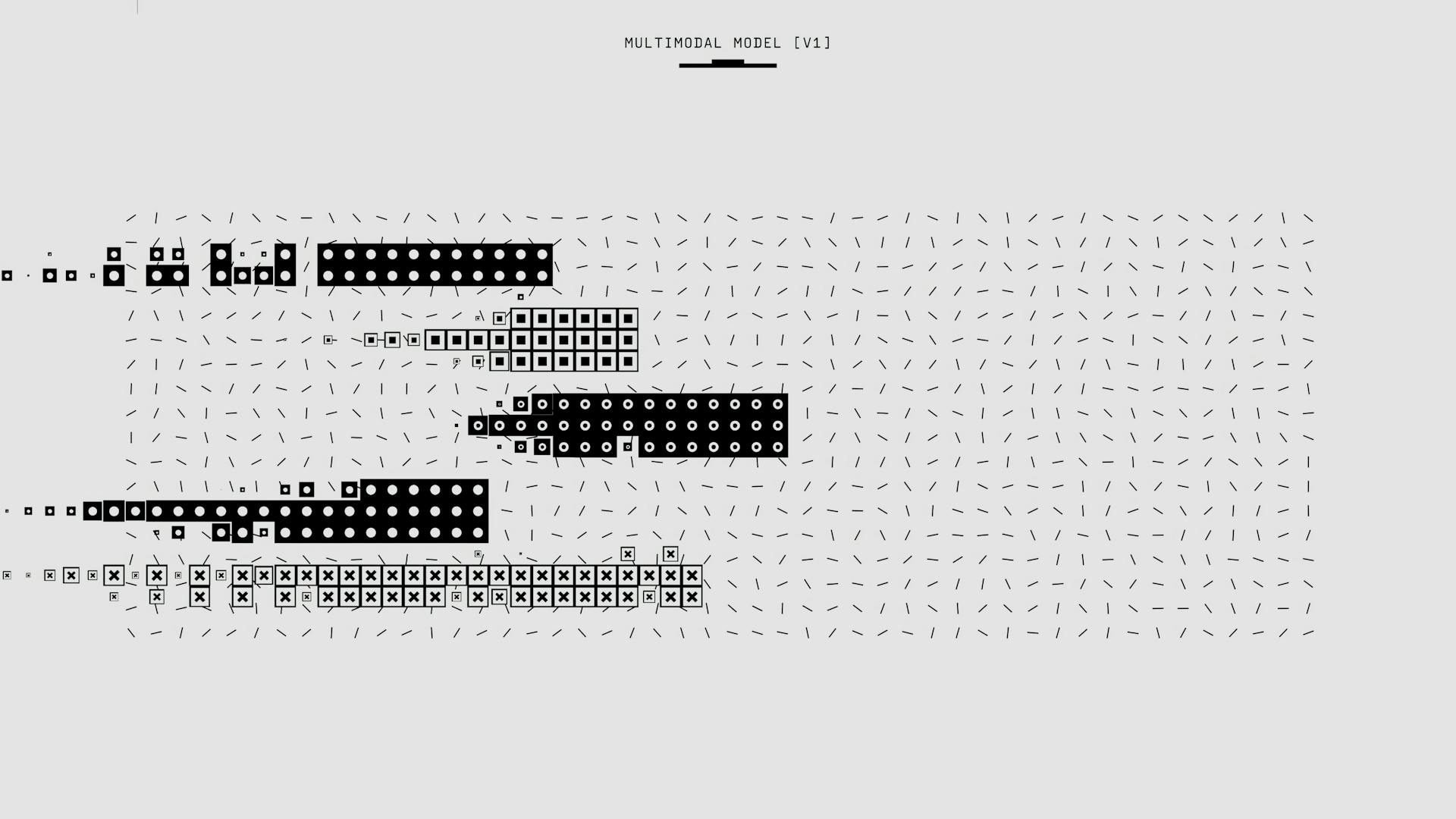

Here's a breakdown of the different types of generative models:

- Explicit density models: compute the density function p(x), either exactly or approximately, and can therefore output the likelihood of a data point after training

- Implicit density models: never compute p(x), but can still sample from the underlying distribution after training

The main difference between the two classes is how they treat the probability density function p(x): explicit models expose it, while implicit models only expose samples.
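
The contrast can be sketched as two interfaces. Both classes below are hypothetical illustrations, not real library APIs: the explicit model offers `log_prob` and `sample`, while the implicit model only offers `sample`.

```python
import numpy as np

class ExplicitGaussian:
    """Explicit density model: fits a 1-D Gaussian and can evaluate log p(x)."""

    def __init__(self, data):
        self.mu = data.mean()
        self.sigma = data.std()

    def log_prob(self, x):
        return -0.5 * np.log(2 * np.pi * self.sigma**2) - (x - self.mu) ** 2 / (2 * self.sigma**2)

    def sample(self, n, rng):
        return self.mu + self.sigma * rng.standard_normal(n)

class ImplicitSampler:
    """Implicit density model: pushes noise through a function and exposes samples only."""

    def __init__(self, shift, scale):
        self.shift = shift
        self.scale = scale

    def sample(self, n, rng):
        noise = rng.standard_normal(n)
        return self.shift + self.scale * np.tanh(noise)   # no log_prob method is provided

rng = np.random.default_rng(0)
data = rng.normal(3.0, 2.0, size=1_000)
explicit = ExplicitGaussian(data)
implicit = ImplicitSampler(shift=3.0, scale=2.0)
```

In this toy case the density of the implicit sampler could still be worked out by hand. The point is only that the model itself never needs it, which is the situation for high-dimensional neural generators.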

Generative models have the potential to generate novel data by sampling from the learned probability distribution. They can be used in a variety of applications, including image and video generation, text generation, and more.

Confirmatory Factor Analysis

Confirmatory Factor Analysis (CFA) is a type of statistical analysis that helps us understand the underlying structure of a set of observed variables. It's a way to test whether a suite of indicator variables are generated by the same underlying process.

In CFA, we use multi-indicator latent variables to test the hypothesis that a set of observable variables are related to the same latent variable. Put differently, we test the idea that the latent variable gives rise to indicators that are correlated with one another.

The goal of CFA is to confirm whether a particular measurement model is a good representation of the underlying reality. This involves testing whether the observed variables are indeed related to the latent variable, and whether the relationships between the variables are consistent with the proposed model.

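One key aspect of CFA is that the analyst specifies in advance which observed variables indicate which latent variables, typically fixing all other loadings to zero. This is in contrast to exploratory factor analysis (EFA), where every observed variable is allowed to load on every factor and the structure is discovered from the data.

Here are some key differences between CFA and exploratory factor analysis:

- CFA: the number of factors and the pattern of loadings are fixed by hypothesis before fitting, and the analysis tests how well that structure fits the data

- EFA: the loading pattern is left unrestricted, and the analysis is used to suggest a structure rather than to test one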

By using CFA, we can gain a better understanding of the underlying structure of our data and make more informed decisions about how to model and analyze it.
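
A CFA measurement model implies a specific covariance matrix for the indicators, which is what gets compared with the observed covariances. The sketch below assumes standardized indicators and uses hypothetical loadings for two factors with two indicators each.

```python
import numpy as np

# Hypothetical loading pattern: rows are indicators, columns are factors.
# Zeros encode the assumption that an indicator does NOT load on that factor.
loadings = np.array([[0.8, 0.0],
                     [0.7, 0.0],
                     [0.0, 0.9],
                     [0.0, 0.6]])
factor_cov = np.array([[1.0, 0.3],
                       [0.3, 1.0]])     # hypothetical factor correlation of 0.3

# Residual variances chosen so that every indicator has unit variance.
residual_var = 1.0 - (loadings**2).sum(axis=1)

# Model-implied covariance: Lambda * Phi * Lambda^T + Theta.
implied_cov = loadings @ factor_cov @ loadings.T + np.diag(residual_var)
```

Indicators of the same factor end up more strongly correlated than indicators of different factors, and that pattern is what a fitted CFA is checked against.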

Table 1. Model Classes

Let's take a look at the different types of models, starting with the ones listed in Table 1. Model Classes.

The Linear Regression model is a type of supervised learning model that uses a linear equation to predict a continuous output.

It's a simple yet powerful model that can be used for a wide range of applications, from predicting house prices to forecasting stock prices.

The Decision Tree model is another type of supervised learning model that uses a tree-like structure to make predictions.

It's a popular choice for classification problems because it's easy to interpret, and some implementations can handle missing data.

The Random Forest model is an ensemble model that combines multiple Decision Trees to improve the accuracy of predictions.

By averaging the predictions of multiple trees, Random Forest can reduce overfitting and improve the overall performance of the model.

The Support Vector Machine (SVM) model is a type of supervised learning model that finds a maximum-margin decision boundary and can use a kernel function to implicitly map the data into a higher-dimensional space.

It's a powerful model that can handle high-dimensional data and is often used for classification and regression tasks.

Inference

Inference is a crucial part of working with latent variable models, as it allows us to make predictions and decisions based on the data.

To infer a latent variable, we need the posterior distribution p(z|x), the conditional distribution of the latent variable given the observed variable. Generation runs in the opposite direction: we first sample z from the prior p(z) and then sample x from the likelihood p(x|z). Computing the posterior is a fundamental problem in latent variable models.

The distributions involved in inference are interconnected due to Bayes' rule, which can make solving the problem quite challenging.

There are several model classes and methods that allow inference in the presence of latent variables, including linear mixed-effects models, Hidden Markov models, and Factor analysis.

Some common analysis and inference methods include Principal component analysis, Instrumented principal component analysis, and EM algorithms.

Markov Chain Monte Carlo methods and Variational Inference are two common approaches to approximate inference when the problem is intractable.
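
As an illustration of the MCMC approach, here is a minimal random-walk Metropolis sketch. It assumes a one-dimensional linear Gaussian model, z ~ N(0, 1) and x | z ~ N(W·z, S²), where the exact posterior is known and can be used as a check. The constants and the step size are hypothetical.

```python
import numpy as np

W, S, X_OBS = 2.0, 0.5, 1.0   # hypothetical weight, noise std, and observed data point

def log_joint(z):
    """log p(x, z) up to a constant; the intractable p(x) is never needed."""
    return -0.5 * z**2 - 0.5 * ((X_OBS - W * z) / S) ** 2

def metropolis(n_steps, step, rng):
    z = 0.0
    samples = np.empty(n_steps)
    for i in range(n_steps):
        proposal = z + step * rng.standard_normal()
        # Accept with probability min(1, p(x, proposal) / p(x, z)).
        if np.log(rng.uniform()) < log_joint(proposal) - log_joint(z):
            z = proposal
        samples[i] = z
    return samples

rng = np.random.default_rng(0)
samples = metropolis(20_000, step=0.5, rng=rng)[2_000:]   # drop burn-in

# Exact posterior moments for this Gaussian model, for comparison.
exact_mean = W * X_OBS / (W**2 + S**2)
exact_var = S**2 / (W**2 + S**2)
```

The sampler only ever evaluates the joint p(x, z), which is why MCMC remains usable when the marginal p(x) cannot be computed.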

Specific Techniques

Latent variable models offer a range of techniques for analysis and inference. Some of these techniques include Principal Component Analysis (PCA), Instrumented PCA, and Partial Least Squares Regression.

These methods can be used to identify patterns and relationships in data, making them useful for understanding complex systems. For example, PCA can be used to reduce the dimensionality of high-dimensional data, while Instrumented PCA extends it by letting the factor loadings depend on additional observed variables, which serve as instruments.
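
A minimal sketch of PCA via the singular value decomposition is shown below. The function name `pca` and the synthetic data (two latent dimensions mixed into five observed ones) are assumptions made for illustration.

```python
import numpy as np

def pca(data, n_components):
    """Project data onto its top principal components using the SVD."""
    centered = data - data.mean(axis=0)
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:n_components]              # principal directions, one per row
    scores = centered @ components.T            # low-dimensional coordinates
    explained_variance = singular_values[:n_components] ** 2 / (len(data) - 1)
    return scores, components, explained_variance

# Synthetic data: 2 latent dimensions linearly mixed into 5 observed ones, plus small noise.
rng = np.random.default_rng(0)
latent = rng.standard_normal((500, 2))
mixing = rng.standard_normal((2, 5))
data = latent @ mixing + 0.05 * rng.standard_normal((500, 5))

scores, components, explained_variance = pca(data, n_components=2)
```

Because the data were generated from two latent dimensions, two components should capture nearly all of the variance.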

Some other techniques worth mentioning are Latent Semantic Analysis (LSA) and Probabilistic LSA, which are used for dimensionality reduction and analysis of text data. These methods can be used to identify underlying themes and relationships in large datasets.

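Here's a brief summary of some of the techniques mentioned:

- Principal Component Analysis (PCA): reduces the dimensionality of high-dimensional data

- Instrumented PCA: a PCA variant that brings in additional observed variables as instruments

- Partial Least Squares Regression: relates predictors to a response through a small number of latent components

- Latent Semantic Analysis (LSA) and Probabilistic LSA: dimensionality reduction and analysis of text data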

These techniques are just a few examples of the many methods available for working with latent variables. By understanding these techniques, you can better apply latent variable models to your own research and projects.

Maximum Likelihood Training

Maximum Likelihood Training is a technique used to estimate the parameters of a probability distribution so that it fits the observed data. It's a well-established method that involves maximizing a likelihood function.

A likelihood function measures the goodness of fit of a statistical model to a sample of data. It's formed from the joint probability distribution of the sample.

For latent variable models, the maximization usually has no closed-form solution, so we use an iterative approach such as gradient descent applied to the negative log-likelihood. Gradient descent is a common optimization algorithm used in machine learning.

To apply gradient descent, we need the gradient of the marginal log-likelihood function. Computing it takes some simple calculus and Bayes' rule.

Bayes' rule gives the posterior distribution p(z∣x), which is needed to compute the gradient. This highlights the importance of inference in maximum likelihood training.
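
One way to see where the posterior enters is the short derivation below. It assumes a continuous latent variable and that the gradient and the integral can be exchanged; the subscript θ marks the dependence on the model parameters.

$$
\nabla_\theta \log p_\theta(x)
= \frac{\nabla_\theta \int p_\theta(x, z)\, dz}{p_\theta(x)}
= \int \frac{p_\theta(x, z)}{p_\theta(x)}\, \nabla_\theta \log p_\theta(x, z)\, dz
= \mathbb{E}_{p_\theta(z \mid x)}\!\left[ \nabla_\theta \log p_\theta(x, z) \right]
$$

The middle step uses ∇θ pθ(x,z) = pθ(x,z) ∇θ log pθ(x,z), and the ratio pθ(x,z) / pθ(x) is exactly the posterior pθ(z∣x) given by Bayes' rule.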

Maximum likelihood estimation is a standard optimization problem that can be solved using gradient descent. Once the problem is solved, we can derive the model parameters θ, which effectively model the desired probability distribution.
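
The sketch below runs gradient ascent on the marginal log-likelihood of a small model where everything is tractable. It assumes z ~ N(0, 1) and x | z ~ N(w·z, S²) with a known noise level `S`, and it computes the gradient as the posterior expectation of the gradient of log p(x, z). The learning rate, starting point, and data are hypothetical.

```python
import numpy as np

S = 0.5   # known noise standard deviation (an assumption of this sketch)

def grad_marginal_loglik(w, x):
    """Average gradient of log p(x) w.r.t. w, via the posterior over z."""
    d = w**2 + S**2
    post_mean = w * x / d          # E[z | x]
    post_var = S**2 / d            # Var[z | x]
    # E over p(z | x) of d/dw log p(x, z) = E[(x - w z) z] / S^2
    return np.mean((x * post_mean - w * (post_mean**2 + post_var)) / S**2)

# Synthetic data generated with a "true" weight of 2.0.
rng = np.random.default_rng(0)
true_w = 2.0
x = true_w * rng.standard_normal(5_000) + S * rng.standard_normal(5_000)

w = 0.5                            # initial guess
for _ in range(2_000):
    w += 0.1 * grad_marginal_loglik(w, x)   # gradient ascent step
```

For this model the maximum likelihood solution is also available in closed form, w² = mean(x²) − S², which makes it a convenient check on the iterative procedure.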

Reparameterization Trick

The reparameterization trick is a technique that makes backpropagation possible through stochastic operations. It works by moving the dependence on the distribution's parameters out of the sampling step and into a deterministic function inside the expectation.

In essence, we want to rewrite the expectation so that the distribution we sample from no longer depends on the variational parameters ϕ. This allows us to take the gradient with respect to ϕ as we would for any other model parameter.

The trick can be formulated as transforming a sample from a fixed, known distribution into a sample from qϕ(z). For a Gaussian distribution with mean μ and standard deviation σ, we can write z = μ + σϵ, where ϵ follows the standard normal distribution N(0,1).

The ϵ term carries all of the randomness and has no trainable parameters, so it is not updated during training. This is the key insight: the stochastic part stays fixed in ϵ, while the mean and the standard deviation are trained by backpropagation.

Here's a step-by-step breakdown of the reparameterization trick:

- Transform a sample from a fixed distribution to a sample from qϕ(z)

- Express z with respect to a fixed ϵ, where ϵ follows the normal distribution N(0,1)

- Keep a fixed part stochastic with epsilon and train the mean and the standard deviation

By using the reparameterization trick, we can compute the gradient and run backpropagation of ELBO with respect to the variational parameters.
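
The effect of the trick can be checked numerically on a case with a known answer. The sketch below estimates the gradient of E[z²] for z ~ N(μ, σ²), whose exact value is μ² + σ², so the true gradients are 2μ and 2σ. The test function f(z) = z² and the parameter values are assumptions chosen for illustration.

```python
import numpy as np

def reparam_grads(mu, sigma, n, rng):
    """Monte Carlo gradients of E[z^2] w.r.t. mu and sigma using z = mu + sigma * eps."""
    eps = rng.standard_normal(n)        # fixed noise source with no parameters
    z = mu + sigma * eps                # sample from N(mu, sigma^2)
    df_dz = 2.0 * z                     # derivative of f(z) = z^2
    grad_mu = np.mean(df_dz * 1.0)      # chain rule: dz/dmu = 1
    grad_sigma = np.mean(df_dz * eps)   # chain rule: dz/dsigma = eps
    return grad_mu, grad_sigma

rng = np.random.default_rng(0)
grad_mu, grad_sigma = reparam_grads(mu=1.5, sigma=0.8, n=200_000, rng=rng)
```

Because the randomness lives entirely in `eps`, the gradient passes through `z` by the ordinary chain rule, which is what automatic differentiation frameworks rely on.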

Variational Autoencoders

Variational Autoencoders are a type of neural network that can learn to compress and reconstruct data in a meaningful way. They consist of two main components: an encoder and a decoder.

The encoder is a neural network that takes in a data point and outputs the mean and variance of the approximate posterior distribution. To sample from this approximate posterior while keeping the network differentiable, we use the reparameterization trick: first sample from a standard normal distribution, then shift and scale the sample using the mean and standard deviation.

The decoder is another neural network that takes in a sample from the approximate posterior and outputs the likelihood of the data point. In the case of Variational Autoencoders, the decoder outputs the mean and variance of the likelihood, which is assumed to be a Gaussian distribution.

The two networks are trained jointly by maximizing the Evidence Lower Bound (ELBO), which is a lower bound on the marginal log-likelihood of the data. The ELBO is composed of two terms: the expected log-likelihood of the data under the decoder (the negative reconstruction error), which measures how well the VAE reconstructs a data point, minus the KL divergence between the approximate posterior and the prior, which penalizes an approximate posterior that strays far from the prior.

Here is a summary of the components of a Variational Autoencoder:

- Encoder: takes in a data point and outputs the mean and variance of the approximate posterior distribution

- Decoder: takes in a sample from the approximate posterior and outputs the likelihood of the data point

- ELBO: a lower bound on the marginal log-likelihood of the data, equal to the negative reconstruction error minus the KL divergence

- Reparameterization trick: allows us to sample from the approximate posterior by first sampling from a standard normal distribution and then transforming it using the mean and variance
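
The pieces above can be put together in a single-sample ELBO estimate. This is a sketch under simplifying assumptions: the prior is a standard normal, the decoder likelihood is Gaussian with unit variance (rather than a learned variance), and the linear `encode` and `decode` functions are hypothetical stand-ins for neural networks.

```python
import numpy as np

def gaussian_kl(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ), one value per data point."""
    return 0.5 * np.sum(np.exp(log_var) + mu**2 - 1.0 - log_var, axis=-1)

def elbo(x, encode, decode, rng):
    """Single-sample ELBO estimate, one value per data point."""
    mu, log_var = encode(x)
    eps = rng.standard_normal(mu.shape)
    z = mu + np.exp(0.5 * log_var) * eps          # reparameterization trick
    x_mean = decode(z)
    # Gaussian log-likelihood with unit variance (assumption of this sketch).
    log_lik = -0.5 * np.sum((x - x_mean) ** 2 + np.log(2 * np.pi), axis=-1)
    return log_lik - gaussian_kl(mu, log_var)

# Hypothetical linear encoder and decoder with random weights.
rng = np.random.default_rng(0)
enc_w = 0.1 * rng.standard_normal((4, 2))
dec_w = 0.1 * rng.standard_normal((2, 4))
encode = lambda x: (x @ enc_w, np.zeros((x.shape[0], 2)))
decode = lambda z: z @ dec_w

x = rng.standard_normal((8, 4))
values = elbo(x, encode, decode, rng)
```

Training would maximize the mean of `values` with respect to the encoder and decoder weights, typically with an automatic differentiation library rather than plain NumPy.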

Lavaan

Lavaan is a powerful tool for latent variable modeling, especially when it comes to single indicator latent variables. It's able to almost exactly reproduce the output from hand-calculated values, with slight deviations due to optimization algorithms.

The operator =~ in lavaan indicates a latent variable, and fixing the error variance in x to the known error variance from repeated trials is a common practice. This allows for more accurate estimates of the parameters.

The standardized loading on xi is 0.895, very close to the value calculated as √(r) = 0.897. Similarly, the loading on η is λy = 1, which is the default in lavaan when the error variance is not supplied.
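
The hand calculation for a single-indicator latent variable can be sketched as follows, assuming the usual convention that the standardized loading is the square root of the reliability r and the error variance is fixed to (1 − r) times the variance of the indicator. The function name and the input values are hypothetical and are not the numbers from the example above.

```python
import math

def single_indicator_parameters(reliability, variance):
    """Loading and fixed error variance for a single-indicator latent variable."""
    loading = math.sqrt(reliability)                   # standardized loading
    error_variance = (1.0 - reliability) * variance    # value to fix in the model
    return loading, error_variance

# Hypothetical inputs: reliability of 0.81 and an indicator variance of 4.0.
loading, error_variance = single_indicator_parameters(reliability=0.81, variance=4.0)
```

The resulting error variance is the kind of value that would be supplied as a fixed parameter when specifying the model.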

Here's a comparison of the estimated parameters in lavaan with hand-calculated values:

In lavaan, latent variables are available only within covariance-based SEM, but the package provides an easier and more robust framework that extends naturally to multi-indicator latent variables.

Frequently Asked Questions

What is the latent variable model of regression?

The latent variable model of regression combines observed variables with underlying, unobservable factors (latent variables) and random error to predict outcomes. This model is widely applicable and can be used to analyze complex relationships in various fields.

What is the latent variable growth model?

The Latent Variable Growth Model (LGM) is a statistical model that tracks an individual's development over time, highlighting unique growth patterns and identifying key factors that influence development. By analyzing individual differences, the LGM helps researchers understand how various variables impact growth and development.
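
The idea of individual growth patterns can be sketched with simulated data. The example assumes a linear growth model in which each person has their own latent intercept and slope; all numbers are hypothetical, and per-person least squares is used here as a simple stand-in for a full latent growth model fit.

```python
import numpy as np

rng = np.random.default_rng(0)
n_people = 2_000
times = np.arange(5.0)                                     # five measurement occasions

# Latent growth factors: each person has an individual intercept and slope.
intercepts = 10.0 + 2.0 * rng.standard_normal(n_people)
slopes = 1.5 + 0.5 * rng.standard_normal(n_people)

# Observed trajectories: latent line plus measurement noise.
y = intercepts[:, None] + slopes[:, None] * times + 0.3 * rng.standard_normal((n_people, 5))

# Recover each person's intercept and slope with ordinary least squares.
design = np.column_stack([np.ones_like(times), times])     # shape (5, 2)
coef, *_ = np.linalg.lstsq(design, y.T, rcond=None)        # shape (2, n_people)
est_intercepts, est_slopes = coef
```

The mean of the estimated slopes describes the average growth rate, while their spread reflects individual differences in development.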
