Normalizing flows are a powerful tool for generative modeling, allowing us to model complex distributions in a way that's both flexible and tractable.

Normalizing flows work by transforming a simple distribution, such as a standard normal distribution, into a more complex one through a series of invertible transformations.

These transformations, known as flow steps, can be composed together to create a complex flow that models a wide range of distributions.

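By leveraging the properties of invertible transformations, normalizing flows can compute the exact log-likelihood of a sample in a single pass. This sets them apart from other generative models such as variational autoencoders, which only optimize a lower bound on the log-likelihood, and generative adversarial networks, which do not provide a likelihood at all.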


Methods

A normalizing flow transforms a random variable drawn from a simple base distribution into one that follows the target distribution, passing it through a sequence of intermediate random variables.

To achieve this, we define a sequence of invertible functions $f_1, \dots, f_K$ with $z_i = f_i(z_{i-1})$ for $i = 1, \dots, K$, where $z_0$ is drawn from the base distribution $p_0$ and $z_K = x$ is the modeled data point. These functions should be easy to invert and have a Jacobian determinant that's easy to compute.

In practice, these functions are modeled using deep neural networks, which are trained to minimize the negative log-likelihood of data samples from the target distribution. The architectures are usually designed so that computing both the inverse and the Jacobian determinant requires only forward passes of the neural networks.

Together, these two properties make the exact log-likelihood of a data point efficient to compute.
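With this notation, applying the change-of-variables formula at each step gives the exact log-likelihood of a data point $x = z_K$. This is the standard result, written in the notation used here:

```latex
\begin{aligned}
z_0 &= f_1^{-1} \circ \cdots \circ f_K^{-1}(x), \qquad z_i = f_i(z_{i-1}), \\
\ln p_\theta(x) &= \ln p_0(z_0) - \sum_{i=1}^{K} \ln \left| \det \frac{\partial f_i(z_{i-1})}{\partial z_{i-1}} \right|
\end{aligned}
```

The sum is cheap to evaluate when each Jacobian determinant is, and $z_0$ is cheap to obtain when each $f_i$ is easy to invert, which is why both requirements matter.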


Training Loop

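To train a normalizing flow model, we minimize the Kullback–Leibler divergence $D_{KL}(p^* \,\|\, p_\theta)$ between the target distribution $p^*$ and the model's distribution $p_\theta$. This can be done by maximizing the model likelihood under observed samples of the target distribution.

The learning objective is to maximize the sum of the log-likelihoods $\sum_j \ln p_\theta(x_j)$ over the observed samples. This is equivalent to minimizing the Kullback–Leibler divergence, because $D_{KL}(p^* \,\|\, p_\theta) = \mathbb{E}_{x \sim p^*}[\ln p^*(x)] - \mathbb{E}_{x \sim p^*}[\ln p_\theta(x)]$: the first term does not depend on $\theta$, and the average of $\ln p_\theta(x_j)$ over the samples is a Monte Carlo estimate of the second.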

The pseudocode for training a normalizing flow model is as follows:

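- INPUT. dataset $x_{1:n}$, normalizing flow model $f_\theta(\cdot)$, base distribution $p_0$

- SOLVE. $\max_\theta \sum_j \ln p_\theta(x_j)$ by gradient ascent (equivalently, gradient descent on the negative log-likelihood)

- RETURN. $\hat{\theta}$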
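The pseudocode above can be made concrete with a deliberately tiny sketch: a one-dimensional flow $x = e^{s} z + b$ with a standard normal base distribution $p_0$, fitted by gradient ascent on the mean log-likelihood with hand-derived gradients. The parameter names `s` and `b`, the learning rate, and the step count are illustrative assumptions, not the MNIST setup; real flows use neural networks and automatic differentiation, but the objective is the same.

```python
import math

def log_likelihood(x, s, b):
    """Exact log p(x) for the 1-D flow x = exp(s) * z + b with z ~ N(0, 1)."""
    z = (x - b) * math.exp(-s)                      # inverse pass: x -> z
    log_p0 = -0.5 * z * z - 0.5 * math.log(2 * math.pi)
    return log_p0 - s                               # log|dz/dx| = -s

def fit_affine_flow(data, lr=0.1, steps=3000):
    """Maximize the mean log-likelihood over (s, b) by gradient ascent."""
    s, b = 0.0, 0.0
    n = len(data)
    for _ in range(steps):
        zs = [(x - b) * math.exp(-s) for x in data]
        # Analytic gradients of the mean log-likelihood w.r.t. b and s.
        grad_b = math.exp(-s) * sum(zs) / n
        grad_s = sum(z * z for z in zs) / n - 1.0
        b += lr * grad_b
        s += lr * grad_s
    return s, b

data = [1.0, 2.0, 3.0, 4.0, 5.0]
s_hat, b_hat = fit_affine_flow(data)
print(b_hat, math.exp(s_hat))  # approaches the sample mean 3 and the population std sqrt(2)
```

For this toy model the maximum-likelihood solution is simply the Gaussian with the sample mean and population standard deviation, which makes it easy to check that the training loop does what the pseudocode says.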

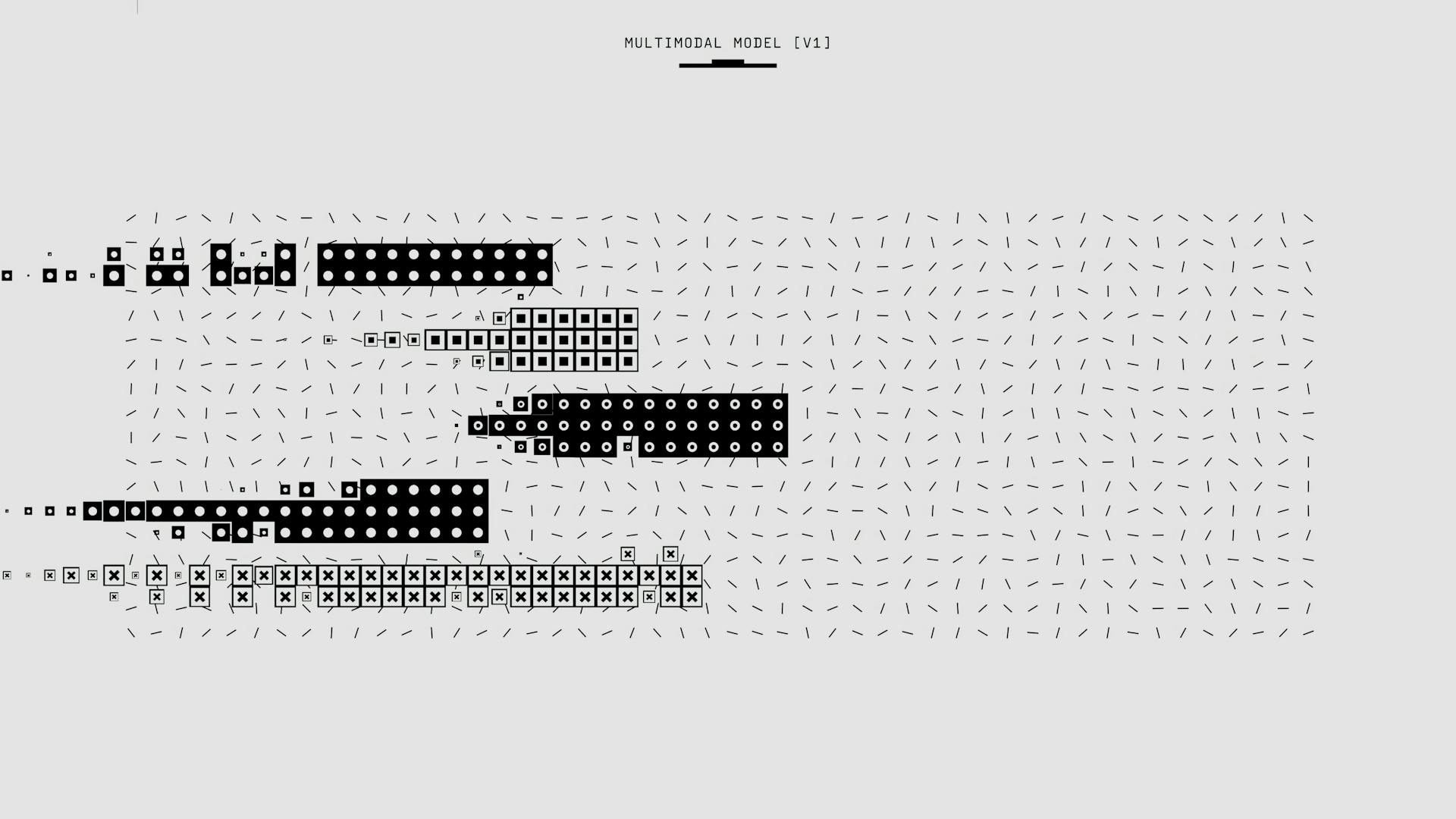

To build the full normalizing flow on MNIST images, we combine coupling layers with dequantization, or alternatively with variational dequantization. The main flow uses 8 coupling layers, and variational dequantization, if applied, uses 4 more.

We use the PyTorch Lightning framework to implement the training loop and reduce code overhead.


Components

A normalizing flow can be broken down into several key components.

At its core, a normalizing flow is a series of transformations applied to a random noise vector, where each transformation is a deterministic, invertible function of the previous output.

Many of these transformations are built from element-wise affine maps, that is, a scaling followed by a shift. The scale and shift can be learnable parameters, or they can be predicted by a neural network, as in the coupling layers described below.

Non-linearities such as tanh are typically used inside those networks. Any non-linearity applied directly to the flowing variable, such as a sigmoid, must itself be invertible so that the whole flow stays invertible.

Some architectures also insert normalization layers between the transformations, such as the activation normalization (ActNorm) layers in Glow, which help to stabilize training.
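To make the composition of flow layers concrete, here is a minimal sketch in plain Python, assuming two hypothetical layer classes: an element-wise affine layer and an element-wise sigmoid. Each layer returns its output together with the log-absolute-determinant of its Jacobian; the flow chains the layers while summing these terms, and inverting the flow means inverting the layers in reverse order. Real models operate on tensors with learned parameters, but the bookkeeping is the same.

```python
import math

class AffineLayer:
    """Hypothetical element-wise affine layer: y = exp(log_scale) * x + shift."""

    def __init__(self, log_scale, shift):
        self.log_scale = log_scale
        self.shift = shift

    def forward(self, x):
        y = [math.exp(ls) * xi + sh for xi, ls, sh in zip(x, self.log_scale, self.shift)]
        return y, sum(self.log_scale)               # log|det J| = sum of log-scales

    def inverse(self, y):
        return [(yi - sh) * math.exp(-ls) for yi, ls, sh in zip(y, self.log_scale, self.shift)]

class SigmoidLayer:
    """Hypothetical element-wise sigmoid layer: an invertible map from R to (0, 1)."""

    def forward(self, x):
        y = [1.0 / (1.0 + math.exp(-xi)) for xi in x]
        # dy/dx = y * (1 - y) for each element; the Jacobian is diagonal.
        return y, sum(math.log(yi) + math.log(1.0 - yi) for yi in y)

    def inverse(self, y):
        return [math.log(yi) - math.log(1.0 - yi) for yi in y]  # logit

def flow_forward(layers, z):
    """Apply the layers in order and accumulate the total log-determinant."""
    total_log_det = 0.0
    for layer in layers:
        z, log_det = layer.forward(z)
        total_log_det += log_det
    return z, total_log_det

def flow_inverse(layers, x):
    """Invert the flow by inverting each layer in reverse order."""
    for layer in reversed(layers):
        x = layer.inverse(x)
    return x

layers = [AffineLayer([0.5, -0.2], [1.0, 0.0]), AffineLayer([0.1, 0.3], [-0.5, 0.2]), SigmoidLayer()]
z = [0.3, -1.2]
x, log_det = flow_forward(layers, z)
z_rec = flow_inverse(layers, x)   # matches z up to floating-point error
```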

Coupling Layers

Coupling layers are a fundamental component of many normalizing flow models. In Real NVP, a coupling layer splits its input into two parts, $z_1$ and $z_2$, passes $z_1$ through unchanged, and transforms $z_2$ with a scale and shift that depend on $z_1$ before the two parts are recombined.

Because $z_1$ is copied unchanged and each element of $z_2$ is transformed on its own given $z_1$, the Jacobian of a coupling layer is triangular, so its determinant is cheap to compute. In Real NVP, it is simply the product of the exponentials of the scale network's outputs, $\exp\left(\sum_j s_\theta(z_1)_j\right)$. Affine coupling layers therefore generally change the volume; only when all scales are zero, as in the additive coupling layers of NICE, is the volume preserved.


Coupling layers can be implemented in various ways, but a common approach is to let neural networks compute the transformation. In Real NVP, the forward pass is $x_1 = z_1$, $x_2 = e^{s_\theta(z_1)} \odot z_2 + m_\theta(z_1)$, where $s_\theta$ and $m_\theta$ are neural networks that take $z_1$ as input and output a scale vector and a shift vector with the same dimension as $z_2$.

The inverse is obtained by undoing these operations in reverse order: $z_1 = x_1$, $z_2 = e^{-s_\theta(x_1)} \odot (x_2 - m_\theta(x_1))$. Since $x_1 = z_1$, the inverse only needs forward passes of $s_\theta$ and $m_\theta$; the networks themselves never have to be inverted.
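A minimal sketch of a Real NVP-style affine coupling layer, with forward pass $x_2 = e^{s_\theta(z_1)} \odot z_2 + m_\theta(z_1)$ and inverse $z_2 = e^{-s_\theta(x_1)} \odot (x_2 - m_\theta(x_1))$. The functions `s_theta` and `m_theta` are hypothetical stand-ins for the neural networks (any functions of $z_1$ work, because they are never inverted), and splitting a 4-dimensional input into two halves of size 2 is an assumption for illustration.

```python
import math

def s_theta(z1):
    """Hypothetical scale network: any function of z1 (tanh keeps the scales bounded)."""
    return [math.tanh(0.5 * sum(z1) + 0.1 * k) for k in range(2)]

def m_theta(z1):
    """Hypothetical shift network: any function of z1."""
    return [z1[0] - z1[-1], 0.3 * sum(v * v for v in z1)]

def coupling_forward(z):
    """x1 = z1, x2 = exp(s(z1)) * z2 + m(z1); returns x and log|det J|."""
    z1, z2 = z[:2], z[2:]
    s, m = s_theta(z1), m_theta(z1)
    x2 = [math.exp(si) * zi + mi for si, zi, mi in zip(s, z2, m)]
    return z1 + x2, sum(s)                          # triangular Jacobian: log-det = sum(s)

def coupling_inverse(x):
    """z1 = x1, z2 = exp(-s(x1)) * (x2 - m(x1)); needs only forward passes of s and m."""
    x1, x2 = x[:2], x[2:]
    s, m = s_theta(x1), m_theta(x1)
    z2 = [math.exp(-si) * (xi - mi) for si, xi, mi in zip(s, x2, m)]
    return x1 + z2

z = [0.4, -1.0, 2.0, 0.5]
x, log_det = coupling_forward(z)
print(coupling_inverse(x))  # recovers z up to floating-point error
```

In a full model, consecutive coupling layers alternate which half is transformed (for example with checkerboard or channel masks), so every variable gets updated.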

Here are some key properties of coupling layers:

- Jacobian: The Jacobian is triangular, and its log-determinant is the sum of the scale network's outputs, $\sum_j s_\theta(z_1)_j$.

- Volume: Affine coupling layers change the volume by a factor of $\exp\left(\sum_j s_\theta(z_1)_j\right)$; additive coupling layers ($s_\theta = 0$) preserve it.

- Inverse: The inverse is computed by undoing the operations in reverse order, which requires only forward passes of the networks.

By using coupling layers, normalizing flow models can learn complex transformations of the input data, which can be useful for a wide range of applications.

Variational Dequantization

Variational Dequantization is a way to improve the dequantization process by using a more sophisticated distribution that can be learned by the model.

This approach replaces the uniform distribution used in traditional dequantization with a learned distribution $q_\theta(u \mid x)$ that has support over $u \in [0,1)^D$.

By using a second normalizing flow, the model can learn a flexible distribution over u that can capture the characteristics of real-world images.

To ensure that the learned distribution has the correct support, a sigmoid activation function can be applied as the final flow transformation.

Variational dequantization can be used as a substitute for traditional dequantization; in the MNIST experiments reported below, it lowers the test score from 1.078 bpd to 1.043 bpd.
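A minimal sketch of the pieces involved, assuming pixels with 256 levels: standard dequantization adds uniform noise $u \in [0,1)$ to each discrete value and rescales, while variational dequantization draws $u$ from $q_\theta(u \mid x)$, here one conditional affine step on Gaussian noise followed by a sigmoid. The helpers `mu_fn` and `log_sigma_fn` are hypothetical placeholders for learned networks, and a single affine step is a simplification of the second normalizing flow described above.

```python
import math
import random

def dequantize(x, u, levels=256):
    """Map a discrete value x in {0, ..., levels-1} and noise u in [0, 1) into [0, 1)."""
    return (x + u) / levels

def sigmoid_with_log_det(a):
    """Final flow step u = sigmoid(a); returns u and log|du/da| = log(u) + log(1 - u)."""
    u = 1.0 / (1.0 + math.exp(-a))
    return u, math.log(u) + math.log(1.0 - u)

def sample_variational_noise(x, rng, mu_fn, log_sigma_fn):
    """Draw u ~ q(u|x) and return (u, log q(u|x)) via the change-of-variables formula."""
    eps = rng.gauss(0.0, 1.0)                       # base noise from N(0, 1)
    log_sigma = log_sigma_fn(x)
    a = mu_fn(x) + math.exp(log_sigma) * eps        # conditional affine step
    u, log_det_sigmoid = sigmoid_with_log_det(a)    # squash into (0, 1)
    log_base = -0.5 * eps * eps - 0.5 * math.log(2 * math.pi)
    return u, log_base - log_sigma - log_det_sigmoid

def mu_fn(x):
    """Hypothetical stand-in for a learned network predicting the mean."""
    return -1.0 if x == 0 else 0.0

def log_sigma_fn(x):
    """Hypothetical stand-in for a learned network predicting the log-scale."""
    return -1.0

rng = random.Random(0)
x = 0
u_uniform = rng.random()                            # standard dequantization noise
u_var, log_q = sample_variational_noise(x, rng, mu_fn, log_sigma_fn)
print(dequantize(x, u_uniform), dequantize(x, u_var), log_q)
```

The returned `log_q` is what a training objective would subtract from the model's log-density of the dequantized image, so that the learned noise distribution is accounted for.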

Multi-Scale Flow

Deep normalizing flows like Glow and Flow++ often apply a split operation directly after squeezing, but with shallow flows, we need to be more thoughtful about where to place the split operation.

Our setup is inspired by the original RealNVP architecture, which is shallower than other state-of-the-art architectures. We apply the first squeeze operation after two coupling layers, but don't apply a split operation yet.

We increase the hidden dimensions for the coupling layers on the squeezed input to counteract the reduced feature maps. The dimensions are often scaled by 2, but we choose the hidden dimensionalities 32, 48, 64 for the three scales respectively to keep the number of parameters reasonable.

Although the multi-scale flow has about three times as many parameters as the single-scale flow (1,711,818 vs. 556,312), it is not necessarily more computationally expensive. We compare the runtimes in the experiments below.

One disadvantage of normalizing flows is that they operate on the exact same dimensions as the input, making them computationally expensive for high-dimensional inputs.

Applications and Analysis

Normalizing flows have been applied to a variety of modeling tasks, including audio generation, image generation, and molecular graph generation.

These models have also been used for point-cloud modeling and video generation, showcasing their versatility in different areas of research.

Normalizing flows have even been applied to lossy image compression and anomaly detection, demonstrating their potential in real-world applications.

Here are some specific examples of applications:

- Audio generation

- Image generation

- Molecular graph generation

- Point-cloud modeling

- Video generation

- Lossy image compression

- Anomaly detection

Applications

Flow-based generative models can be used to create realistic audio and images.

Audio generation is a key application of flow-based generative models, which can generate high-quality audio samples such as music or speech.

Image generation is another important application. Flow-based models can generate high-quality images, such as portraits or landscapes, for uses including art and advertising.



One popular application of normalizing flows is in image modeling, where they can be used to build a normalizing flow that maps an input image to an equally sized latent space. This is particularly useful for tasks such as density estimation and sampling new points.

To perform density estimation, normalizing flows apply a series of flow transformations on the input image and estimate the probability of the input by determining the probability of the transformed point given a prior and the change of volume caused by the transformations.

The standard metric for likelihood-based generative models, including normalizing flows, is bits per dimension (bpd). It describes how many bits we would need to encode a particular example under our modeled distribution; lower bpd scores indicate a more likely example.

For images, the bpd score is computed by converting the negative log-likelihood from nats to bits (dividing by $\ln 2$) and dividing it by the number of dimensions, i.e. the product of height, width, and number of channels. Normalizing by the number of dimensions allows us to compare bpd scores across image resolutions.
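The bpd conversion described above is essentially a one-liner. This sketch assumes the negative log-likelihood is given in nats (natural logarithm) for a single image with shape `(channels, height, width)`; the function name is illustrative.

```python
import math

def bits_per_dim(nll_nats, shape):
    """Convert a negative log-likelihood in nats to bits per dimension.

    shape is (channels, height, width); the number of dimensions is their product.
    """
    num_dims = math.prod(shape)
    return nll_nats / (math.log(2) * num_dims)

# An MNIST-sized image has 1 * 28 * 28 = 784 dimensions.
print(bits_per_dim(784 * math.log(2), (1, 28, 28)))  # 1.0
```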


Normalizing flows can also be used for interpolation in latent space: two images are mapped to their latent codes, the codes are interpolated, and the results are mapped back to image space. This shows how smoothly the learned latent space varies and can be particularly useful for tasks such as image generation and editing.
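The interpolation procedure can be sketched as follows. Because no trained flow is available here, the element-wise `asinh`/`sinh` pair stands in as a hypothetical invertible mapping between data and latent space; with a real model, `to_latent` and `from_latent` would be the flow's inverse and forward passes.

```python
import math

def to_latent(x):
    """Hypothetical stand-in for a flow's inverse pass x -> z (element-wise asinh)."""
    return [math.asinh(xi) for xi in x]

def from_latent(z):
    """Matching forward pass z -> x (element-wise sinh)."""
    return [math.sinh(zi) for zi in z]

def interpolate(x_a, x_b, num_steps=5):
    """Map both inputs to latent space, interpolate linearly there, and map back."""
    z_a, z_b = to_latent(x_a), to_latent(x_b)
    path = []
    for k in range(num_steps):
        t = k / (num_steps - 1)
        z_t = [(1 - t) * za + t * zb for za, zb in zip(z_a, z_b)]
        path.append(from_latent(z_t))
    return path

path = interpolate([0.5, -2.0], [3.0, 1.0])
print(path[2])  # latent-space midpoint mapped back to data space
```

The endpoints reproduce the two inputs, but the midpoint is not the straight-line average in data space: with these stand-ins its first coordinate is about 1.42 rather than 1.75, because the path is straight in latent space.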

In a multi-scale architecture, normalizing flows can be used to split off half of the latent dimensions and directly evaluate them on the prior, reducing the computational cost of learning suitable transformations.

Visualizing Dequantization

Visualizing the dequantization noise is a helpful way to understand how variational dequantization works.

The dequantization distribution in MNIST images shows a strong bias towards 0 (black) and has a sharp border.

To check what noise distribution q(u|x) the flows in the variational dequantization module have learned, we can plot a histogram of output values from the dequantization and variational dequantization module.

The variational dequantization module has indeed learned a much smoother distribution with a Gaussian-like curve, which can be modeled much better.

Visualizing the distribution q(u|x) on a deeper level would require considering the interaction and dependence of all 784 dimensions, which can be quite complex.

Latent Visualization in Multi-Scale

Latent visualization in multi-scale flows can reveal how the flow distributes information between the early split variables and the final variables.

To examine this, consider 8 images that share the same final latent variables but differ in the early split variables. These images show that the early split variables have a smaller effect on the image, although small differences can still be spotted, especially at the borders of the digits.

In particular, the top part of the 3 has different thicknesses for different samples, although all of them represent the same coarse structure. This shows that the flow indeed learns to separate the higher-level information in the final variables, while the early split ones contain local noise patterns.


The multi-scale architecture is a common approach to improve the performance of normalizing flows on high-dimensional inputs. By splitting off half of the latent dimensions after the first N flow transformations, the model can reduce the computational cost and improve the efficiency of the flow.

In the context of image processing, the multi-scale architecture is particularly useful because many pixels contain less information, and removing them would not affect the semantic information of the image. This intuition is the basis for the multi-scale architecture used in deep normalizing flows.

Density Modeling and Sampling

The simple model reaches 1.080 bpd on the validation set and 1.078 bpd on the test set, with an inference time of 20 ms, a sampling time of 18 ms, and 556,312 parameters.

Using variational dequantization improves upon standard dequantization in terms of bits per dimension, reaching 1.045 bpd on the validation set and 1.043 bpd on the test set, but it takes longer to evaluate the probability of an image.

The multi-scale architecture reaches 1.022 bpd on the validation set and 1.020 bpd on the test set, with an inference time of 23 ms, a sampling time of 15 ms, and 1,711,818 parameters.

The sampling quality of the models shows a clear difference between the simple and the multi-scale model, with the multi-scale model able to learn full, global relations that form digits.


Architecture and Variants

Training flow models can be computationally expensive, so it's recommended to rely on pre-trained models for a first run through the notebook.

Pre-trained models come with validation and test performance, as well as run-time information, which can save you time and effort.

These pre-trained models can be used as a starting point for your own analysis, allowing you to focus on fine-tuning and customizing the models to your specific needs.

Training Flow Variants


By using pre-trained models, you can focus on understanding the underlying concepts and techniques, rather than getting bogged down in the details of training a model from scratch.


If you do decide to train your own flow models, training follows the approach described in the Training Loop section: maximize the log-likelihood of the training data, which is equivalent to minimizing the Kullback–Leibler divergence between the target distribution and the model's distribution.


Multi-Scale Architecture

Deep normalizing flows operate on the same dimensions as the input, which can be a disadvantage, especially when dealing with high-dimensional data like images.

The number of dimensions that later flow transformations operate on can be reduced with a multi-scale architecture, which splits off half of the latent dimensions after the first N flow transformations.

This is because many pixels in an image contain less information, and removing them wouldn't affect the image's semantic meaning.

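The multi-scale architecture involves two operations: Squeeze, which moves each 2×2 block of pixels into the channel dimension (turning a $C \times H \times W$ input into $4C \times H/2 \times W/2$), and Split, which factors out half of the channels.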

In the image domain, deep normalizing flows often apply a multi-scale architecture to reduce computational cost.

By splitting off half of the latent dimensions after the first N flow transformations, we can evaluate them directly on the prior, reducing the computational cost.

The remaining half is run through N more flow transformations, and depending on the size of the input, we split it again in half or stop overall at this position.
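A minimal sketch of both operations on a single image stored as nested lists of shape (C, H, W). The channel ordering inside `squeeze` is one common choice and an assumption here; any fixed permutation works, because squeezing only rearranges values and therefore contributes a log-determinant of zero. `split` returns the half that keeps flowing, together with the standard normal prior log-density of the half that is factored out. In a real model, coupling layers would run between these calls.

```python
import math

def squeeze(x):
    """(C, H, W) -> (4C, H/2, W/2): each 2x2 patch moves into four channels."""
    C, H, W = len(x), len(x[0]), len(x[0][0])
    out = [[[0.0] * (W // 2) for _ in range(H // 2)] for _ in range(4 * C)]
    for c in range(C):
        for i in range(H):
            for j in range(W):
                new_c = 4 * c + 2 * (i % 2) + (j % 2)
                out[new_c][i // 2][j // 2] = x[c][i][j]
    return out

def unsqueeze(y):
    """Inverse of squeeze: (4C, H/2, W/2) -> (C, H, W)."""
    C4, H2, W2 = len(y), len(y[0]), len(y[0][0])
    x = [[[0.0] * (2 * W2) for _ in range(2 * H2)] for _ in range(C4 // 4)]
    for new_c in range(C4):
        c, offset = divmod(new_c, 4)
        di, dj = divmod(offset, 2)
        for i in range(H2):
            for j in range(W2):
                x[c][2 * i + di][2 * j + dj] = y[new_c][i][j]
    return x

def split(z):
    """Factor out the first half of the channels and score it under N(0, 1)."""
    half = len(z) // 2
    z_out, z_keep = z[:half], z[half:]
    log_prior = sum(-0.5 * v * v - 0.5 * math.log(2 * math.pi)
                    for ch in z_out for row in ch for v in row)
    return z_keep, log_prior

x = [[[0.0] * 28 for _ in range(28)]]   # one 28x28 MNIST-sized channel
h1 = squeeze(x)                          # 4 x 14 x 14
h2, log_p = split(h1)                    # 2 x 14 x 14 keep flowing
h3 = squeeze(h2)                         # 8 x 7 x 7
print(len(h3), len(h3[0]), len(h3[0][0]))
```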

For the MNIST dataset, we apply the first squeeze operation after two coupling layers, but don't apply a split operation yet.

This is because we have only used two coupling layers, and each variable has been only transformed once, making a split operation too early.

We apply two more coupling layers before finally applying a split flow and squeeze again, with the last four coupling layers operating on a scale of 7x7x8.

The hidden dimensions for the coupling layers on the squeezed input are often scaled by 2. This increases the computation cost per spatial position by roughly a factor of 4, which cancels the 4-fold reduction in spatial positions caused by the squeezing operation.

However, we choose the hidden dimensionalities 32, 48, and 64 for the three scales respectively to keep the number of parameters reasonable and show the efficiency of multi-scale architectures.
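The cost argument can be checked with a few lines of arithmetic. This sketch assumes that the cost of a hidden-to-hidden convolution in a coupling network is proportional to the number of spatial positions times the square of the hidden width, ignoring kernel size and the input and output layers; the spatial sizes 28×28, 14×14 and 7×7 are the MNIST scales produced by the squeeze operations described above.

```python
def relative_conv_cost(height, width, hidden):
    """Cost of a hidden-to-hidden convolution, up to a constant: positions * hidden^2."""
    return height * width * hidden * hidden

scales = [(28, 28, 32), (14, 14, 48), (7, 7, 64)]  # spatial size and hidden width per scale
costs = [relative_conv_cost(h, w, c) for h, w, c in scales]
print([c / costs[0] for c in costs])                 # [1.0, 0.5625, 0.25]
print(relative_conv_cost(14, 14, 64) == costs[0])    # doubling the width would cancel the squeeze
```

Under this assumption, doubling the width after the first squeeze would keep the cost per layer constant, while the chosen widths 48 and 64 make the later scales cheaper than the first.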

Challenges and Solutions

Normalizing flows can be tricky to implement, especially when dealing with complex distributions. One challenge is that they can require a large number of samples to converge.

One suggested remedy, referred to here as annealing, is to gradually increase the number of samples over time.

This can be done with a schedule that starts with a small number of samples and gradually increases it. For example, a schedule might start with 100 samples and increase by 100 every 10 iterations.
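The example schedule translates directly into a small function; the function name and default arguments are illustrative, taken from the numbers in the example.

```python
def num_samples(iteration, start=100, increment=100, every=10):
    """Sample-count schedule: begin at `start` and add `increment` every `every` iterations."""
    return start + increment * (iteration // every)

print([num_samples(t) for t in (0, 9, 10, 25)])  # [100, 100, 200, 300]
```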

Another challenge is that normalizing flows can be computationally expensive, especially for high-dimensional data, because every flow transformation operates on the full dimensionality of the input. The multi-scale architecture described above is one way to reduce this cost.

Normalization layers address a different problem, namely training stability. Layer normalization inside the networks of a coupling layer, or invertible normalization layers placed in the flow itself, such as ActNorm in Glow, keep activations in a reasonable range.

This reduces the risk of vanishing or exploding gradients, which can occur when the activations are too large or too small. Normalization does not make each layer cheaper to compute, but more stable training makes normalizing flows easier to use in practice.
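As an illustration of an invertible normalization layer, here is a minimal sketch of activation normalization (ActNorm) in the spirit of Glow, on plain lists of feature vectors rather than image channels. The data-dependent initialization on a first batch follows the Glow idea; the class layout, the `eps` value, and the per-sample interface are assumptions of this sketch. After initialization, `bias` and `log_std` would normally be trained like any other parameter.

```python
import math

class ActNorm:
    """Per-feature affine layer y = (x - bias) * exp(-log_std), initialized from data."""

    def __init__(self):
        self.bias = None
        self.log_std = None

    def initialize(self, batch, eps=1e-6):
        """Choose bias and scale so the first batch has zero mean and unit variance per feature."""
        n, d = len(batch), len(batch[0])
        self.bias = [sum(row[k] for row in batch) / n for k in range(d)]
        self.log_std = [
            0.5 * math.log(sum((row[k] - self.bias[k]) ** 2 for row in batch) / n + eps)
            for k in range(d)
        ]

    def forward(self, x):
        """Normalize one sample; the Jacobian is diagonal, so log|det J| = -sum(log_std)."""
        y = [(xi - b) * math.exp(-ls) for xi, b, ls in zip(x, self.bias, self.log_std)]
        return y, -sum(self.log_std)

    def inverse(self, y):
        """Undo the normalization exactly."""
        return [yi * math.exp(ls) + b for yi, b, ls in zip(y, self.bias, self.log_std)]

layer = ActNorm()
layer.initialize([[1.0, 10.0], [3.0, 14.0], [5.0, 18.0]])
y, log_det = layer.forward([2.0, 12.0])
print(y, log_det)
```

Unlike batch normalization at training time, the layer is a fixed affine map once initialized, so its inverse and log-determinant are exact for every sample.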


Frequently Asked Questions

What is a normalizing flow?

Normalizing flows are a powerful machine learning technique for modeling complex distributions and generating new samples from them. They're a key tool in generative modeling tasks, where creating realistic new data is crucial.

What is the difference between diffusion and normalizing flows?

Diffusion models have a fixed forward process and a trainable backward process, both of which are stochastic. Normalizing flows, in contrast, are deterministic: the backward process is the exact inverse of the forward process, so both are defined by the same learned function.

What is the equation for normalizing flow?

A normalizing flow model uses the equation $X = f_\theta(Z)$ to map a random variable $Z$ to a target variable $X$, where $f_\theta$ is a deterministic and invertible function. Because $f_\theta$ is invertible, the density of $X$ follows from the change-of-variables formula: $p_X(x) = p_Z(f_\theta^{-1}(x)) \left| \det \frac{\partial f_\theta^{-1}(x)}{\partial x} \right|$.

What are the disadvantages of normalizing flows?

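Normalizing flows can be unstable to train and difficult to interpret, which makes them a less straightforward choice for complex distributions and probabilistic modeling. They also operate on the same dimensionality as the input, which makes them computationally expensive for high-dimensional data.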
