Normalizing flows are a type of generative model that can be used to model complex data distributions. They work by transforming a simple distribution, such as a standard normal distribution, into the target distribution using a series of invertible transformations.

This process can be visualized to better understand how the model is working. For example, when a normalizing flow models a distribution of images, each transformation in the flow corresponds to a different layer in a neural network, so looking at the samples after each layer shows how the simple base distribution is gradually reshaped into the data distribution.

By visualizing the transformations in a normalizing flow, we can gain insights into the structure of the data and how the model is capturing it. This can be especially useful for understanding the behavior of the model on complex data.

Visualizing normalizing flows can also help to identify issues with the model, such as mode collapse or poor convergence.

What are Normalizing Flows?

Normalizing flows are a type of probabilistic model that can be used for density estimation and generative modeling. They work by learning a sequence of invertible transformations that map a simple distribution to the complex data distribution.
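
The idea of mapping a simple distribution through an invertible transformation can be checked numerically. The sketch below uses PyTorch's `torch.distributions` module (our choice; this section does not name a library) to push a standard normal through the invertible map x = exp(z). It then compares the library's density with the change-of-variables formula computed by hand. The resulting distribution is the log-normal.

```python
import torch
from torch.distributions import LogNormal, Normal, TransformedDistribution
from torch.distributions.transforms import ExpTransform

# Simple base distribution: a standard normal
base = Normal(torch.tensor(0.0), torch.tensor(1.0))

# Push it through one invertible transformation, x = exp(z)
flow_dist = TransformedDistribution(base, [ExpTransform()])

x = torch.tensor([0.5, 1.0, 2.0])

# Change of variables by hand: z = log(x) and |dz/dx| = 1/x
z = torch.log(x)
manual_log_prob = base.log_prob(z) - torch.log(x)

print(flow_dist.log_prob(x))
print(manual_log_prob)
print(LogNormal(0.0, 1.0).log_prob(x))  # same values: the flow is a log-normal
```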

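A common choice for these invertible transformations is the affine coupling layer, used in models such as RealNVP and Glow. A coupling layer splits the input into two parts, passes one part through unchanged, and scales and shifts the other part using values computed from the first. This keeps the layer easy to invert and its Jacobian determinant cheap to compute, while stacking several coupling layers (swapping the roles of the two parts between layers) lets the model learn complex relationships between the dimensions of the data.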
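
A minimal sketch of a RealNVP-style affine coupling layer in PyTorch follows. The class name `AffineCoupling`, the small ReLU network that produces the scale and shift, and the `tanh` that keeps the log-scales bounded are illustrative assumptions, not a specific library's API. It shows the three pieces that matter: the forward pass, an exact inverse, and a log-determinant that is just the sum of the log-scales because the Jacobian is triangular.

```python
import torch
import torch.nn as nn


class AffineCoupling(nn.Module):
    """Affine coupling layer (a minimal sketch).

    The first half of the features passes through unchanged and is used
    to compute a scale and shift for the second half.
    """

    def __init__(self, dim, hidden=64):
        super().__init__()
        self.d = dim // 2
        self.net = nn.Sequential(
            nn.Linear(self.d, hidden), nn.ReLU(),
            nn.Linear(hidden, 2 * (dim - self.d)),
        )

    def _scale_shift(self, x1):
        log_s, t = self.net(x1).chunk(2, dim=1)
        return torch.tanh(log_s), t  # bounded log-scales for stability

    def forward(self, x):
        x1, x2 = x[:, :self.d], x[:, self.d:]
        log_s, t = self._scale_shift(x1)
        y2 = x2 * torch.exp(log_s) + t
        log_det = log_s.sum(dim=1)  # triangular Jacobian: sum of log-scales
        return torch.cat([x1, y2], dim=1), log_det

    def inverse(self, y):
        y1, y2 = y[:, :self.d], y[:, self.d:]
        log_s, t = self._scale_shift(y1)  # y1 == x1, so same scale and shift
        x2 = (y2 - t) * torch.exp(-log_s)
        return torch.cat([y1, x2], dim=1)


layer = AffineCoupling(dim=4)
x = torch.randn(8, 4)
y, log_det = layer(x)
x_rec = layer.inverse(y)
print(torch.allclose(x, x_rec, atol=1e-5))  # True: the layer is exactly invertible
```

Because the first half is copied unchanged, practical models alternate which half is transformed (or permute the features) between layers so that every dimension eventually gets updated.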

Normalizing flows are invertible, meaning that the transformation can be easily reversed to obtain the original input data. This is a key property that makes them useful for tasks like density estimation and generative modeling.

They are also differentiable, which means that the model can be trained end-to-end using backpropagation. This makes them well-suited for tasks that require the ability to learn complex patterns in the data.
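
Because every operation is differentiable, a flow can be fitted by maximizing the exact log-likelihood with ordinary backpropagation. The sketch below assumes toy 1-D Gaussian data and a single learnable affine layer built from `torch.distributions`. Both are illustrative choices; the same loop works for deeper flows.

```python
import torch
from torch.distributions import Normal, TransformedDistribution
from torch.distributions.transforms import AffineTransform

torch.manual_seed(0)
# Toy 1-D data drawn from N(3, 0.5^2)
data = 3.0 + 0.5 * torch.randn(2000)

# Learnable flow parameters: x = loc + exp(log_scale) * z
loc = torch.zeros(1, requires_grad=True)
log_scale = torch.zeros(1, requires_grad=True)
base = Normal(torch.zeros(1), torch.ones(1))
optimizer = torch.optim.Adam([loc, log_scale], lr=0.05)

for step in range(1000):
    flow = TransformedDistribution(base, [AffineTransform(loc, log_scale.exp())])
    loss = -flow.log_prob(data.unsqueeze(1)).mean()  # negative log-likelihood
    optimizer.zero_grad()
    loss.backward()  # gradients flow through the base log-density and the log-det term
    optimizer.step()

print(loc.item(), log_scale.exp().item())  # close to the data mean and standard deviation
```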

Types of Normalizing Flows

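Many kinds of normalizing flows exist; the coupling layers described above are one family. Two of the simplest are planar flows and radial flows. Planar flows contract and expand the density in the direction perpendicular to a hyperplane, while radial flows contract and expand it around a reference point.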

Planar flows can be thought of as "squishing" and "stretching" the data along one direction at a time. They are invertible only if their parameters are suitably constrained. With the constraint in place, once we apply a planar flow to a distribution, we can always reverse the process to get back to the original distribution.
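
A planar flow has the form f(z) = z + u·tanh(wᵀz + b). With the tanh non-linearity it is invertible when wᵀu ≥ −1, and a standard trick is to reparameterize u so that this always holds. The sketch below (the class name `PlanarFlow` and the initialization scale are our own choices) returns both the transformed samples and the log-determinant log|1 + uᵀψ(z)|, where ψ(z) = tanh′(wᵀz + b)·w.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class PlanarFlow(nn.Module):
    """Planar flow f(z) = z + u_hat * tanh(w^T z + b), a minimal sketch."""

    def __init__(self, dim):
        super().__init__()
        self.u = nn.Parameter(0.1 * torch.randn(dim))
        self.w = nn.Parameter(0.1 * torch.randn(dim))
        self.b = nn.Parameter(torch.zeros(1))

    def u_hat(self):
        # Reparameterize u so that w^T u_hat = -1 + softplus(w^T u) > -1,
        # which keeps the tanh planar flow invertible.
        wu = self.w @ self.u
        return self.u + (F.softplus(wu) - 1 - wu) * self.w / (self.w @ self.w)

    def forward(self, z):
        u_hat = self.u_hat()
        h = torch.tanh(z @ self.w + self.b)          # shape (batch,)
        f_z = z + u_hat * h.unsqueeze(1)             # shape (batch, dim)
        psi = (1 - h ** 2).unsqueeze(1) * self.w     # tanh'(w^T z + b) * w
        log_det = torch.log(torch.abs(1 + psi @ u_hat))
        return f_z, log_det


torch.manual_seed(0)
flow = PlanarFlow(dim=2)
z = torch.randn(5, 2)
f_z, log_det = flow(z)
print(f_z.shape, log_det.shape)  # torch.Size([5, 2]) torch.Size([5])
```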

Radial flows, on the other hand, are like "balloons" that expand and contract around a central point. They also require correct parameter constraints to be invertible.
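
A radial flow moves points towards or away from a reference point c: f(z) = z + β·h(α, r)·(z − c), with r = ‖z − c‖ and h(α, r) = 1/(α + r). It is invertible for α > 0 and β ≥ −α. The minimal sketch below enforces both constraints with softplus. The class and parameter names are illustrative, and the log-determinant uses the closed form (d − 1)·log(1 + βh) + log(1 + βh + βh′r), where d is the dimension.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class RadialFlow(nn.Module):
    """Radial flow f(z) = z + beta * h(alpha, r) * (z - center), a minimal sketch."""

    def __init__(self, dim):
        super().__init__()
        self.dim = dim
        self.center = nn.Parameter(0.1 * torch.randn(dim))  # reference point c
        self.alpha_raw = nn.Parameter(torch.zeros(1))
        self.beta_raw = nn.Parameter(torch.zeros(1))         # with these zeros, f starts as the identity

    def forward(self, z):
        alpha = F.softplus(self.alpha_raw)                   # alpha > 0
        beta = -alpha + F.softplus(self.beta_raw)            # beta > -alpha keeps f invertible
        diff = z - self.center
        r = diff.norm(dim=1, keepdim=True)                   # distance to the reference point
        h = 1 / (alpha + r)
        f_z = z + beta * h * diff
        h_prime = -1 / (alpha + r) ** 2
        log_det = ((self.dim - 1) * torch.log(1 + beta * h)
                   + torch.log(1 + beta * h + beta * h_prime * r))
        return f_z, log_det.squeeze(1)


torch.manual_seed(0)
flow = RadialFlow(dim=2)
z = torch.randn(5, 2)
f_z, log_det = flow(z)
print(f_z.shape, log_det.shape)  # torch.Size([5, 2]) torch.Size([5])
```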

Both types of flows can be stacked together to create more complex transformations of the data. This is useful when we want to model complex distributions that can't be captured by a single flow.

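For example, planar and radial layers can be alternated in a single flow. Because the overall map is a composition, its log-determinant is simply the sum of the log-determinants of the individual layers.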
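
As a sketch of such a combination, the code below alternates compact planar and radial layers (re-implemented here so the block runs on its own; class names are illustrative) and accumulates the log-determinant of the whole stack as the sum over layers.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Planar(nn.Module):
    """Compact planar layer: f(z) = z + u_hat * tanh(w^T z + b)."""

    def __init__(self, dim):
        super().__init__()
        self.u = nn.Parameter(0.1 * torch.randn(dim))
        self.w = nn.Parameter(0.1 * torch.randn(dim))
        self.b = nn.Parameter(torch.zeros(1))

    def forward(self, z):
        wu = self.w @ self.u
        u_hat = self.u + (F.softplus(wu) - 1 - wu) * self.w / (self.w @ self.w)  # w^T u_hat > -1
        h = torch.tanh(z @ self.w + self.b)
        log_det = torch.log(torch.abs(1 + (1 - h ** 2) * (self.w @ u_hat)))
        return z + u_hat * h.unsqueeze(1), log_det


class Radial(nn.Module):
    """Compact radial layer: f(z) = z + beta / (alpha + r) * (z - center)."""

    def __init__(self, dim):
        super().__init__()
        self.center = nn.Parameter(0.1 * torch.randn(dim))
        self.alpha_raw = nn.Parameter(torch.randn(1))
        self.beta_raw = nn.Parameter(torch.randn(1))

    def forward(self, z):
        alpha = F.softplus(self.alpha_raw)
        beta = -alpha + F.softplus(self.beta_raw)  # beta > -alpha
        diff = z - self.center
        r = diff.norm(dim=1)
        bh = beta / (alpha + r)
        log_det = ((z.shape[1] - 1) * torch.log(1 + bh)
                   + torch.log(1 + bh - beta * r / (alpha + r) ** 2))
        return z + bh.unsqueeze(1) * diff, log_det


class StackedFlow(nn.Module):
    """Applies layers in order; the log-determinants of composed maps add up."""

    def __init__(self, layers):
        super().__init__()
        self.layers = nn.ModuleList(layers)

    def forward(self, z):
        total_log_det = torch.zeros(z.shape[0])
        for layer in self.layers:
            z, log_det = layer(z)
            total_log_det = total_log_det + log_det
        return z, total_log_det


torch.manual_seed(0)
flow = StackedFlow([Planar(2), Radial(2), Planar(2), Radial(2)])
z0 = torch.randn(1000, 2)
zk, sum_log_det = flow(z0)
```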

These different types of flows can be used to create a wide range of transformations, each with its own strengths and weaknesses. By combining them in different ways, we can create powerful models for visualizing and understanding complex data distributions.

Implementing Normalizing Flows

Normalizing flows can be implemented using a variety of architectures, but one popular choice is the Glow model, which can be trained on datasets like MNIST.

One common way to use a normalizing flow, for example in variational inference on MNIST, is inside a VAE-like model built from three modules: an encoder, a flow-model, and a decoder. The encoder takes in an input x and outputs the mean and log-std of the first variable in the flow of random variables, similar to a VAE encoder.

The flow-model is a stack of flow layers that transforms samples from the first distribution to the complex distribution. It uses the reparameterization trick to move stochasticity to samples from a standard Gaussian distribution, allowing the gradient to be passed to the encoder network.

The decoder takes in the latent variable Zₖ and models P(x|zₖ), similar to a VAE decoder. It can be implemented as a fully connected network, and its parameters are the only parameters of the generative model itself; the encoder and flow parameters belong to the approximate posterior.

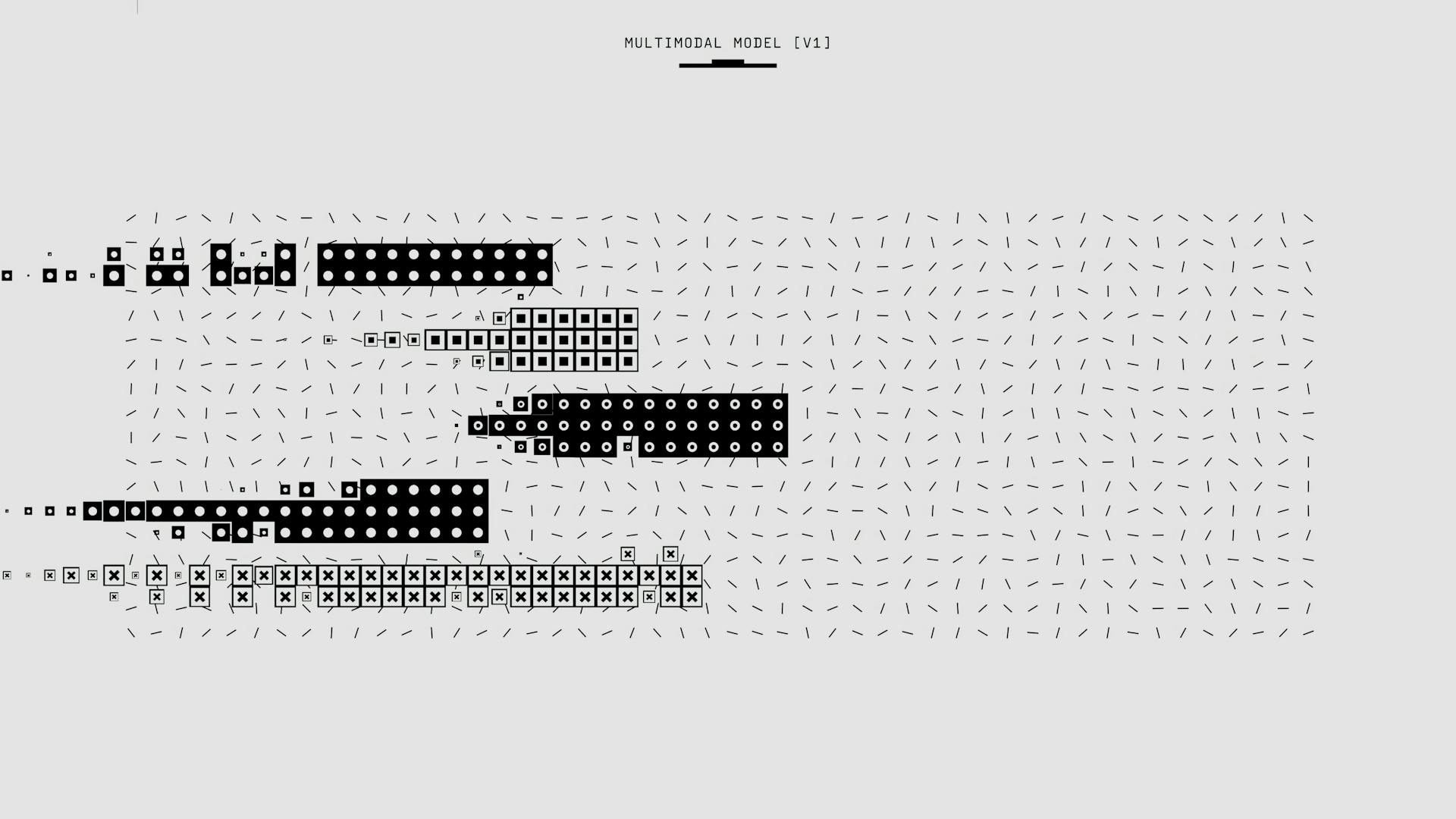

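At a high level, the encoder maps x to (μ, log σ), a sample z₀ drawn with the reparameterization trick is pushed through the flow-model to zₖ, and the decoder maps zₖ to a distribution over x.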
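
A minimal PyTorch skeleton of this three-module architecture is sketched below. The layer sizes, the two-dimensional latent space, the use of planar layers for the flow-model and the Bernoulli decoder (logits over 784 MNIST pixels) are all assumptions made for illustration, not the configuration of any particular published model.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Planar(nn.Module):
    """Compact planar layer used as one step of the flow-model."""

    def __init__(self, dim):
        super().__init__()
        self.u = nn.Parameter(0.1 * torch.randn(dim))
        self.w = nn.Parameter(0.1 * torch.randn(dim))
        self.b = nn.Parameter(torch.zeros(1))

    def forward(self, z):
        wu = self.w @ self.u
        u_hat = self.u + (F.softplus(wu) - 1 - wu) * self.w / (self.w @ self.w)  # keeps f invertible
        h = torch.tanh(z @ self.w + self.b)
        log_det = torch.log(torch.abs(1 + (1 - h ** 2) * (self.w @ u_hat)))
        return z + u_hat * h.unsqueeze(1), log_det


class FlowVAE(nn.Module):
    def __init__(self, x_dim=784, z_dim=2, hidden=256, n_flows=4):
        super().__init__()
        # Encoder: x -> (mu, log sigma) of the first variable z0
        self.encoder = nn.Sequential(nn.Linear(x_dim, hidden), nn.ReLU(), nn.Linear(hidden, 2 * z_dim))
        # Flow-model: z0 -> zk through a stack of invertible layers
        self.flows = nn.ModuleList([Planar(z_dim) for _ in range(n_flows)])
        # Decoder: zk -> logits of a Bernoulli p(x | zk)
        self.decoder = nn.Sequential(nn.Linear(z_dim, hidden), nn.ReLU(), nn.Linear(hidden, x_dim))

    def forward(self, x):
        mu, log_sigma = self.encoder(x).chunk(2, dim=1)
        eps = torch.randn_like(mu)                 # stochasticity lives in eps ~ N(0, I)
        z0 = mu + log_sigma.exp() * eps            # reparameterization trick
        z, sum_log_det = z0, torch.zeros(x.shape[0])
        for layer in self.flows:
            z, log_det = layer(z)
            sum_log_det = sum_log_det + log_det
        x_logits = self.decoder(z)
        return x_logits, z0, eps, log_sigma, z, sum_log_det


torch.manual_seed(0)
model = FlowVAE()
x = torch.rand(8, 784).round()                     # stand-in for a batch of binarized images
x_logits, z0, eps, log_sigma, zk, sum_log_det = model(x)
```

Training would then minimize the negative ELBO assembled from these outputs.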

Normalizing Flows, GANs, and VAEs

Normalizing flows are a type of generative model that can be used to learn the probability distribution of complex data. This is particularly useful in cases where we have vast amounts of unstructured and unlabeled data, such as in density estimation, outlier detection, and text summarization.

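Normalizing flows are often presented as a way around problems of GANs and VAEs, such as mode collapse and vanishing gradients. They achieve this by using reversible functions: because every transformation can be inverted, the exact log-likelihood of the data can be computed and maximized directly.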

To implement a generative model based on normalizing flows, we can use a specific architecture that consists of three modules: an encoder, a flow-model, and a decoder.

The encoder takes the observed input x and outputs the mean (μ) and log-std (log(σ)) of the first variable in the flow of random variables, Z₀. This is similar to the encoders of variational auto-encoders.

The flow-model transforms the samples from the first distribution (Z₀) to the samples Zₖ from the complex distribution qₖ. We use the reparameterization trick to move the stochasticity to samples from another standard Gaussian distribution, ε.
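
The reparameterization trick is easy to see in isolation: all randomness is drawn from ε ~ N(0, I), and z₀ = μ + σ·ε is a deterministic, differentiable function of the encoder outputs, so gradients reach μ and log σ. In the sketch below, fixed tensors stand in for the encoder outputs, and the toy objective (the mean of ‖z₀‖²) is only a placeholder for the real loss.

```python
import torch

torch.manual_seed(0)
# Stand-ins for encoder outputs (in a real model these come from the network)
mu = torch.tensor([0.5, -1.0], requires_grad=True)
log_sigma = torch.tensor([0.0, 0.3], requires_grad=True)

eps = torch.randn(1000, 2)               # all randomness: eps ~ N(0, I), needs no gradient
z0 = mu + log_sigma.exp() * eps          # differentiable function of mu and log_sigma

objective = (z0 ** 2).sum(dim=1).mean()  # toy stand-in for the real loss
objective.backward()
print(mu.grad, log_sigma.grad)           # Monte Carlo estimates of 2*mu and 2*sigma**2
```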

The decoder takes the latent variable Zₖ and models P(x|zₖ), which is similar to the decoder of variational auto-encoders.

Here's an overview of the three modules:

- Encoder: μ, log(σ)

- Flow-model: Z₀ → Zₖ using reversible functions

- Decoder: P(x|zₖ)

By implementing these modules, we can learn the probability distribution of complex data and generate new samples that are similar to the original data.

Generating Samples

Generating samples is a crucial step in working with normalizing flows. With the nflows library, drawing samples from a trained flow takes a single line of code.
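
In nflows, a `Flow` object exposes a `sample(num_samples)` method for this. To keep the example self-contained, the sketch below uses PyTorch's built-in `torch.distributions` instead of nflows. The base distribution and the two transforms are arbitrary illustrative choices, but the pattern is the same: one call draws samples, and because the flow is invertible, their exact log-densities are available too.

```python
import torch
from torch.distributions import Independent, Normal, TransformedDistribution
from torch.distributions.transforms import AffineTransform, TanhTransform

torch.manual_seed(0)
# 2-D standard normal base; Independent treats the two coordinates as one event
base = Independent(Normal(torch.zeros(2), torch.ones(2)), 1)
# An arbitrary invertible flow: element-wise affine map followed by tanh
flow_dist = TransformedDistribution(base, [
    AffineTransform(loc=torch.tensor([0.0, 1.0]), scale=torch.tensor([0.5, 0.5])),
    TanhTransform(),
])

samples = flow_dist.sample((1000,))  # the single line that draws samples
log_p = flow_dist.log_prob(samples)  # exact log-density of each sample
print(samples.shape, log_p.shape)    # torch.Size([1000, 2]) torch.Size([1000])
```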

A small display helper that arranges the generated samples in a square grid is useful for visualizing them, since it lets you see the results of your model at a glance.
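
The core of such a display helper is tiling N samples into a √N × √N grid. The function `make_square_grid` below is a hypothetical, dependency-free sketch of that step; the random tensors stand in for 25 generated 28×28 images.

```python
import math

import torch


def make_square_grid(images):
    """Tile N images of shape (H, W) into one (sqrt(N)*H, sqrt(N)*W) tensor.

    Hypothetical helper: N must be a perfect square (e.g. 25 -> 5 x 5).
    """
    n, h, w = images.shape
    k = math.isqrt(n)
    if k * k != n:
        raise ValueError("number of images must be a perfect square")
    grid = images.reshape(k, k, h, w)   # (grid row, grid col, H, W)
    grid = grid.permute(0, 2, 1, 3)     # (grid row, H, grid col, W)
    return grid.reshape(k * h, k * w)


# 25 stand-in samples, flattened as a flow would output them, then reshaped to images
samples = torch.rand(25, 28 * 28).reshape(25, 28, 28)
grid = make_square_grid(samples)
print(grid.shape)  # torch.Size([140, 140])
```

The resulting 2-D tensor can then be shown with any image viewer, for example matplotlib's `imshow` with a grayscale colormap.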

With a Glow model, you can generate samples from each dataset it has been trained on. Generating 25 images per dataset and viewing them as a 5×5 grid is a quick, qualitative way to check the model's performance.

Understanding Normalizing Flows

Normalizing flows are a technique for learning the probability distribution of large datasets. They are often presented as a solution to issues with GANs and VAEs, such as mode collapse and vanishing gradients.

Normalizing flows use reversible functions, which allow exact evaluation of the probability density and exact inference of the latent variables. This is a significant improvement over GANs, which provide no explicit density, and VAEs, which only optimize a lower bound on the log-likelihood.

These techniques have applications in density estimation, outlier detection, text summarization, data clustering, bioinformatics, and DNA modeling.

Advantages of Normalizing Flows

Normalizing flows offer several advantages over GANs and VAEs. They don't require putting noise on the output, allowing for more powerful local variance models.

One of the key benefits of normalizing flows is their stable training process. Unlike GANs, which require careful tuning of hyperparameters for both generators and discriminators, flow-based models are much easier to train.

Normalizing flows also tend to converge more reliably than GANs and VAEs. This makes them a dependable choice for learning complex data distributions.

Here are some specific advantages of normalizing flows:

- More powerful local variance models

- Stable training process

- Easier to converge

What's Happening?

Normalizing flows are a powerful tool for learning complex data distributions, and understanding how they work is crucial for implementing them effectively. We're going to take a closer look at what's happening behind the scenes when we use normalizing flows.

We start by initializing an empty list called transformations. This list will hold the sequence of transformations that we'll apply to our data.

We then use a list comprehension to create a sequence of AffineTransformation instances. This means we're creating a list containing five instances of the AffineTransformation class, which will be used to transform our data.

To generate 1000 samples from the base distribution, we use the sample method of the base_distribution object. This will give us a starting point for our data.

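We then apply the sequence of transformations we created earlier to these samples, mapping each one to a new value. Note that a composition of affine maps is itself affine, so with purely affine layers the result is still a shifted and scaled Gaussian; non-linear layers such as coupling, planar, or radial flows are needed to turn the samples into something genuinely more complex.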

The flow object represents the sequence of transformations we created, and we apply these transformations in sequence by passing the samples from the base distribution through the flow. This is a key step in using normalizing flows.

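To remove unnecessary dimensions from the generated samples, we use the squeeze() method, which drops every dimension of size 1 (for example, turning a tensor of shape (1000, 1) into one of shape (1000,)). This is a common step when working with PyTorch tensors, and it ensures that the shape of our data matches the desired format.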

Here's a step-by-step summary of the process:

- Initialize an empty list called transformations.

- Use a list comprehension to create a sequence of AffineTransformation instances.

- Generate 1000 samples from the base distribution using the sample method.

- Apply the sequence of transformations to the samples using the flow object.

- Remove any unnecessary dimensions from the generated samples using the squeeze() method.
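
The code this walkthrough describes is not shown, so here is a minimal reconstruction of the steps above in PyTorch. `AffineTransformation` is a hypothetical element-wise affine layer written for this sketch (PyTorch's own class with a similar role is `torch.distributions.transforms.AffineTransform`), and using `nn.Sequential` as the `flow` object is our assumption.

```python
import torch
import torch.nn as nn
from torch.distributions import Normal


class AffineTransformation(nn.Module):
    """Hypothetical element-wise affine layer: y = x * exp(log_scale) + shift."""

    def __init__(self, dim=1):
        super().__init__()
        self.log_scale = nn.Parameter(0.1 * torch.randn(dim))
        self.shift = nn.Parameter(0.1 * torch.randn(dim))

    def forward(self, x):
        return x * torch.exp(self.log_scale) + self.shift


torch.manual_seed(0)

# Step 1: initialize an empty list called transformations
transformations = []
# Step 2: fill it with five AffineTransformation instances via a list comprehension
transformations += [AffineTransformation() for _ in range(5)]
# The flow object applies the transformations in sequence
flow = nn.Sequential(*transformations)

# Step 3: draw 1000 samples from the base distribution, shape (1000, 1)
base_distribution = Normal(torch.zeros(1), torch.ones(1))
base_samples = base_distribution.sample((1000,))

# Steps 4 and 5: pass the samples through the flow, then drop the size-1 dimension
with torch.no_grad():
    samples = flow(base_samples).squeeze()
print(samples.shape)  # torch.Size([1000])
```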

Loss Functions

Loss Functions are a crucial part of training any machine learning model.

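The objective we optimize is the negative evidence lower bound (negative ELBO). With a flow-based posterior, it is an expectation over the initial samples z₀ of three terms: the log-density of z₀, the log joint density of the data and the final latent variable zₖ, and the sum of the flow layers' log-determinants.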
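
Written out, with fⱼ denoting the j-th flow layer that maps zⱼ₋₁ to zⱼ (this layer notation is ours) and the expectation taken over initial samples z₀ ~ q₀, the objective is:

```latex
-\mathrm{ELBO}(x) = \mathbb{E}_{q_0(z_0)}\left[ \ln q_0(z_0) - \ln p(x \mid z_k) - \ln p(z_k) - \sum_{j=1}^{k} \ln \left| \det \frac{\partial f_j}{\partial z_{j-1}} \right| \right]
```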

The log probability of the initial variational samples is one of the outputs of the flow-model, denoted as ln q₀(z₀).

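The second term in the expectation, log p(x, zₖ), can be written as the sum of log p(x|zₖ) and log p(zₖ). The first of these is computed from the decoder output (for binarized images, for example, as a Bernoulli log-likelihood, which is the negative binary cross-entropy), and the second is the log probability of zₖ under the prior.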

The log determinant output of the flow-model is the third term in the expectation.

To estimate the expectation, we can take the average over the mini-batch, which is a common technique in machine learning.

In PyTorch, the final loss is computed from these terms, where D is the dimension of the random variables (in a diagonal-Gaussian setup, D appears in the normalizing constant of the Gaussian log-densities).
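
A minimal sketch of that computation follows, assuming a diagonal-Gaussian encoder, a standard normal prior on zₖ and a Bernoulli decoder that outputs logits. These are all assumptions, and the function name and argument order are ours. The mini-batch average at the end is the Monte Carlo estimate of the expectation.

```python
import math

import torch
import torch.nn.functional as F


def negative_elbo(x, x_logits, eps, log_sigma, zk, sum_log_det):
    """Mini-batch estimate of the negative ELBO (sketch; Bernoulli decoder assumed)."""
    D = eps.shape[1]  # dimension of the random variables
    log_2pi = math.log(2 * math.pi)
    # ln q0(z0) for z0 = mu + sigma * eps under a diagonal Gaussian
    log_q0 = -0.5 * D * log_2pi - log_sigma.sum(dim=1) - 0.5 * (eps ** 2).sum(dim=1)
    # ln p(zk) under a standard normal prior
    log_p_zk = -0.5 * D * log_2pi - 0.5 * (zk ** 2).sum(dim=1)
    # ln p(x | zk) for binarized data: negative binary cross-entropy
    log_p_x_given_zk = -F.binary_cross_entropy_with_logits(x_logits, x, reduction="none").sum(dim=1)
    # Per-sample negative ELBO, averaged over the mini-batch
    per_sample = log_q0 - sum_log_det - log_p_x_given_zk - log_p_zk
    return per_sample.mean()


torch.manual_seed(0)
B, D, P = 4, 2, 6
mu, log_sigma = torch.randn(B, D), 0.1 * torch.randn(B, D)
eps = torch.randn(B, D)
z0 = mu + log_sigma.exp() * eps
x = torch.randint(0, 2, (B, P)).float()
x_logits = torch.randn(B, P)
# With an identity flow, zk = z0 and the log-determinant is zero
loss = negative_elbo(x, x_logits, eps, log_sigma, z0, torch.zeros(B))
print(loss.item())
```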

Frequently Asked Questions

What is the disadvantage of normalizing flow?

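Normalizing flows can be unstable to train and difficult to interpret. Because every transformation must be invertible, the latent space must have the same dimension as the data, and the layers must be designed so that their Jacobian determinants stay cheap to compute; as a result, modeling complex distributions can require many layers and a lot of computation. This can limit their use in some scenarios, such as real-world data analysis.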

How do you normalize flow?

Normalizing flows work by applying a series of invertible transformations to a probability density, allowing it to 'flow' through the sequence and construct complex distributions. This process relies on the change-of-variables rule to transform the initial density into the final one.
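
In symbols, for a stack of k invertible, differentiable layers f₁ to fₖ, the change-of-variables rule gives the density of the final variable as follows (the layer notation is ours):

```latex
z_k = f_k \circ \cdots \circ f_1(z_0), \qquad
\ln q_k(z_k) = \ln q_0(z_0) - \sum_{j=1}^{k} \ln \left| \det \frac{\partial f_j}{\partial z_{j-1}} \right|
```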

Sources

- https://grishmaprs.medium.com/normalizing-flows-5b5a713e45e2

- https://towardsdatascience.com/introduction-to-normalizing-flows-d002af262a4b

- https://siboehm.com/articles/19/normalizing-flow-network

- https://www.analyticsvidhya.com/blog/2023/09/the-creative-potential-of-normalizing-flows-in-generative-ai/

- https://towardsdatascience.com/variational-inference-with-normalizing-flows-on-mnist-9258bbcf8810

Featured Images: pexels.com