A confusion matrix is a table used to evaluate the performance of a classification model. It compares the model's predictions with the actual classes, so it shows not only how often the model is right but also which kinds of mistakes it makes.

Confusion matrices are widely used in machine learning and data science to assess a model's predictions, and building one is a standard step when evaluating a classifier.

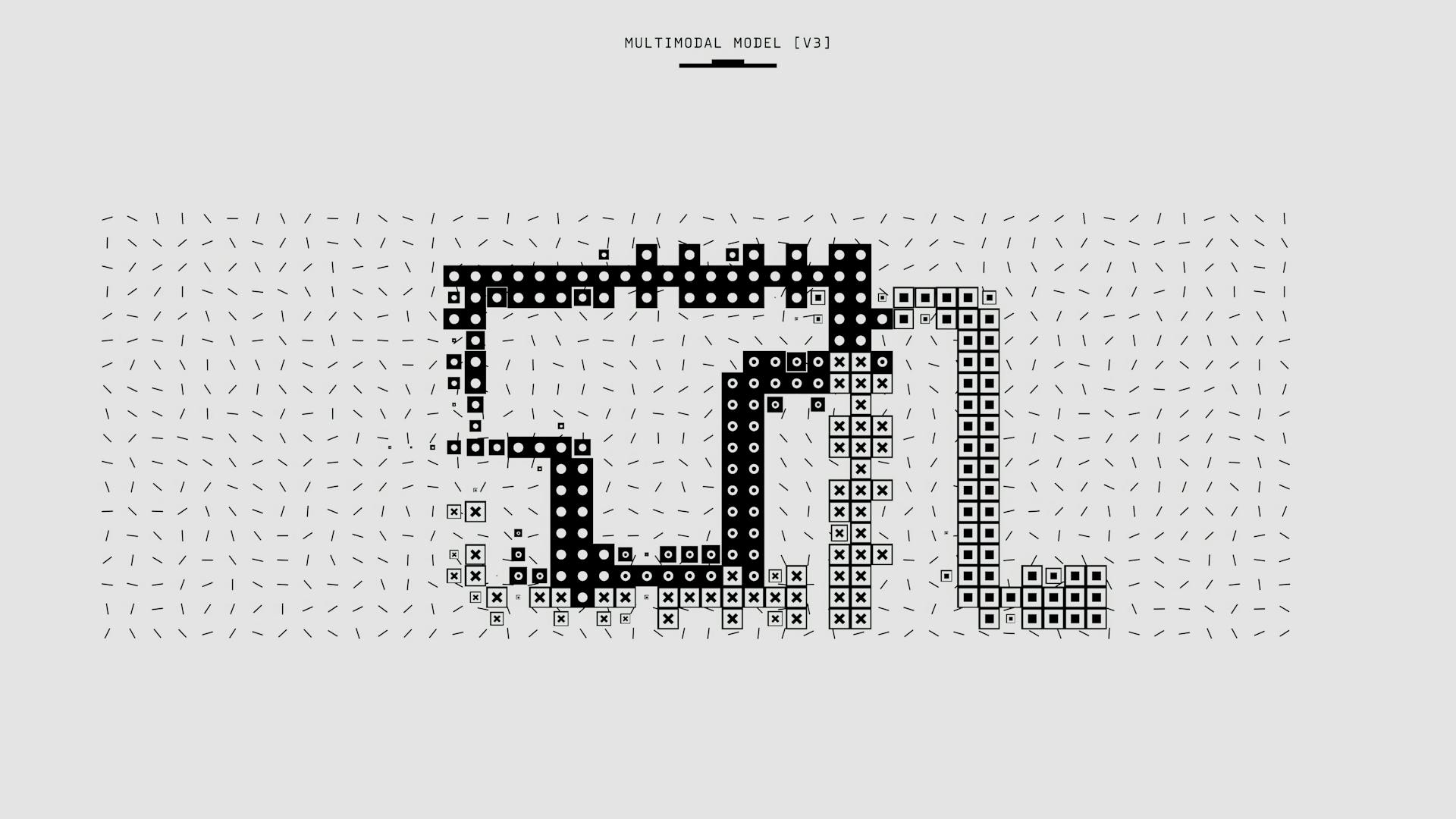

A standard confusion matrix for a binary classifier has four cells (quadrants): true positives, false positives, true negatives, and false negatives. Laying the counts out this way makes it easy to see where the model performs well and which kinds of errors it makes.

A model's accuracy is calculated by dividing the sum of true positives and true negatives by the total number of observations: (TP + TN) / (TP + TN + FP + FN). This gives a single overall measure of how often the model is correct.
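
As a minimal sketch, the accuracy formula translates directly into a small Python function. The function name `accuracy` and the example counts are hypothetical, chosen only for illustration.

```python
def accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    """Fraction of all observations that the model classified correctly."""
    total = tp + tn + fp + fn
    if total == 0:
        raise ValueError("confusion matrix is empty")
    return (tp + tn) / total


# Hypothetical counts: 40 TP, 45 TN, 5 FP, 10 FN (100 observations in total)
print(accuracy(tp=40, tn=45, fp=5, fn=10))  # 0.85
```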

What is a Confusion Matrix?

A confusion matrix is a table used to evaluate the performance of a classifier. In the simplest case it describes a binary classifier, such as a spam filter, by comparing the model's predictions with the actual outcomes.

Each prediction falls into one of four possible outcomes: true positive, false positive, true negative, or false negative. A true positive occurs when a spam email is correctly classified as spam, while a false positive occurs when a legitimate email is misclassified as spam.

You can think of a confusion matrix as a scoreboard for your model's predictions. Each row typically corresponds to an actual class and each column to a predicted class (some tools use the opposite convention), so each cell counts the true positives, false positives, true negatives, or false negatives. This helps you identify where your model needs improvement.

Here's a breakdown of the four possible outcomes:

- True Positive (TP): A spam email correctly classified as spam

- False Positive (FP): A legitimate email misclassified as spam

- False Negative (FN): A spam email misclassified as legitimate

- True Negative (TN): A legitimate email correctly classified as legitimate
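
The four outcomes above can be counted with a few lines of Python. This is a minimal sketch that assumes labels are plain strings, with `"spam"` as the positive class and `"legitimate"` as the negative class; the function name `binary_confusion` is hypothetical.

```python
from collections import Counter


def binary_confusion(actual, predicted, positive="spam"):
    """Count TP, FP, FN and TN for a two-class problem.

    `actual` and `predicted` are equal-length sequences of labels.
    Any label other than `positive` is treated as the negative class.
    """
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    counts = Counter()
    for a, p in zip(actual, predicted):
        if p == positive:
            # Predicted spam: a hit if it really was spam, a false alarm otherwise
            counts["TP" if a == positive else "FP"] += 1
        else:
            # Predicted legitimate: a miss if it was actually spam
            counts["FN" if a == positive else "TN"] += 1
    return {k: counts[k] for k in ("TP", "FP", "FN", "TN")}


actual = ["spam", "spam", "legitimate", "legitimate", "spam", "legitimate"]
predicted = ["spam", "legitimate", "spam", "legitimate", "spam", "legitimate"]
print(binary_confusion(actual, predicted))
# {'TP': 2, 'FP': 1, 'FN': 1, 'TN': 2}
```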

A confusion matrix can also reveal an imbalanced dataset, where one class has far more instances than the other. For example, if you have a dataset of cloud photos in which only a few show a rare cloud type, the counts for the actual classes in your matrix will show a large imbalance between the two classes.
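
One way to see the imbalance is to sum each row of the matrix, which gives the number of actual instances per class. The sketch below assumes rows are actual classes and columns are predicted classes; the function name `class_support` and the cloud-photo counts are hypothetical.

```python
def class_support(matrix, class_names):
    """Return the number of actual instances per class.

    Assumes rows of `matrix` are actual classes and columns are
    predicted classes, so each row sum is that class's true count.
    """
    return {name: sum(row) for name, row in zip(class_names, matrix)}


# Hypothetical cloud-photo results: rows = actual, columns = predicted
names = ["common cloud", "rare cloud"]
matrix = [
    [950, 10],  # actual common: 950 predicted common, 10 predicted rare
    [30, 10],   # actual rare: 30 predicted common, 10 predicted rare
]
support = class_support(matrix, names)
total = sum(support.values())
for name, n in support.items():
    print(f"{name}: {n} ({n / total:.1%})")
# common cloud: 960 (96.0%)
# rare cloud: 40 (4.0%)
```

In this made-up example, accuracy is (950 + 10) / 1000 = 0.96 even though the model found only 10 of the 40 rare-cloud photos, which is why the per-class totals are worth checking.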

Purpose and Description

A confusion matrix is a table of results showing True Positive, False Positive, True Negative, and False Negative rates for each class modeled in an input model.

The Confusion Matrix function creates this table of results, which helps you understand how well your classification model is performing. It works like a report card for your model, showing where it gets things right and where it gets things wrong.

Input models must be of type PLSDA, SVMDA, KNN, or SIMCA for the Confusion Matrix function to accept them.

You can also use vectors of true class and predicted class instead of a model as input, which is a convenient option if you don't have a model handy.

The Confusion Matrix function calculates four classification rates, defined as:

- True Positive Rate (TPR) = TP / (TP + FN)

- False Positive Rate (FPR) = FP / (FP + TN)

- True Negative Rate (TNR) = TN / (TN + FP)

- False Negative Rate (FNR) = FN / (FN + TP)
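
For more than two classes, these rates are usually computed per class by treating that class as positive and all others as negative (one-vs-rest). The sketch below does this for a square matrix with rows as actual classes and columns as predicted classes; the function name `class_rates` and the three-class counts are hypothetical, and the output format is not meant to mirror the Confusion Matrix function's own.

```python
def class_rates(matrix, class_names):
    """One-vs-rest TPR, FPR, TNR and FNR for each class.

    Assumes a square matrix with rows = actual class and
    columns = predicted class. Undefined rates are returned as NaN.
    """
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    nan = float("nan")
    rates = {}
    for i, name in enumerate(class_names):
        tp = matrix[i][i]
        fn = sum(matrix[i]) - tp                        # actual i, predicted other
        fp = sum(matrix[r][i] for r in range(n)) - tp   # predicted i, actual other
        tn = total - tp - fn - fp                       # everything else
        rates[name] = {
            "TPR": tp / (tp + fn) if tp + fn else nan,
            "FPR": fp / (fp + tn) if fp + tn else nan,
            "TNR": tn / (tn + fp) if tn + fp else nan,
            "FNR": fn / (fn + tp) if fn + tp else nan,
        }
    return rates


names = ["A", "B", "C"]
matrix = [
    [8, 1, 1],
    [2, 6, 2],
    [0, 1, 9],
]
for name, r in class_rates(matrix, names).items():
    print(name, {k: round(v, 2) for k, v in r.items()})
```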

Components and Outputs

For a binary classifier, the confusion matrix is a table with two rows and two columns that reports the number of true positives, false negatives, false positives, and true negatives. This allows for more detailed analysis than simply observing the proportion of correct classifications.

Here's a breakdown of the components of a binary confusion matrix, with actual classes as rows and predicted classes as columns:

| | Predicted positive | Predicted negative |
|---|---|---|
| Actual positive | True positive (TP) | False negative (FN) |
| Actual negative | False positive (FP) | True negative (TN) |

The outputs of the Confusion Matrix function include the confusion matrix itself, the class names, and a text representation of the matrix. If there are only two classes, the Matthews correlation coefficient (MCC) is also included.
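
The Matthews correlation coefficient combines all four binary counts into one number between -1 and 1: MCC = (TP × TN − FP × FN) / sqrt((TP + FP)(TP + FN)(TN + FP)(TN + FN)). The sketch below is a hypothetical helper, not the Confusion Matrix function's implementation; returning 0 when a marginal total is zero is an assumed convention, since the formula is undefined in that case.

```python
import math


def matthews_corrcoef(tp, tn, fp, fn):
    """Matthews correlation coefficient for a binary confusion matrix.

    Returns a value in [-1, 1]. If any row or column total is zero the
    formula is undefined; this sketch returns 0.0 in that case (an assumption).
    """
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    if denom == 0:
        return 0.0
    return (tp * tn - fp * fn) / denom


# Hypothetical counts: 40 TP, 45 TN, 5 FP, 10 FN
print(round(matthews_corrcoef(tp=40, tn=45, fp=5, fn=10), 3))
```

A value of 1 means perfect agreement between predictions and actual classes, 0 means no better than chance, and -1 means complete disagreement.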

Threshold's Impact on Positives and Negatives

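Many classifiers output a score, such as a probability, and a threshold converts that score into a positive or negative prediction. Different thresholds can significantly change the numbers of true and false positives and negatives. Raising the threshold generally produces fewer positive predictions, which tends to reduce false positives and increase false negatives; lowering it does the opposite. How sharply the counts change depends on how the scores of positive and negative examples are distributed.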
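
The effect of the threshold can be sketched by sweeping a few values over a fixed set of scores. This assumes a score at or above the threshold counts as a positive prediction; the function name `counts_at_threshold`, the scores, and the labels are all hypothetical.

```python
def counts_at_threshold(scores, labels, threshold):
    """Classify score >= threshold as positive and count the outcomes.

    `labels` holds 1 for actual positives and 0 for actual negatives.
    Returns (TP, FP, TN, FN).
    """
    tp = fp = tn = fn = 0
    for s, y in zip(scores, labels):
        predicted_positive = s >= threshold
        if predicted_positive and y == 1:
            tp += 1
        elif predicted_positive and y == 0:
            fp += 1
        elif not predicted_positive and y == 1:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn


# Hypothetical model scores and true labels
scores = [0.95, 0.80, 0.70, 0.60, 0.55, 0.40, 0.30, 0.10]
labels = [1, 1, 0, 1, 0, 0, 1, 0]
for t in (0.2, 0.5, 0.75):
    tp, fp, tn, fn = counts_at_threshold(scores, labels, t)
    print(f"threshold={t}: TP={tp} FP={fp} TN={tn} FN={fn}")
# threshold=0.2: TP=4 FP=3 TN=1 FN=0
# threshold=0.5: TP=3 FP=2 TN=2 FN=1
# threshold=0.75: TP=2 FP=0 TN=4 FN=2
```

As the threshold rises, false positives fall from 3 to 0 while false negatives rise from 0 to 2.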

In a separated dataset, positive and negative examples are well differentiated: most positive examples have higher scores than negative examples, so a threshold placed between the two groups produces few false positives and few false negatives. This is more likely when the data is well understood and the model is well trained.

Many datasets, however, are unseparated: many positive examples have lower scores than negative examples, and many negative examples have higher scores than positive examples. In that case no single threshold can avoid both false positives and false negatives. This can happen when the data is noisy or the model is not well suited to the task.

Datasets can also be imbalanced, containing only a few examples of the positive class. A model can then reach high accuracy while still missing most of the positives, which complicates both training and evaluation.

Here's a breakdown of the different types of datasets and their characteristics:

- Separated: Positive examples and negative examples are generally well-differentiated.

- Unseparated: Many positive examples have lower scores than negative examples, and many negative examples have higher scores than positive examples.

- Imbalanced: Contains only a few examples of the positive class.
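
The three categories above can be checked roughly in code. A dataset is perfectly separated when some threshold gives zero false positives and zero false negatives, which happens exactly when every positive scores higher than every negative. The sketch below uses hypothetical function names, and the 10% cutoff for "imbalanced" is an arbitrary assumption, not a standard value.

```python
def is_separated(scores, labels):
    """True if some threshold yields no false positives and no false negatives.

    That is the case exactly when every positive (label 1) scores higher
    than every negative (label 0).
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        return True  # only one class present: trivially separable
    return min(pos) > max(neg)


def describe(scores, labels, imbalance_cutoff=0.1):
    """Tag a dataset; `imbalance_cutoff` is a hypothetical choice."""
    tags = ["separated" if is_separated(scores, labels) else "unseparated"]
    if sum(labels) / len(labels) < imbalance_cutoff:
        tags.append("imbalanced")
    return tags


print(describe([0.9, 0.8, 0.3, 0.2], [1, 1, 0, 0]))
print(describe([0.95, 0.80, 0.70, 0.60, 0.55, 0.40, 0.30, 0.10],
               [1, 1, 0, 1, 0, 0, 1, 0]))
print(describe([0.9] + [0.1] * 19, [1] + [0] * 19))
```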

Metrics

Metrics condense the counts in a confusion matrix into single numbers, which makes it easier to evaluate a model's performance and compare models.

The F1 score is the harmonic mean of precision, TP / (TP + FP), and recall, TP / (TP + FN). A good F1 score means you have few false positives and few false negatives, so you're correctly identifying real threats without being distracted by false alarms.

The F1 score ranges from 0 to 1. A score of 1 is perfect (no false positives and no false negatives), while a score of 0 means the model found no true positives at all.
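
A minimal sketch of the F1 calculation from confusion-matrix counts follows. The function name `f1_from_counts` and the example counts are hypothetical; returning 0 when precision and recall are both zero (or undefined) is an assumed convention.

```python
def f1_from_counts(tp, fp, fn):
    """Harmonic mean of precision and recall, computed from raw counts.

    Returns 0.0 when there are no true positives, since F1 is 0
    (or undefined) in that case.
    """
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts: precision = 40/45, recall = 40/50
print(round(f1_from_counts(tp=40, fp=5, fn=10), 3))
print(f1_from_counts(tp=10, fp=0, fn=0))  # perfect model
print(f1_from_counts(tp=0, fp=5, fn=5))   # total failure
```

The result equals 2·TP / (2·TP + FP + FN), which makes it clear that F1 ignores true negatives.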

All models are wrong, but some are useful: in practice, a model will generate some false negatives, some false positives, or both.

You'll need to optimize for the performance metrics that matter most for your specific problem, which often means trading false negatives against false positives: reducing one typically increases the other.
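
One simple way to make this tradeoff explicit is to assign a cost to each kind of error and pick the threshold with the lowest total cost. This is only one of many possible approaches, sketched here under the assumption that a score at or above the threshold counts as positive; the function name `pick_threshold`, the costs, and the data are hypothetical.

```python
def pick_threshold(scores, labels, cost_fp=1.0, cost_fn=1.0):
    """Return (threshold, cost) with the lowest weighted error cost.

    Candidates are the observed scores plus one value above the maximum
    (which predicts everything negative). Labels are 1 (positive) or 0.
    """
    candidates = sorted(set(scores)) + [max(scores) + 1.0]
    best_t, best_cost = None, float("inf")
    for t in candidates:
        fp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 0)
        fn = sum(1 for s, y in zip(scores, labels) if s < t and y == 1)
        cost = cost_fp * fp + cost_fn * fn
        if cost < best_cost:  # keep the first (lowest) threshold on ties
            best_t, best_cost = t, cost
    return best_t, best_cost


scores = [0.95, 0.80, 0.70, 0.60, 0.55, 0.40, 0.30, 0.10]
labels = [1, 1, 0, 1, 0, 0, 1, 0]
print(pick_threshold(scores, labels))               # equal costs
print(pick_threshold(scores, labels, cost_fn=5.0))  # misses are expensive
print(pick_threshold(scores, labels, cost_fp=5.0))  # false alarms are expensive
```

With these made-up numbers, making false negatives expensive pushes the chosen threshold down (0.3), while making false positives expensive pushes it up (0.8).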

Featured Images: pexels.com