An energy-based model is a type of machine learning algorithm that uses energy functions to learn and represent complex data distributions.

Energy functions are mathematical functions that assign a value to each possible configuration of a system, with lower energy values indicating more likely configurations.

These models are often trained with contrastive divergence. This method updates the model parameters iteratively, lowering the energy of observed data and raising the energy of samples drawn from the model.

Energy-based models can be used for a variety of tasks, including density estimation, generative modeling, and clustering.


What is EBM?

An energy-based model, or EBM, is a type of probabilistic model used in machine learning to capture complex relationships within data.

EBMs assign a scalar energy to each configuration of the variables in the model, with lower energy for correct or desirable configurations and higher energy for incorrect or undesirable ones.


In this context, the term "energy" is borrowed from physics and represents a scalar value associated with the state of the system.

EBMs are used in various applications such as image recognition, natural language processing, and generative modeling.

They encompass a broad range of models, including Hopfield Networks, Boltzmann Machines, and Markov Random Fields.

EBM Approach

The EBM approach changes how prediction problems are framed in machine learning.

Instead of learning a function that maps each x directly to a y, we look for a y that is compatible with x. This is useful when several different y's can be valid for the same x.

We can reframe the problem as finding a y that makes F(x,y) low, where F(x,y) is some function that measures how well x and y go together. For example, is y an accurate high-resolution image of x?

In essence, the EBM approach is about finding the best match for x, rather than forcing x into a predetermined category. This approach is particularly useful for tasks like image recognition and translation.

Here are some examples of how the EBM approach can be applied:

- Is text A a good translation of text B?

- Is y an accurate high-resolution image of x?

Answering such questions comes down to scoring candidate y's with F(x, y) and keeping the one with the lowest energy.
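To make the idea concrete, here is a minimal sketch of EBM inference over a small set of candidates. It assumes a hypothetical compatibility energy for the "is y a high-resolution version of x?" question: y is a 4-pixel signal, x a 2-pixel one, and F(x, y) is the squared error between x and y downsampled by averaging neighbouring pixel pairs. The function names are illustrative, not an established API.

```python
def downsample(y):
    """Average each pair of neighbouring pixels (a hypothetical 2x downsampler)."""
    return [(y[i] + y[i + 1]) / 2 for i in range(0, len(y), 2)]


def energy(x, y):
    """Compatibility energy F(x, y): low when y downsamples to x."""
    return sum((a - b) ** 2 for a, b in zip(downsample(y), x))


def infer(x, candidates):
    """Return the candidate y with the lowest energy F(x, y)."""
    return min(candidates, key=lambda y: energy(x, y))


x = [0.5, 1.0]
candidates = [
    [0.0, 0.0, 1.0, 1.0],  # downsamples to [0.0, 1.0]
    [0.0, 1.0, 1.0, 1.0],  # downsamples to [0.5, 1.0]
    [1.0, 1.0, 0.0, 0.0],  # downsamples to [1.0, 0.0]
]
print(infer(x, candidates))  # [0.0, 1.0, 1.0, 1.0]
```

Nothing here is a classifier: the model never outputs y directly, it only scores pairs, and the search over candidates does the rest.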

Training and Optimization

Training an energy-based model involves adjusting the parameters of the energy function so that the model assigns low energy to observed, correct examples and high energy to incorrect or unobserved examples.

This is often done using gradient-based optimization techniques, where the gradient is computed with respect to the parameters of the energy function. The partition function, a normalization constant required for the Gibbs distribution, is computationally intractable for many interesting models, but various approximation methods like contrastive divergence can address this issue.
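One way to see why the partition function gets in the way: for the negative log-likelihood \(E_\theta(x) + \log Z_\theta\), the gradient with respect to \(\theta\) is the energy gradient at the data minus its expectation under the model, and that expectation requires \(Z_\theta\). The minimal sketch below assumes a hypothetical one-parameter energy \(E_\theta(x) = \theta x\) on the four states {0, 1, 2, 3}, small enough that both terms can be computed exactly. Contrastive divergence replaces the second term with an estimate from model samples.

```python
import math

STATES = [0, 1, 2, 3]


def energy(x, theta):
    """Hypothetical one-parameter energy E_theta(x) = theta * x."""
    return theta * x


def model_probs(theta):
    """Gibbs distribution p(x) = exp(-E(x)) / Z, with Z computed by enumeration."""
    weights = [math.exp(-energy(x, theta)) for x in STATES]
    z = sum(weights)  # partition function: feasible only for tiny state spaces
    return [w / z for w in weights]


def nll_gradient(data, theta):
    """d/dtheta of the average NLL = E_data[dE/dtheta] - E_model[dE/dtheta]."""
    positive = sum(data) / len(data)  # here dE/dtheta = x, averaged over the data
    negative = sum(p * x for p, x in zip(model_probs(theta), STATES))
    return positive - negative


data = [0, 0, 1, 0, 2, 1]  # made-up observations, biased toward small x
theta = 0.0
for _ in range(500):
    theta -= 0.1 * nll_gradient(data, theta)  # gradient descent on the NLL

model_mean = sum(p * x for p, x in zip(model_probs(theta), STATES))
print(round(model_mean, 3), round(sum(data) / len(data), 3))
```

Because this energy is linear in \(\theta\), training stops exactly when the model's mean of x matches the data mean: lowering the energy of observed states is balanced by the pressure to lower the partition function.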

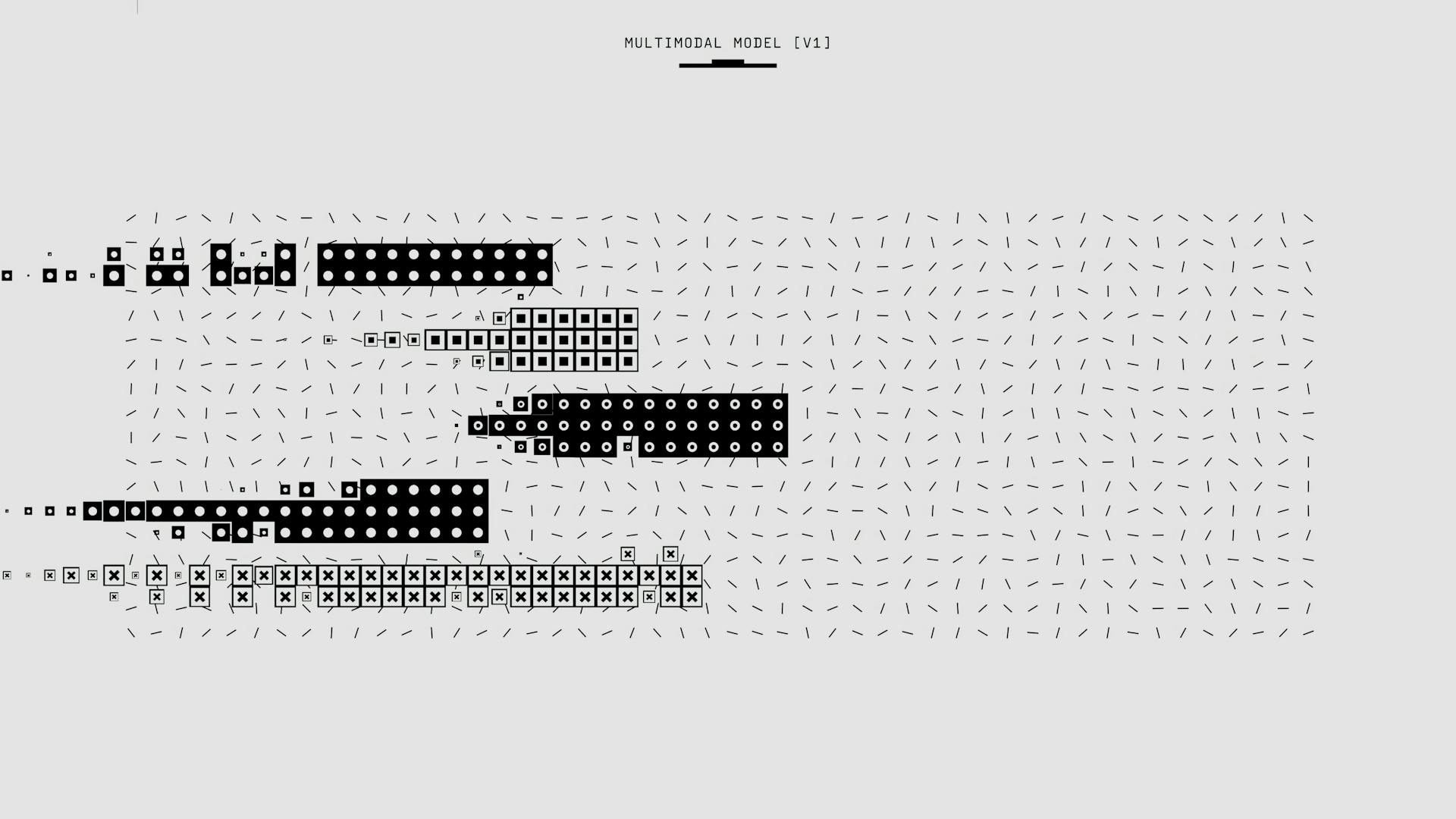

The training algorithm for an energy model on image modeling involves calculating the contrastive divergence objective using the energy model Eθ. A regularization loss is also added to ensure that the output values are in a reasonable range, typically around 0 for real data and slightly lower for fake data.

The training dynamic is implemented in a PyTorch Lightning module, where the loss function is carefully adjusted to account for the model's output being a negative energy function. The validation step is used to compare the energy (likelihood) of random images with that of unseen examples from the dataset.


Training Algorithm

Training an energy-based model involves adjusting its parameters to assign low energy to observed, correct examples and high energy to incorrect or unobserved examples.


Inference works the other way around: the parameters stay fixed, and we run gradient descent on the energy with respect to the unobserved variables to find values compatible with the observed ones.
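A minimal sketch of inference by gradient descent, assuming a hypothetical energy \(F(x, y) = (y - x^2)^2 + 0.5\,y^2\) whose derivative with respect to y is written out by hand (an autograd framework such as PyTorch would compute it automatically). The energy's parameters are fixed; only y moves.

```python
def energy(x, y):
    """Hypothetical energy: data term (y - x**2)**2 plus a small penalty on y."""
    return (y - x ** 2) ** 2 + 0.5 * y ** 2


def grad_y(x, y):
    """Analytic derivative dF/dy of the energy above."""
    return 2 * (y - x ** 2) + y


def infer(x, y0=0.0, lr=0.1, steps=200):
    """Gradient descent on y with x (and the energy's parameters) held fixed."""
    y = y0
    for _ in range(steps):
        y -= lr * grad_y(x, y)
    return y


y_star = infer(3.0)
print(round(y_star, 4))  # 6.0, the minimizer 2 * x**2 / 3
```

The answer is not simply x², because the penalty term also contributes to the energy; inference finds the best compromise between the two terms.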

One of the challenges in training energy-based models is computing the partition function, which is computationally intractable for many interesting models.

Contrastive divergence is an approximation method developed to address this issue.

The training algorithm for an energy model involves sampling real and fake data, calculating the contrastive divergence objective, and adding a regularization loss to ensure output values are in a reasonable range.

The regularization loss is less important than the contrastive divergence loss, so a weight factor α is used to balance the two.
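A minimal sketch of the combined objective, written with plain Python lists so it runs without PyTorch. As in the text, it assumes the network outputs \(f_\theta(x) = -E_\theta(x)\); the function name and the default α = 0.1 are illustrative assumptions. In a real implementation these would be tensors, and the loss would be backpropagated into the network's parameters.

```python
def ebm_loss(real_out, fake_out, alpha=0.1):
    """Contrastive divergence loss plus alpha-weighted regularization.

    real_out and fake_out hold model outputs f(x) = -E(x) for a batch of
    real images and a batch of MCMC samples ("fake" images), respectively.
    """
    def mean(values):
        return sum(values) / len(values)

    # Contrastive divergence: raise f (lower the energy) on real data,
    # lower f (raise the energy) on fake samples.
    cdiv_loss = mean(fake_out) - mean(real_out)
    # Regularization: keep both kinds of output close to 0.
    reg_loss = alpha * (mean([o ** 2 for o in real_out])
                        + mean([o ** 2 for o in fake_out]))
    return cdiv_loss + reg_loss, cdiv_loss, reg_loss


loss, cdiv, reg = ebm_loss(real_out=[0.2, 0.4], fake_out=[-0.6, -0.2])
```

Without the regularizer, the contrastive term alone could be driven down indefinitely by pushing the outputs for real and fake data further and further apart.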

The training dynamic is implemented in a PyTorch Lightning module, where the model fθ(x) = -Eθ(x) is used to compute the loss function.

Energy-based generative models are usually not evaluated on a test set, but a validation step can be used to compare the energy/likelihood of random images to unseen examples of the dataset.


Boltzmann Machine

The Boltzmann Machine is a type of energy-based model that's been around for a while. It's used for modeling a binary random vector with D components.

The energy function used in BM is quite simple: \(E_{\mathcal{G}}(\mathbf{x}; W) = -\frac{1}{2}\mathbf{x}^T W \mathbf{x}\). It decomposes into terms that represent the contribution of each pair of random variables (RVs) to the total energy, and the connection strength between \(x_i\) and \(x_j\) is given by \(W_{ij}\).
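The decomposition can be checked numerically. This sketch assumes x ∈ {0, 1}^D and a symmetric W with a zero diagonal (no self-connections), the usual convention. Under those assumptions, \(-\frac{1}{2}\mathbf{x}^T W \mathbf{x} = -\sum_{i<j} W_{ij} x_i x_j\). The weights are made up.

```python
def bm_energy(x, W):
    """E(x; W) = -1/2 * x^T W x for a binary vector x and weight matrix W."""
    D = len(x)
    return -0.5 * sum(W[i][j] * x[i] * x[j] for i in range(D) for j in range(D))


def bm_energy_pairwise(x, W):
    """The same energy as a sum of pairwise terms -W_ij * x_i * x_j (i < j).

    Equal to bm_energy when W is symmetric with a zero diagonal.
    """
    D = len(x)
    return -sum(W[i][j] * x[i] * x[j] for i in range(D) for j in range(i + 1, D))


W = [[0.0, 1.0, -2.0],
     [1.0, 0.0, 0.5],
     [-2.0, 0.5, 0.0]]
x = [1, 1, 0]
print(bm_energy(x, W), bm_energy_pairwise(x, W))  # -1.0 -1.0
```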

BM has a fully connected graph, which means there is only one maximal clique, containing all RVs: every variable interacts directly with every other one.

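To learn a BM, we use a general learning rule derived from the gradient of the log-likelihood, which involves the gradient of the energy function with respect to \(W\): \(W \leftarrow W - \lambda \left( \mathbb{E}_{\mathbf{x}\sim p_{\mathcal{D}}}[-\mathbf{x}\mathbf{x}^T] - \mathbb{E}_{\mathbf{x}\sim \mathrm{Gibbs}(p_W)}[-\mathbf{x}\mathbf{x}^T] \right)\). Here \(p_{\mathcal{D}}\) is the data distribution, \(\lambda\) is the learning rate, and the factor \(\frac{1}{2}\) from the energy is absorbed into \(\lambda\).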
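For a tiny BM, both expectations in the learning rule can be computed exactly by enumerating all \(2^D\) states, which makes the rule easy to try out. The sketch below assumes x ∈ {0, 1}^D, keeps the diagonal of W at zero, and uses made-up training data. For realistic D, the model expectation would have to be estimated with Gibbs sampling instead.

```python
import itertools
import math


def energy(x, W):
    """BM energy E(x; W) = -1/2 x^T W x."""
    D = len(x)
    return -0.5 * sum(W[i][j] * x[i] * x[j] for i in range(D) for j in range(D))


def correlations(samples, weights=None):
    """Weighted average of the outer products x x^T (uniform weights by default)."""
    if weights is None:
        weights = [1.0 / len(samples)] * len(samples)
    D = len(samples[0])
    return [[sum(w * x[i] * x[j] for x, w in zip(samples, weights))
             for j in range(D)] for i in range(D)]


def model_correlations(W):
    """E_{x ~ p_W}[x x^T], computed exactly by enumerating all 2^D states."""
    states = list(itertools.product([0, 1], repeat=len(W)))
    unnorm = [math.exp(-energy(x, W)) for x in states]
    Z = sum(unnorm)
    return correlations(states, [u / Z for u in unnorm])


def bm_update(W, data, lr):
    """W := W - lr * (E_data[-x x^T] - E_model[-x x^T]), diagonal kept at 0."""
    pos, neg = correlations(data), model_correlations(W)
    D = len(W)
    return [[0.0 if i == j else W[i][j] - lr * (-pos[i][j] + neg[i][j])
             for j in range(D)] for i in range(D)]


data = [(1, 1, 0), (1, 1, 0), (0, 0, 1), (1, 1, 1)]  # made-up training data
W = [[0.0] * 3 for _ in range(3)]
for _ in range(1000):
    W = bm_update(W, data, lr=0.5)
```

At convergence, the pairwise correlations \(\mathbb{E}[x_i x_j]\) under the model match those of the data, which is exactly the point where the two expectations in the rule cancel.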

Gibbs sampling is used to draw samples from a BM, and the conditional density of each RV given all the others has a convenient and tractable form. This form makes the nodes behave like computation units, similar to neurons.
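For x ∈ {0, 1}^D with a symmetric, zero-diagonal W, the conditional of one unit given the rest is \(p(x_i = 1 \vert \mathbf{x}_{-i}) = \sigma\left(\sum_{j \ne i} W_{ij} x_j\right)\), where \(\sigma\) is the logistic sigmoid: exactly a sigmoid neuron. Below is a minimal single-site Gibbs sampler under those assumptions. The weights, seed, and number of sweeps are arbitrary.

```python
import math
import random


def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))


def gibbs_sweep(x, W, rng):
    """Resample each unit in turn from p(x_i = 1 | x_-i) = sigmoid(sum_j W_ij x_j).

    Assumes x in {0, 1}^D and a symmetric W with a zero diagonal.
    """
    x = list(x)
    for i in range(len(x)):
        activation = sum(W[i][j] * x[j] for j in range(len(x)) if j != i)
        x[i] = 1 if rng.random() < sigmoid(activation) else 0
    return x


W = [[0.0, 2.0, -1.0],
     [2.0, 0.0, 0.5],
     [-1.0, 0.5, 0.0]]
rng = random.Random(0)
x = [0, 0, 0]
counts = [0, 0, 0]
n_sweeps = 20000
for _ in range(n_sweeps):
    x = gibbs_sweep(x, W, rng)
    counts = [c + xi for c, xi in zip(counts, x)]
print([c / n_sweeps for c in counts])  # estimated marginals p(x_i = 1)
```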

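A Restricted Boltzmann Machine (RBM) differs from a BM in that its graph is bipartite: visible nodes connect only to hidden nodes, never to each other, and the same holds for hidden nodes.

In an RBM, each visible node is conditionally independent of the other visible nodes given the hidden nodes, and each hidden node is conditionally independent of the other hidden nodes given the visible ones. This property makes RBMs an attractive choice for practical implementation.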

In RBM, we can iterate the following steps to perform Gibbs sampling: sample a hidden vector h^(t) ~ p(h | x^(t-1)), and then sample a visible vector x^(t) ~ p(x | h^(t)).


Characteristics and Applications

Energy-based models have several characteristics that make them useful for various applications. They are simple and stable, requiring only the design and training of a single object, the EBM itself.

EBMs can adapt to the available computation time: running the sampler for longer yields sharp, diverse samples, while a shorter run yields coarser, less diverse ones. This property is especially useful in tasks where speed is crucial.

One of the key advantages of EBMs is their flexibility. They can capture complex, high-order interactions between variables and can be applied to a wide range of data types and structures.

EBMs can be used for various applications, including image processing, sequence modeling, and reinforcement learning. They can also be used for tasks like classification, where the goal is to find the label that minimizes the energy for a given input.

Here are some of the key characteristics of EBMs:

- Simplicity and stability

- Adaptive computation time

- Flexibility

- Adaptive generation

- Compositionality

Probabilistic

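Probabilistic models are implicitly defined by energy functions over the whole state space through the Gibbs distribution \(p(\mathbf{x}) = \frac{\exp(-E(\mathbf{x}))}{Z}\), where \(Z = \sum_{\mathbf{x}} \exp(-E(\mathbf{x}))\) sums over all configurations. This equation is the sole connection between energy space and probability space.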

The energy function used in Boltzmann Machines (BM) is possibly the simplest one you can imagine: \(E_{\mathcal{G}}(\mathbf{x}; W) = - \frac{1}{2} \mathbf{x}^T W \mathbf{x}\). This energy function has a fully connected graph and hence only one maximal clique (containing all RVs).

The probabilistic model implicitly defined by the energy function over the whole state space has the same form as the Boltzmann distribution, a probability distribution that gives the probability that a system will be in a certain state as a function of that state's energy.


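The partition function (denoted as \(Z\)) is the denominator of the Gibbs distribution \(p(\mathbf{x}) = \exp(-E(\mathbf{x}))/Z\). It is difficult to compute in general because it sums over every configuration: the number of terms grows exponentially with the dimensionality of \(\mathbf{x}\) (\(2^D\) terms for \(D\) binary variables).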

A hyperparameter called “temperature” (denoted as \(\tau\)) is often introduced into this distribution, giving \(p(\mathbf{x}) \propto \exp(-E(\mathbf{x})/\tau)\); it also has its roots in the original Boltzmann distribution from physics. Lowering the temperature concentrates the probability mass in the lowest-energy regions.
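A small numerical sketch of the Gibbs distribution with temperature, using three made-up energies so that \(Z\) has only three terms. As τ shrinks, almost all of the mass moves to the lowest energy; as it grows, the distribution flattens toward uniform.

```python
import math


def gibbs_distribution(energies, tau=1.0):
    """p(x) = exp(-E(x) / tau) / Z over an explicit list of energies."""
    unnorm = [math.exp(-e / tau) for e in energies]
    Z = sum(unnorm)  # partition function: one term per configuration
    return [u / Z for u in unnorm]


energies = [0.0, 1.0, 2.0]  # made-up energies of three configurations
for tau in (10.0, 1.0, 0.1):
    print(tau, [round(p, 3) for p in gibbs_distribution(energies, tau)])
```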

In a Restricted Boltzmann Machine (RBM), each visible node is conditionally independent of the other visible nodes given the hidden nodes, and the same is true for hidden nodes given the visible ones. This makes the formulation much simpler, and the Gibbs sampling steps become very easy to compute.

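The conditionals of all hidden nodes given the visible nodes, and of all visible nodes given the hidden nodes, can therefore be computed in parallel, as shown in the following equations (\(\sigma\) denotes the logistic sigmoid):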

\[
\begin{aligned}
p(h_k = 1 \vert \mathbf{x}) &= \sigma\left( W_{[:,k]} \cdot \mathbf{x} \right) \\
p(x_j = 1 \vert \mathbf{h}) &= \sigma\left( W_{[j,:]} \cdot \mathbf{h} \right)
\end{aligned}
\]
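The two equations translate directly into block Gibbs sampling: sample all hidden units at once given x, then all visible units at once given h. The sketch below assumes a D × K weight matrix W stored as a list of rows and, like the equations, omits bias terms. The function names and weights are hypothetical.

```python
import math
import random


def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))


def sample_hidden(x, W, rng):
    """h_k ~ Bernoulli(sigmoid(W[:, k] . x)), all k independently given x."""
    D, K = len(W), len(W[0])
    return [int(rng.random() < sigmoid(sum(W[j][k] * x[j] for j in range(D))))
            for k in range(K)]


def sample_visible(h, W, rng):
    """x_j ~ Bernoulli(sigmoid(W[j, :] . h)), all j independently given h."""
    return [int(rng.random() < sigmoid(sum(w * hk for w, hk in zip(row, h))))
            for row in W]


def rbm_gibbs(x0, W, steps, rng):
    """Alternate h^(t) ~ p(h | x^(t-1)) and x^(t) ~ p(x | h^(t))."""
    x, h = list(x0), None  # h stays None if steps == 0
    for _ in range(steps):
        h = sample_hidden(x, W, rng)
        x = sample_visible(h, W, rng)
    return x, h


# Example: D = 4 visible units, K = 2 hidden units, arbitrary weights.
W = [[1.0, -1.0], [0.5, 0.5], [-1.0, 1.0], [0.0, 2.0]]
x, h = rbm_gibbs([1, 0, 1, 0], W, steps=100, rng=random.Random(0))
```

Because no unit in a layer depends on another unit in the same layer, each list comprehension could be replaced by one matrix-vector product in a tensor library.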

Applications

Energy-based models have a wide range of applications, including image processing, sequence modeling, and reinforcement learning.

They can be used for tasks like denoising, inpainting, and segmentation in image processing, where the energy function can be designed to favor smoother, more coherent images.

In sequence modeling, EBMs can capture the probability of sequences of words or characters, aiding in tasks like language modeling and text generation.

In reinforcement learning, the energy can represent a cost associated with states and actions, which helps in learning policies that minimize this cost.

A classification setup such as object recognition or sequence labeling can be treated as an energy-based task: for a given input X, we just need to find the Y that minimizes E(X, Y) (and hence maximizes its probability).
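A minimal sketch of this view, with made-up energies for three labels. Because there are only a few labels, normalizing over them to get \(p(y \vert x) = \exp(-E(x, y)) / \sum_{y'} \exp(-E(x, y'))\) is cheap, unlike a partition function over all possible images. Choosing the label itself needs only the minimum.

```python
import math


def classify(energies):
    """Pick the label with the lowest energy E(x, y); also return p(y | x).

    energies maps each candidate label y to E(x, y) for one fixed input x.
    """
    m = min(energies.values())  # shift by the minimum for numerical stability
    unnorm = {y: math.exp(-(e - m)) for y, e in energies.items()}
    Z = sum(unnorm.values())
    probs = {y: u / Z for y, u in unnorm.items()}
    best = min(energies, key=energies.get)
    return best, probs


label, probs = classify({"cat": 0.3, "dog": 1.5, "car": 4.0})
print(label)  # cat
```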

Here are some examples of applications of energy-based models:

- Denoising of images

- Object recognition

- Sequence labeling

These applications take advantage of the fact that an energy-based model only needs to compare the energies of candidate answers and pick the one with the lowest energy. It does not need normalized probabilities, which can make it simpler to learn and more efficient to use.

Variants and Alternatives

EBMs have alternatives like variational autoencoders (VAEs), which are known for their ability to learn complex probability distributions.

Generative adversarial networks (GANs) are another alternative that can generate new data samples resembling existing data.

Normalizing flows, another technique, can also be used for generative modeling and has its own set of advantages over EBMs.

Disadvantages

Training energy-based models can be a real challenge due to the intractability of the partition function.

This means that it can be difficult to calculate the probability of a particular state, which is a crucial aspect of many machine learning tasks.

Designing an energy function for a specific problem is a non-trivial task that requires careful consideration.

Getting stuck in local minima during optimization is a common issue with energy-based models, which can hinder their performance.


Alternatives

If you're considering using EBMs, you might want to explore other options. EBMs compete with techniques such as variational autoencoders (VAEs), generative adversarial networks (GANs) or normalizing flows.

These alternatives can be useful for specific tasks, but they each have their own strengths and weaknesses.

VAEs are particularly good at learning probability distributions, while GANs excel at generating realistic data samples.

Implementation and Tools

Implementing an energy-based model requires a deep understanding of the underlying math, but with the right tools, it can be a manageable task.

PyTorch is a popular deep learning framework that supports the implementation of energy-based models, thanks to its flexible tensor computation and automatic differentiation capabilities.

To get started, you'll need to define the energy function, which can be done using PyTorch's built-in tensor operations.

In practice, this involves specifying the energy function's parameters and using PyTorch's autograd system to compute gradients.


TensorBoard

TensorBoard can help us understand the training dynamic and spot potential issues. It's a valuable tool for monitoring the training process.

In TensorBoard, we can see that the contrastive divergence and regularization losses converge quickly to 0. This is a good sign, but it doesn't necessarily mean the training is complete.

Training keeps making progress even when the loss is close to zero, which can be confusing. This happens because our "training" data changes with the model: the fake samples are redrawn from the current model at every step.

Training progress is best measured by inspecting the samples across iterations and by the score assigned to random images, which should decrease steadily over time.

Sampling Buffer

The sampling buffer is a training trick that significantly reduces the sampling cost. It involves storing the samples of the last couple of batches in a buffer and reusing those as the starting point of the MCMC algorithm for the next batches.

Starting the MCMC algorithm from these stored samples reduces the sampling cost, because the model then needs significantly fewer steps to converge to reasonable samples.

We re-initialize 5% of our samples from scratch, using random noise between -1 and 1. The remaining 95% are randomly picked from our buffer. This allows novel samples as well as reusing previous samples.

The buffer is used in the function sample_new_exmps, which returns a new batch of "fake" images. These fake images have been generated but are not actually part of the dataset.
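A plain-Python sketch of the buffer logic described above. The class and method names are hypothetical (loosely modeled on sample_new_exmps), samples are flattened lists of floats, max_len is an assumed cap on the buffer size, and refine stands in for the MCMC step so that a real sampler can be plugged in.

```python
import random


class SampleBuffer:
    """Hypothetical sampling buffer that reuses recent MCMC samples."""

    def __init__(self, dim, sample_size, max_len=8192, rng=None):
        self.dim = dim                  # values per (flattened) sample
        self.sample_size = sample_size  # samples per batch
        self.max_len = max_len          # assumed cap on stored samples
        self.rng = rng or random.Random()
        # Start with one batch of uniform noise in [-1, 1].
        self.examples = [self._noise() for _ in range(sample_size)]

    def _noise(self):
        return [self.rng.uniform(-1.0, 1.0) for _ in range(self.dim)]

    def sample_new_examples(self, refine):
        """Return a batch of "fake" samples; refine(batch) runs the MCMC."""
        # Each slot restarts from noise with probability 5% ...
        n_new = sum(self.rng.random() < 0.05 for _ in range(self.sample_size))
        fresh = [self._noise() for _ in range(n_new)]
        # ... and the remaining slots continue from stored samples.
        reused = self.rng.choices(self.examples, k=self.sample_size - n_new)
        batch = refine(fresh + reused)
        # Newest samples go to the front; the oldest are dropped past max_len.
        self.examples = (batch + self.examples)[: self.max_len]
        return batch


def no_mcmc(batch):
    """Stand-in for the MCMC sampler: returns copies of the inputs unchanged."""
    return [list(sample) for sample in batch]


buffer = SampleBuffer(dim=4, sample_size=16, max_len=64, rng=random.Random(0))
batch = buffer.sample_new_examples(no_mcmc)
print(len(batch), len(buffer.examples))  # 16 32
```

Putting the newest samples at the front and truncating keeps the buffer a rolling window over recent batches, so stale samples from a much earlier model eventually disappear.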

In the function generate_samples, we implemented the MCMC for images. The hyperparameters of step_size, steps, and the noise standard deviation σ are specifically set for MNIST, and need to be fine-tuned for a different dataset.

The MCMC algorithm is run for 60 iterations to improve the image quality and bring the samples closer to the model distribution qθ(x).
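A minimal sketch of this MCMC loop on a hypothetical quadratic energy \(E(\mathbf{x}) = \frac{k}{2}\sum_i (x_i - 0.5)^2\), with values kept in [-1, 1] as for the images. Only steps = 60 comes from the text; step_size, noise_std, and k are illustrative assumptions. As in the text, the noise standard deviation is its own hyperparameter here rather than being tied to the step size as in textbook Langevin dynamics.

```python
import random


def energy_grad(x, target=0.5, k=0.05):
    """Gradient of a hypothetical energy E(x) = k/2 * sum((x_i - target)^2)."""
    return [k * (xi - target) for xi in x]


def clamp(v, lo=-1.0, hi=1.0):
    return max(lo, min(hi, v))


def generate_samples(x, steps=60, step_size=10.0, noise_std=0.005, rng=None):
    """Noisy gradient descent on the energy (Langevin-style MCMC)."""
    rng = rng or random.Random()
    for _ in range(steps):
        # 1) Perturb with small Gaussian noise and keep values in [-1, 1].
        x = [clamp(xi + rng.gauss(0.0, noise_std)) for xi in x]
        # 2) Step downhill on the energy, then clamp again.
        g = energy_grad(x)
        x = [clamp(xi - step_size * gi) for xi, gi in zip(x, g)]
    return x


rng = random.Random(0)
start = [rng.uniform(-1, 1) for _ in range(5)]
samples = generate_samples(start, rng=rng)
```

With a real network, the gradient would come from backpropagating the energy to the input image, and step_size, steps, and noise_std would need the dataset-specific tuning the text mentions.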


Analysis and Results

In the analysis of the energy-based model, we see that it generalized well when evaluated on out-of-distribution datasets, outperforming flow-based and autoregressive models.

The EBM was relatively robust to adversarial perturbations, behaving better than classification models explicitly trained to resist such perturbations.

Experimental results showed that the EBM model generated high-quality images relatively quickly on image datasets such as CIFAR-10 and ImageNet 32x32.

Experimental Results

The Experimental Results of Energy-Based Models (EBMs) are quite impressive. They can generate high-quality images relatively quickly on image datasets such as CIFAR-10 and ImageNet 32x32.

One of the standout features of EBMs is their ability to combine features learned from one type of image for generating other types of images. This is a significant advantage over other models.

EBMs also outperform flow-based and autoregressive models when evaluated on out-of-distribution datasets.

Interestingly, EBMs are relatively robust to adversarial perturbations: under attack, they behave better than classification models that were explicitly trained to resist such attacks.


Instability

Instability is a major concern for energy-based models, particularly when it comes to image generation. Divergence in these models can lead to a high probability of noise images.

The sampling algorithm fails when the model creates many local maxima, causing the energy surface to "diverge" and become useless for MCMC sampling. This results in noise images that obtain minimal probability scores.

A common trick to stabilize the model is to reload stable checkpoints from an earlier epoch. If the model is detected to be diverging, training is stopped, and the model is loaded from the previous epoch where it was still stable.

Sensitive hyperparameters include step_size, steps, and the noise standard deviation in the sampler, as well as the learning rate and feature dimensionality in the CNN model. Careful tuning of these parameters is crucial to prevent model instability.


Conclusion

Energy-based models for generative modeling have their limitations, particularly when it comes to high-dimensional data like images.

Training energy-based models with contrastive divergence and sampling via MCMC is necessary because computing the normalization constant over the whole input space is impractical.

The training process for these models can be unstable, requiring multiple tricks to stabilize it.

Training time for energy-based models is relatively long, as each iteration involves sampling new "fake" images, even with a sampling buffer.

These models are just one approach to generative modeling; other methods include VAEs, GANs, and normalizing flows (NF).

Frequently Asked Questions

Is diffusion an energy-based model?

Diffusion models can be interpreted as energy-based models, where the data distribution is defined by an energy function. This allows for the explicit combination of multiple diffusion models to generate an image.

What is energy in machine learning?

In machine learning, energy refers to a measure of the cost or probability of a given configuration of latent variables and inputs. It's a key concept in inference, where the goal is to find a low-energy configuration or sample from possible configurations according to a Gibbs distribution.

