Generative Adversarial Networks (GANs) are a type of AI that can create new, synthetic data that's similar to real data. This is done by pitting two neural networks against each other in a game of cat and mouse.

The generator network creates fake data, while the discriminator network tries to distinguish between real and fake data. The two networks are trained against each other repeatedly, ideally until the generator creates data that the discriminator can no longer distinguish from real data.

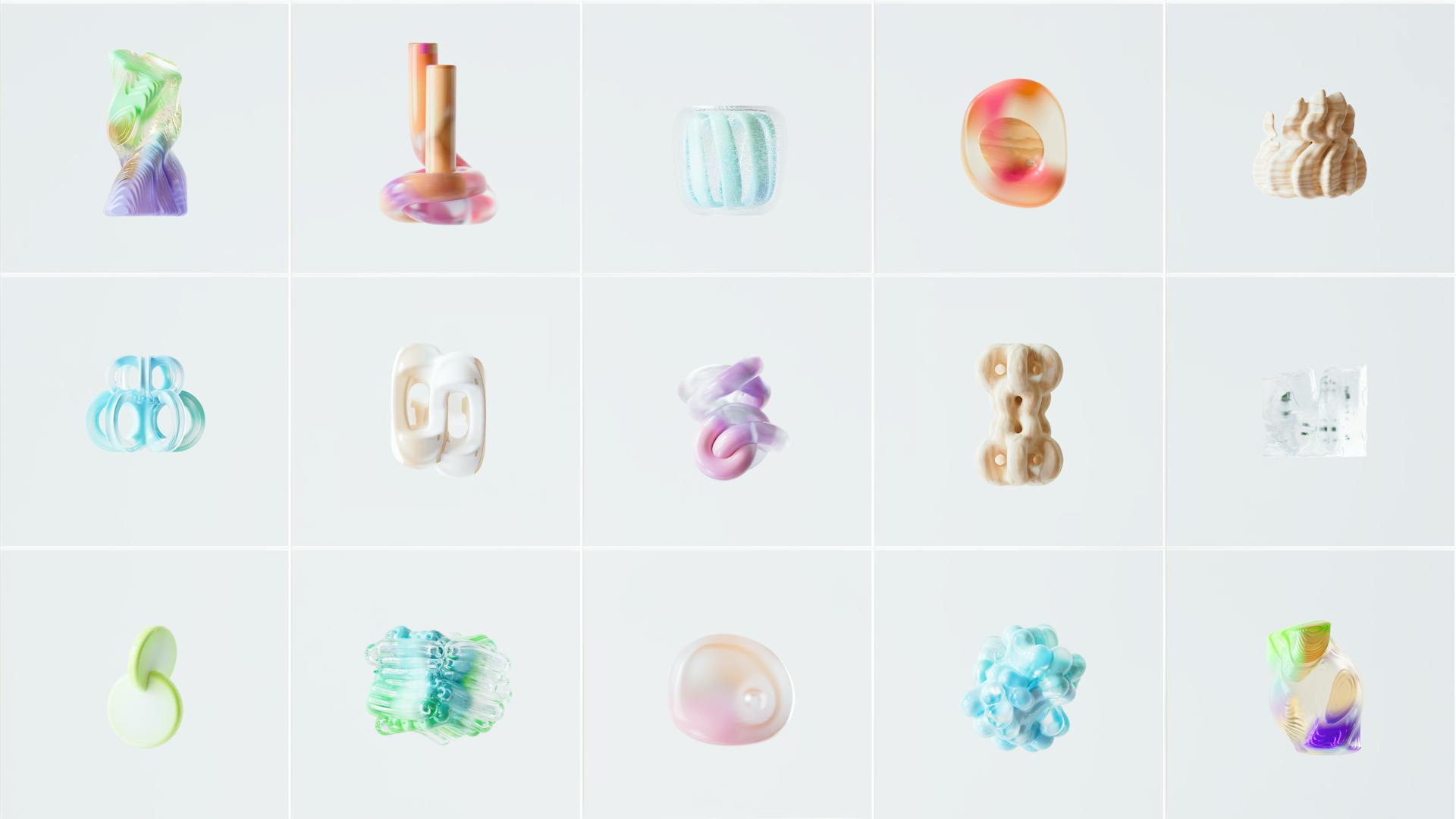

GANs have many potential applications, including image and video generation, data augmentation, and even art. They've been used to generate realistic images of faces, objects, and even entire scenes.

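A frequently cited benefit of GANs is data augmentation: synthetic samples from a trained GAN can supplement a small dataset, which makes GANs useful for tasks where real data is scarce. The GAN itself, however, still needs enough training data to learn the data distribution well.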

What Is a GAN?

A Generative Adversarial Network (GAN) is a type of neural network architecture typically used for unsupervised learning: it learns from unlabeled examples, and once trained it can create new data without being explicitly told what to generate.

GANs are made up of two neural networks: a Generator and a Discriminator. The Generator attempts to produce realistic data, while the Discriminator tries to distinguish between real and fake data.

The training process for GANs is a competition between the Generator and the Discriminator: as the Discriminator gets better at telling real data from fake, the Generator must produce more realistic data to keep fooling it.

The name can be broken down into three parts. "Generative" refers to learning a generative model, which describes how data is generated in terms of a probabilistic model. "Adversarial" refers to setting one network against the other, so that the generated results are compared with the real images in the dataset. "Networks" refers to the deep neural networks used as the generator and the discriminator.

Here are some key characteristics of GANs:

- GANs are a type of generative model based on deep learning.

- They consist of a Generator that produces data and a Discriminator that evaluates its realism.

- The Generator takes a random noise vector as input and generates new data based on that input.

- The Discriminator outputs a value between 0 and 1: close to 1 when it judges the data real and close to 0 when it judges it fake.
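To make the two roles concrete, here is a toy, one-dimensional sketch in plain Python (no deep learning library). The functions `generator` and `discriminator` and their parameter values are hypothetical stand-ins for real networks: the generator maps a random noise sample to a data sample, and the discriminator returns a probability between 0 and 1 rather than a hard 0 or 1.

```python
import math
import random


def generator(z: float, a: float = 1.5, b: float = 3.0) -> float:
    """Hypothetical 1-D generator: an affine map from a noise sample to a data sample."""
    return a * z + b


def discriminator(x: float, w: float = 0.8, c: float = -2.0) -> float:
    """Hypothetical 1-D discriminator: probability in (0, 1) that x is real."""
    s = w * x + c
    # Numerically stable logistic sigmoid.
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


rng = random.Random(42)
noise = [rng.gauss(0.0, 1.0) for _ in range(5)]  # random noise inputs
fakes = [generator(z) for z in noise]             # generated samples
scores = [discriminator(x) for x in fakes]        # realism scores, not hard 0/1 labels
print([round(p, 3) for p in scores])
```

In a real GAN both functions would be neural networks and the noise would be a vector (for example of length 100), but the flow of data is the same.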

GANs have been used in various applications, including image synthesis, style transfer, and text-to-image synthesis, and they have been highly influential in generative modeling.

Mathematical Background

The original GAN is defined as a game between a generator and a discriminator, where the generator's strategy set is the set of all probability measures on the probability space (Ω, μref).

The generator's task is to approach μG ≈ μref, that is, to match its own output distribution μG as closely as possible to the reference distribution μref.

The discriminator's strategy set is the set of Markov kernels μD:Ω → P[0,1], where P[0,1] is the set of probability measures on [0,1].

The discriminator's task is to output a value close to 1 when the input appears to be from the reference distribution, and to output a value close to 0 when the input looks like it came from the generator distribution.

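The GAN game is a zero-sum game with the objective function $L(\mu_G, \mu_D) = \mathbb{E}_{x \sim \mu_{\text{ref}},\, y \sim \mu_D(x)}[\ln y] + \mathbb{E}_{x \sim \mu_G,\, y \sim \mu_D(x)}[\ln(1 - y)]$, that is, the expected logarithm of the discriminator's output on inputs from the reference distribution plus the expected logarithm of one minus its output on inputs from the generator distribution.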

The generator aims to minimize the objective function, while the discriminator aims to maximize it.
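The objective can be estimated from samples by replacing each expectation with an average. The sketch below assumes one-dimensional Gaussian data and treats the discriminator as a plain function returning the probability that its input is real (a deterministic special case of the Markov kernel above); `gan_value` is a hypothetical helper name. A discriminator that outputs 1/2 everywhere scores exactly 2 ln(1/2) = -ln 4 regardless of the data, which is also the value of the game at its equilibrium, while a discriminator that actually separates the two distributions scores higher. That is why the discriminator maximizes L.

```python
import math
import random
from typing import Callable, Sequence


def gan_value(discriminator: Callable[[float], float],
              real_samples: Sequence[float],
              fake_samples: Sequence[float],
              eps: float = 1e-12) -> float:
    """Sample estimate of L = E_real[ln D(x)] + E_fake[ln(1 - D(x))]."""
    def clamp(p: float) -> float:
        # Keep probabilities away from 0 and 1 so both logarithms stay finite.
        return min(max(p, eps), 1.0 - eps)

    real_term = sum(math.log(clamp(discriminator(x))) for x in real_samples) / len(real_samples)
    fake_term = sum(math.log(1.0 - clamp(discriminator(x))) for x in fake_samples) / len(fake_samples)
    return real_term + fake_term


def sigmoid(s: float) -> float:
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


rng = random.Random(0)
real = [rng.gauss(2.0, 1.0) for _ in range(10_000)]   # samples from mu_ref (assumed N(2, 1))
fake = [rng.gauss(-2.0, 1.0) for _ in range(10_000)]  # samples from mu_G (assumed N(-2, 1))

constant_value = gan_value(lambda x: 0.5, real, fake)  # D cannot tell them apart
sigmoid_value = gan_value(sigmoid, real, fake)          # D favors larger x as "real"
print(constant_value, -math.log(4))
print(sigmoid_value)
```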

Training and Evaluation

Training a Generative Adversarial Network (GAN) involves training the generator and discriminator together in a minimax game, usually by alternating their updates. The generator tries to create realistic samples to fool the discriminator, while the discriminator tries to classify real and generated samples correctly.

The evaluation of a GAN can be challenging, but common methods include using a combination of qualitative and quantitative measures. Qualitative measures involve visually inspecting and comparing the generated samples to real ones, while quantitative measures involve using metrics such as the Inception score, Frechet Inception Distance (FID), and Wasserstein distance to evaluate the generated samples' quality numerically.

To train a GAN, you can use a custom training loop, specifying options such as the mini-batch size, number of epochs, and Adam optimization parameters. To better balance the learning of the discriminator and the generator, you can also add noise to the training labels by randomly flipping some of the labels assigned to real images, so that the discriminator is told a fraction of the real images are fake.
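Label flipping takes only a few lines. The sketch below is a plain-Python illustration with a hypothetical helper name, `flip_real_labels`, and an assumed flip probability of 0.1; in practice it would operate on a tensor of target labels for each mini-batch of real images, and the flip probability is a tuning choice.

```python
import random
from typing import List, Sequence


def flip_real_labels(labels: Sequence[int], flip_prob: float,
                     rng: random.Random) -> List[int]:
    """Return a copy of `labels` in which each real label (1) is flipped to 0
    with probability `flip_prob`; fake labels (0) are left unchanged."""
    return [0 if (y == 1 and rng.random() < flip_prob) else y for y in labels]


rng = random.Random(0)
real_labels = [1] * 32                       # targets for one mini-batch of real images
noisy = flip_real_labels(real_labels, flip_prob=0.1, rng=rng)  # assumed flip probability
print(noisy.count(0), "of", len(noisy), "real labels flipped")
```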

Training and Evaluating

Training a Generative Adversarial Network (GAN) is a complex process that requires careful consideration of several factors, starting with how each of its two components, the generator and the discriminator, is trained.

Training the discriminator involves using both real and fake data to teach the binary classifier to distinguish between the two. The discriminator's goal is to correctly identify whether the input is real or fake, and its loss function is typically calculated using binary cross-entropy.

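The generator, on the other hand, is trained to produce synthetic data that can deceive the discriminator. Its loss is computed from the discriminator's outputs on generated samples and is low when the discriminator labels those samples as real. In the original minimax formulation the generator minimizes ln(1 - D(G(z))); in practice it usually minimizes -ln D(G(z)) instead, which gives stronger gradients early in training.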
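Both losses can be written with a single binary cross-entropy helper. The plain-Python sketch below uses hypothetical function names and works on lists of discriminator outputs (probabilities) rather than tensors; the generator loss is the common non-saturating form, which scores generated samples against the "real" label, i.e. minimizes -ln D(G(z)). In PyTorch the same computations would typically use `nn.BCELoss`, or `nn.BCEWithLogitsLoss` on raw scores.

```python
import math
from typing import Sequence

EPS = 1e-12


def bce(p: float, target: int) -> float:
    """Binary cross-entropy for one predicted probability p and a 0/1 target."""
    p = min(max(p, EPS), 1.0 - EPS)  # keep both logarithms finite
    return -(target * math.log(p) + (1 - target) * math.log(1.0 - p))


def discriminator_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """Real samples should be classified as 1 and generated samples as 0."""
    real_loss = sum(bce(p, 1) for p in d_real) / len(d_real)
    fake_loss = sum(bce(p, 0) for p in d_fake) / len(d_fake)
    return real_loss + fake_loss


def generator_loss(d_fake: Sequence[float]) -> float:
    """Non-saturating generator loss: score generated samples against the
    'real' label, i.e. minimize -ln D(G(z))."""
    return sum(bce(p, 1) for p in d_fake) / len(d_fake)


# Discriminator outputs (probabilities of "real") for a tiny batch.
print(discriminator_loss(d_real=[0.9, 0.8], d_fake=[0.1, 0.3]))
print(generator_loss(d_fake=[0.1, 0.3]))  # high: the discriminator is not fooled
```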

During training, the generator and discriminator are updated in alternation in an adversarial process. The generator produces a batch of fake data, which is fed to the discriminator together with real data; the discriminator's loss measures how well it separates the two, and its weights are updated to reduce that loss. The generator's loss is then computed from the discriminator's outputs on the fake data, and the generator's weights are updated to minimize it, that is, to make the discriminator more likely to label the generated samples as real.
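The alternating schedule can be sketched with a deliberately tiny, hypothetical example in plain Python: an affine generator and a logistic-regression discriminator, with gradients worked out by hand instead of by a deep learning framework. The real data distribution N(4, 1), the learning rate, and the initial values are arbitrary assumptions. The point is the order of operations (a discriminator step, then a generator step against the updated discriminator), not the quality of the result: a linear discriminator is far too weak to teach the generator the full data distribution.

```python
import math
import random


def sigmoid(s: float) -> float:
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def safe_log(p: float) -> float:
    return math.log(max(p, 1e-12))


def train_toy_gan(steps: int = 500, batch: int = 64, lr: float = 0.05, seed: int = 0):
    """Alternating GAN updates on a hypothetical 1-D problem.

    Generator G(z) = a*z + b, discriminator D(x) = sigmoid(w*x + c),
    real data ~ N(4, 1). Gradients are derived by hand for these tiny models.
    Returns the final generator parameters and a (d_loss, g_loss) history.
    """
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # generator parameters
    w, c = 0.1, 0.0   # discriminator parameters
    history = []
    for _ in range(steps):
        real = [rng.gauss(4.0, 1.0) for _ in range(batch)]
        z = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * zi + b for zi in z]

        # 1) Discriminator step: minimize -[ln D(real) + ln(1 - D(fake))].
        gw = gc = d_loss = 0.0
        for xr, xf in zip(real, fake):
            pr, pf = sigmoid(w * xr + c), sigmoid(w * xf + c)
            d_loss -= safe_log(pr) + safe_log(1.0 - pf)
            gw += (pr - 1.0) * xr + pf * xf
            gc += (pr - 1.0) + pf
        w -= lr * gw / batch
        c -= lr * gc / batch

        # 2) Generator step against the updated discriminator:
        #    minimize the non-saturating loss -ln D(G(z)).
        ga = gb = g_loss = 0.0
        for zi in z:
            p = sigmoid(w * (a * zi + b) + c)
            g_loss -= safe_log(p)
            ga += (p - 1.0) * w * zi
            gb += (p - 1.0) * w
        a -= lr * ga / batch
        b -= lr * gb / batch

        history.append((d_loss / batch, g_loss / batch))
    return (a, b), history


params, history = train_toy_gan()
print("generator params:", params)
print("last losses:", history[-1])
```

In a real implementation each hand-derived gradient would come from automatic differentiation (for example `loss.backward()` in PyTorch) and each update from an optimizer such as Adam. The recorded `history` is the kind of loss trace worth monitoring during training.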

To monitor the training progress, it's essential to track the loss functions of both the generator and discriminator. This can provide insights into the training process and help identify potential problems, such as mode collapse or poor convergence.

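Some common metrics used to evaluate the performance of a GAN are listed below. They provide a quantitative measure of the quality of the generated samples and help identify areas for improvement:

- Inception Score (IS): runs generated images through a pretrained Inception classifier and rewards predictions that are confident for each image and diverse across images. Higher is better.

- Frechet Inception Distance (FID): compares the mean and covariance of Inception features for real and generated images. Lower is better.

- Wasserstein distance: measures how far the generated distribution is from the real one, roughly the cost of moving probability mass from one to the other. Lower is better.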
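The first two metrics reduce to short formulas once a pretrained network has been applied. The sketch below, in plain Python with hypothetical function names, assumes those network outputs are already available: `inception_score` takes a list of predicted class distributions p(y|x), one per generated image, and `frechet_distance_1d` takes one-dimensional features. Real FID uses the multivariate formula on Inception-v3 features, which requires a matrix square root; the 1-D case shown here is the same formula with scalars.

```python
import math
from statistics import fmean, pvariance
from typing import Sequence


def inception_score(class_probs: Sequence[Sequence[float]]) -> float:
    """IS = exp(mean over samples of KL(p(y|x) || p(y))).

    Each row is a classifier's predicted class distribution p(y|x) for one
    generated image; p(y) is the average of the rows.
    """
    n, k = len(class_probs), len(class_probs[0])
    marginal = [sum(row[j] for row in class_probs) / n for j in range(k)]
    mean_kl = sum(
        sum(p * math.log(p / q) for p, q in zip(row, marginal) if p > 0)
        for row in class_probs
    ) / n
    return math.exp(mean_kl)


def frechet_distance_1d(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Frechet distance between Gaussians fitted to two sets of 1-D features.

    The multivariate version used by FID is
    ||mu_x - mu_y||^2 + Tr(S_x + S_y - 2 (S_x S_y)^(1/2));
    with scalars it reduces to (mu_x - mu_y)^2 + (sigma_x - sigma_y)^2.
    """
    mu_x, mu_y = fmean(xs), fmean(ys)
    sigma_x, sigma_y = math.sqrt(pvariance(xs)), math.sqrt(pvariance(ys))
    return (mu_x - mu_y) ** 2 + (sigma_x - sigma_y) ** 2


# Confident, evenly spread predictions over 2 classes give the maximum IS of 2.
print(inception_score([[1.0, 0.0], [0.0, 1.0]]))
print(frechet_distance_1d([0.0, 2.0], [10.0, 12.0]))  # 100.0
```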

By carefully monitoring the training process and using a combination of qualitative and quantitative metrics, you can train a GAN that produces high-quality, realistic samples.

Loading the Dataset

Loading the dataset is a crucial step in the training and evaluation process. The code creates a CIFAR-10 dataset for training, specifying a root directory where the files are stored.

It also selects the training split, downloads the dataset if needed, and applies the specified transform to the data. It then wraps the dataset in a DataLoader with a batch size of 32.

This DataLoader shuffles the training set of data. This is essential for preventing the model from learning patterns based on the order of the data.
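In PyTorch this step is typically written with `torchvision.datasets.CIFAR10(root=..., train=True, download=True, transform=...)` wrapped in `torch.utils.data.DataLoader(dataset, batch_size=32, shuffle=True)`. The plain-Python sketch below is not that API; `iterate_minibatches` is a hypothetical helper that mimics what shuffling and batching do, so the behavior can be seen without downloading anything.

```python
import random
from typing import Iterator, List, Optional, Sequence, TypeVar

T = TypeVar("T")


def iterate_minibatches(dataset: Sequence[T], batch_size: int = 32,
                        shuffle: bool = True, seed: Optional[int] = None) -> Iterator[List[T]]:
    """Yield mini-batches of up to `batch_size` items.

    With shuffle=True the visiting order is re-drawn on every call (one call
    per epoch), so the model never sees the data in a fixed order. The last
    batch may be smaller, as with DataLoader's default drop_last=False.
    """
    indices = list(range(len(dataset)))
    if shuffle:
        random.Random(seed).shuffle(indices)
    for start in range(0, len(indices), batch_size):
        yield [dataset[i] for i in indices[start:start + batch_size]]


data = list(range(100))  # stand-in for 100 training images
batches = list(iterate_minibatches(data, batch_size=32, seed=0))
print([len(b) for b in batches])  # [32, 32, 32, 4]
```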

Vanishing Gradient

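The vanishing gradient problem is a significant challenge in training generative adversarial networks (GANs). It occurs when the discriminator learns much faster than the generator and can separate real from generated samples almost perfectly. The generator's loss then barely changes whichever small step it takes, so its gradient is close to zero and the generator stops learning.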
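The effect is easy to see numerically. Write the discriminator's output on a generated sample as D = sigmoid(s) for a logit s. With the minimax generator objective, minimizing ln(1 - D), the gradient with respect to s is -D, which vanishes when the discriminator confidently rejects the sample (D close to 0). The non-saturating alternative suggested in the original GAN paper, minimizing -ln D instead, has gradient D - 1, which stays close to -1 in exactly that situation. The sketch below only evaluates these two closed-form derivatives; the helper names are hypothetical.

```python
import math
from typing import Tuple


def sigmoid(s: float) -> float:
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def generator_grads(logit: float) -> Tuple[float, float]:
    """Derivatives of the two generator losses w.r.t. the discriminator logit s,
    where D = sigmoid(s) is the discriminator's output on a generated sample.

    saturating (minimax) loss  ln(1 - D):  d/ds = -D
    non-saturating loss        -ln D:      d/ds = D - 1
    """
    d = sigmoid(logit)
    return -d, d - 1.0


for s in (0.0, -5.0, -10.0):  # increasingly confident rejection of the fake sample
    sat, nonsat = generator_grads(s)
    print(f"D={sigmoid(s):.5f}  saturating={sat:.5f}  non-saturating={nonsat:.5f}")
```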

Convergence is further complicated because, in practice, the generator has access only to a restricted strategy set: measures of the form $\mu_Z \circ G_\theta^{-1}$, where $G_\theta$ is a function computed by a neural network with parameters $\theta$, and $\mu_Z$ is an easily sampled distribution. This restricted set takes up a vanishingly small proportion of the generator's entire strategy set.

The discriminator's strategy set is restricted in the same way. The idealized GAN game has a unique equilibrium point when both players can use their entire strategy sets, but that equilibrium is no longer guaranteed once the strategy sets are restricted, which can make convergence unstable.

To improve convergence stability, some training strategies start with an easier task and gradually increase the difficulty during training, essentially applying a curriculum learning scheme.
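One well-known instance of this idea is progressive growing (as in ProGAN), where training starts at a low image resolution and moves to higher resolutions over time. The hypothetical schedule below captures only that scheduling logic; the resolutions and the number of epochs per stage are assumptions.

```python
from typing import Sequence


def resolution_schedule(epoch: int, epochs_per_stage: int,
                        resolutions: Sequence[int] = (4, 8, 16, 32)) -> int:
    """Hypothetical curriculum: start with the easiest (lowest-resolution) task
    and advance one stage every `epochs_per_stage` epochs, then stay at the last."""
    stage = min(epoch // epochs_per_stage, len(resolutions) - 1)
    return resolutions[stage]


print([resolution_schedule(e, epochs_per_stage=5) for e in range(0, 25, 5)])
# [4, 8, 16, 32, 32]
```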

Variants and Applications

Generative Adversarial Networks (GANs) have been used to upscale low-resolution 2D textures in old video games, recreating them at 4K or higher resolutions after training on images.

Some prominent GAN variants include those that can generate high-resolution images from low-resolution inputs, allowing for improved image quality in various applications.

GANs have been employed in a wide range of applications, including image generation, data augmentation, style transfer, super-resolution, and image-to-image translation.

GANs can modify the style of images, such as converting photos into the style of famous paintings or changing day scenes to night scenes.

Here are some of the widely recognized uses of GANs:

- Image Synthesis and Generation

- Image-to-Image Translation

- Text-to-Image Synthesis

- Data Augmentation

- Data Generation for Training

Variants

There is a veritable zoo of GAN variants.

Some of the most prominent ones include the Conditional GAN, which is used for tasks like image-to-image translation.

The Generative Adversarial Network (GAN) has many different applications, but its variants are what make it so versatile.

A notable variant is the Deep Convolutional GAN (DCGAN), which is particularly useful for generating high-quality images.

GAN variants have been used in a wide range of fields, from art to medicine, and have shown great promise in many areas.

StyleGAN is another variant that has gained popularity, especially for generating high-resolution, photorealistic faces.

Conditional

Conditional GANs are a type of Generative Adversarial Network that allows the generator network to be conditioned on an additional input vector.

This conditioning can generate images corresponding to a specific class, category, or attribute. The architecture of a cGAN is similar to that of a traditional GAN, with a condition vector concatenated with the input noise vector fed into the generator.

The discriminator network is also modified to take in both the generated image and the condition vector as inputs. This allows for greater control over the generation process, as the generator can be conditioned on specific attributes or classes.
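The conditioning step itself is just concatenation. The sketch below, in plain Python with hypothetical helper names, builds a one-hot condition vector for a class label and appends it to both the generator's noise vector and the discriminator's flattened image. In a real cGAN these lists would be tensors (for example joined with `torch.cat`), and some implementations use a learned label embedding instead of a one-hot vector.

```python
import random
from typing import List, Sequence


def one_hot(label: int, num_classes: int) -> List[float]:
    """Condition vector for a class label."""
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec


def generator_input(noise: Sequence[float], label: int, num_classes: int) -> List[float]:
    """cGAN generator input: the noise vector concatenated with the condition vector."""
    return list(noise) + one_hot(label, num_classes)


def discriminator_input(image: Sequence[float], label: int, num_classes: int) -> List[float]:
    """cGAN discriminator input: the (flattened) image plus the same condition vector."""
    return list(image) + one_hot(label, num_classes)


rng = random.Random(0)
z = [rng.gauss(0.0, 1.0) for _ in range(100)]                     # assumed latent_dim = 100
g_in = generator_input(z, label=3, num_classes=10)                # ask for class 3 of 10
d_in = discriminator_input([0.0] * 784, label=3, num_classes=10)  # e.g. a flattened 28x28 image
print(len(g_in), len(d_in))  # 110 794
```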

For example, a conditional GAN can be used to generate images of a specific class, such as cats or dogs. In 2017, a conditional GAN learned to generate 1000 image classes of ImageNet.

Conditional GANs are particularly useful in applications such as image editing or synthesis, where the goal is to generate images with specific properties or characteristics. This can be achieved by conditioning the generator on specific attributes or classes.

Some examples of applications of cGANs include image-to-image translation, where the goal is to generate images of one domain that match the style or content of another domain, and text-to-image synthesis, where the goal is to generate images based on textual descriptions.

Here are some key advantages of cGANs:

- Greater control over the generation process

- Ability to generate images with specific properties or characteristics

- Useful in applications such as image editing or synthesis

- Can be used for image-to-image translation and text-to-image synthesis

Autoencoder

An autoencoder is a type of neural network that can be used for dimensionality reduction and generative modeling.

The idea behind an autoencoder is to train a neural network to learn a compressed representation of the input data, often referred to as a latent vector.

An autoencoder typically consists of an encoder and a decoder: the encoder maps the input data to a lower-dimensional latent vector, and the decoder maps the latent vector back to a reconstruction of the original input.

Autoencoders can be trained to reconstruct the input data with high accuracy, making them useful for tasks such as anomaly detection and data denoising.

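The adversarial autoencoder (AAE) is a variant of the autoencoder that adds a discriminator on the latent space: adversarial training pushes the distribution of encoded latent vectors toward a chosen prior, so that decoding samples drawn from that prior produces realistic data.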

Utility Class

A utility class is a crucial component in building a Generative Adversarial Network (GAN). It helps to define the architecture of the discriminator, which is a critical component of the GAN.

In PyTorch, the discriminator architecture can be defined in a class named Discriminator that inherits from nn.Module, which makes it a PyTorch module.

The discriminator architecture can combine linear, convolutional, batch normalization, dropout, LeakyReLU, and Sequential layers. It is used to determine the validity of an image, i.e., whether it is real or artificial.

The output of the discriminator is a probability value that indicates the likelihood of the input image being real. This probability value is a key component in training the GAN.

A utility class can be used to define different types of discriminators, such as a convolutional discriminator or a linear discriminator. Each type of discriminator has its own architecture and is used for specific tasks.

Here is an example of how a utility class can be used to define a discriminator:

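```python
import torch
import torch.nn as nn


class Discriminator(nn.Module):
    """Convolutional discriminator for 1 x 28 x 28 images (e.g. MNIST).

    Each stride-2 convolution with padding=1 roughly halves the spatial size:
    28 -> 14 -> 7 -> 4, so the final feature map is 256 x 4 x 4.
    """

    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 64, kernel_size=3, stride=2, padding=1)
        self.conv2 = nn.Conv2d(64, 128, kernel_size=3, stride=2, padding=1)
        self.conv3 = nn.Conv2d(128, 256, kernel_size=3, stride=2, padding=1)
        self.fc1 = nn.Linear(256 * 4 * 4, 128)
        self.fc2 = nn.Linear(128, 1)
        self.leaky_relu = nn.LeakyReLU(0.2)
        self.dropout = nn.Dropout(0.3)

    def forward(self, img):
        x = self.leaky_relu(self.conv1(img))
        x = self.leaky_relu(self.conv2(x))
        x = self.leaky_relu(self.conv3(x))
        x = torch.flatten(x, start_dim=1)  # keep the batch dimension
        x = self.dropout(self.leaky_relu(self.fc1(x)))
        # Sigmoid turns the final score into a probability that the image is real
        # (drop it if you train with nn.BCEWithLogitsLoss instead of nn.BCELoss).
        return torch.sigmoid(self.fc2(x))


# Quick shape check on a batch of 8 random "images".
probs = Discriminator()(torch.randn(8, 1, 28, 28))
print(probs.shape)  # torch.Size([8, 1])
```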

This utility class can be used to define different types of discriminators by changing the architecture of the class.

Comparison of Large Language Models

Large Language Models (LLMs) have emerged as a cornerstone in the advancement of artificial intelligence, transforming our interaction with technology and our ability to process and generate human language.

LLMs have been instrumental in improving language understanding and generation capabilities, allowing for more accurate and context-specific responses. This has far-reaching implications for various industries and applications.

Comparing LLMs highlights their varying strengths and weaknesses, with some excelling at specific tasks such as question answering or text summarization.

Implementation and Usage

The implementation of a Generative Adversarial Network (GAN) involves a series of steps that can be tricky to follow, but don't worry, we've got you covered.

The code for a DC-GAN architecture with a generator and discriminator is remarkably simple, implementing a minimax game where the generator creates realistic-looking images and the discriminator tries to distinguish between real and fake images.

Here are the key components of the generator model: dense layers, transposed convolutional layers, batch normalization, and a final layer with a 'tanh' activation function.

The discriminator model consists of convolutional layers followed by dense layers, processing the input images and gradually down-sampling them using LeakyReLU activations and batch normalization.

The loss functions are designed to penalize the discriminator for misclassifying real images and for being fooled by the generator, while the generator is penalized if the discriminator can easily tell its images are fake.

Here are the main functions involved in the training loop:

- train_step: generates fake images, evaluates them with the discriminator, and updates the generator and discriminator parameters.

- train: calls the train_step function for each batch of real images in the dataset, for a specified number of epochs.

- generate_and_save_images: generates images from random noise, reshapes and scales them to visual range, and saves and displays them using Matplotlib.
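Because the generator's final layer uses tanh, its outputs lie in [-1, 1]. A common convention, assumed here, is to scale real training images from [0, 255] into that range and to map generated images back to [0, 255] before displaying them. The two hypothetical helpers below show the linear maps on plain lists of pixel values; with NumPy arrays or tensors the same arithmetic applies element-wise.

```python
from typing import List, Sequence


def to_tanh_range(pixels: Sequence[float]) -> List[float]:
    """Scale pixel values from [0, 255] to [-1, 1] for training."""
    return [p / 127.5 - 1.0 for p in pixels]


def to_pixel_range(values: Sequence[float]) -> List[float]:
    """Map generator outputs from tanh's range [-1, 1] back to [0, 255] for display."""
    return [(v + 1.0) * 127.5 for v in values]


print(to_pixel_range([-1.0, 0.0, 1.0]))  # [0.0, 127.5, 255.0]
print(to_tanh_range([0, 255]))           # [-1.0, 1.0]
```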

Implementation of InfoGAN and DC-GAN

Implementation of InfoGAN and DC-GAN involves understanding the architecture and components of each model.

An InfoGAN game is defined by three probability spaces: the space of reference images, a fixed random noise generator, and a fixed random information generator.

A DC-GAN architecture with a generator and discriminator can be implemented to generate fake images resembling the MNIST dataset digits. The generator learns to create realistic-looking images, while the discriminator learns to distinguish between real and fake images.

The generator model consists of a series of dense and transposed convolutional layers. It starts with a dense layer whose output is reshaped into a 7x7 feature map with 256 channels, which the transposed convolutional layers then upsample into an image.
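The shape arithmetic behind this design can be checked in a few lines. The sketch assumes Keras-style transposed convolutions with padding='same', for which the output height and width equal the input size times the stride, and assumes a stack of three transposed convolutions with strides 1, 2, 2 and 128, 64, 1 filters. That stack is a common choice for 28x28 MNIST digits but is not specified in the text, and `transpose_conv_same` is a hypothetical helper.

```python
from typing import Tuple

Shape = Tuple[int, int, int]  # (height, width, channels)


def transpose_conv_same(shape: Shape, stride: int, filters: int) -> Shape:
    """Output shape of a transposed convolution with padding='same':
    the spatial size is multiplied by the stride, channels become `filters`."""
    height, width, _ = shape
    return height * stride, width * stride, filters


dense_units = 7 * 7 * 256  # dense layer output size, reshaped to (7, 7, 256)
shape: Shape = (7, 7, 256)
for stride, filters in [(1, 128), (2, 64), (2, 1)]:  # assumed layer stack
    shape = transpose_conv_same(shape, stride, filters)
    print(shape)
# (7, 7, 128)
# (14, 14, 64)
# (28, 28, 1)
```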

The discriminator model consists of convolutional layers followed by dense layers, processing the input images and gradually down-sampling them using convolutional layers with LeakyReLU activations and batch normalization.

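The objective function of the InfoGAN game is $L(G, Q, D) = L_{\text{GAN}}(G, D) - \lambda \hat{I}(G, Q)$, which $G$ and $Q$ minimize and $D$ maximizes. Here $L_{\text{GAN}}(G, D)$ is the original GAN game objective, $\lambda$ is a weighting coefficient, and $\hat{I}(G, Q)$ is a variational lower bound on the mutual information between the informative label part $c$ and the generator's output $G(z, c)$.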

The DC-GAN's training loop involves calling the train_step function for each batch of real images in the dataset, for a specified number of epochs. The train_step function generates random noise, passes it through the generator to produce fake images, and updates the generator and discriminator parameters using the Adam optimizer.

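Here is a summary of the components involved in the DC-GAN architecture:

- Generator: a dense layer reshaped into a feature map, transposed convolutional layers with batch normalization, and a final layer with a 'tanh' activation

- Discriminator: convolutional layers with LeakyReLU activations and batch normalization, followed by dense layers

- Loss functions: penalize the discriminator for misclassifying real images or being fooled by generated ones, and penalize the generator when its images are easily recognized as fake

- Optimizer: Adam, used in train_step to update both the generator and the discriminator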

Importing Libraries

To get started with implementing a Generative Adversarial Network (GAN) in PyTorch, you'll need to import the required libraries. This includes importing torch, torch.nn, torch.optim, and torchvision.

The torch library is the foundation of PyTorch, providing a dynamic computation graph and automatic differentiation.

For the CIFAR-10 image dataset, you'll also need to import torchvision.datasets, torchvision.transforms, and torch.utils.data.

Parameters for Later Use

In the implementation of a Generative Adversarial Network (GAN), it's essential to define parameters that will be used in later processes.

Here's a summary of the key parameters to keep in mind:

- latent_dim: the dimensionality of the latent space, i.e. the length of the random noise vector fed to the generator.

- lr: the learning rate of the optimizer.

- beta1 and beta2: the coefficients of the Adam optimizer, which control the decay rates of its running averages of the gradient and the squared gradient.

- num_epochs: the total number of training epochs, which affects the overall training time and model performance.
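Collecting these values in one place makes them easy to reuse later. The sketch below is a plain-Python dataclass with a hypothetical name, `GANConfig`; its default values (latent_dim=100, lr=0.0002, beta1=0.5, beta2=0.999, num_epochs=10) are common DCGAN-style choices assumed here, not values given in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GANConfig:
    """Hyperparameters reused throughout training (hypothetical defaults)."""
    latent_dim: int = 100    # length of the generator's noise vector
    lr: float = 0.0002       # optimizer learning rate
    beta1: float = 0.5       # Adam decay rate for the running mean of gradients
    beta2: float = 0.999     # Adam decay rate for the running mean of squared gradients
    num_epochs: int = 10     # full passes over the training set

    def __post_init__(self) -> None:
        # Reject obviously invalid settings early.
        if self.latent_dim <= 0 or self.num_epochs <= 0 or self.lr <= 0:
            raise ValueError("latent_dim, num_epochs and lr must be positive")
        if not (0.0 <= self.beta1 < 1.0 and 0.0 <= self.beta2 < 1.0):
            raise ValueError("Adam betas must lie in [0, 1)")


config = GANConfig()
print(config)
```

With PyTorch, an optimizer could then be created as `torch.optim.Adam(model.parameters(), lr=config.lr, betas=(config.beta1, config.beta2))`, where `model` stands for either the generator or the discriminator.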

A Utility Class

A Utility Class is a crucial part of building a GAN, and it's essential to define it correctly. The Generator class in PyTorch inherits from nn.Module and consists of a sequential model with various layers.

The Generator class is used to synthesize an image from a latent vector, which is the generator's input. The architecture of the Generator class uses a series of learned transformations to turn the initial random noise in the latent space into a meaningful image.

A key aspect of the Generator class is its ability to take a latent vector and produce an image. The class is designed to be flexible and can be modified to suit the specific needs of your project.

The Discriminator class, on the other hand, is used to determine the validity of an image. It can combine linear, batch normalization, dropout, convolutional, LeakyReLU, and Sequential layers, and it also inherits from nn.Module. Its job is to distinguish real images from artificial ones.

Here are some common layers used in the Generator and Discriminator classes:

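- Tanh layer: the usual output activation of the generator, which maps values to [-1, 1]

- Linear layer: used for fully connected layers

- Convolutional layer: used to extract features from images

- Batch normalization layer: used to normalize activations and stabilize training

- Reshaping layer: used to turn flat vectors into image-shaped feature maps

- Upsampling layer: used to increase the spatial resolution of feature maps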

- LeakyReLU layer: used for activation

By using a utility class, you can easily build and modify the Generator and Discriminator classes to suit your project's needs. This approach also helps to keep your code organized and maintainable.

Advantages and Disadvantages

GANs have some amazing advantages that make them super useful. They can generate new, synthetic data that resembles some known data distribution, which can be useful for data augmentation, anomaly detection, or creative applications.

One of the biggest advantages of GANs is their ability to produce high-quality, photorealistic results in image synthesis, video synthesis, music synthesis, and other tasks. This is especially useful in applications where realistic results are crucial.

Here are some of the key advantages of GANs:

- Synthetic data generation

- High-quality results

- Unsupervised learning

- Versatility

However, GANs also have some significant disadvantages that need to be considered. For example, they can be difficult to train, with the risk of instability, mode collapse, or failure to converge.

Advantages

GANs have several advantages that make them a powerful tool in various applications.

GANs can generate new, synthetic data that resembles some known data distribution, which can be useful for data augmentation, anomaly detection, or creative applications.

One of the key benefits of GANs is their ability to produce high-quality, photorealistic results in image synthesis, video synthesis, music synthesis, and other tasks.

GANs can also be trained without labeled data, making them suitable for unsupervised learning tasks, where labeled data is scarce or difficult to obtain.

GANs are versatile and can be applied to a wide range of tasks, including image synthesis, text-to-image synthesis, image-to-image translation, anomaly detection, data augmentation, and others.

Disadvantages

GANs can be finicky to train, with a risk of instability, mode collapse, or failure to converge. This can be frustrating for developers, especially when working with high-stakes applications.

Training GANs requires significant computational resources and can be slow, especially for high-resolution images or large datasets. This can be a major bottleneck in many projects.

Overfitting is another issue with GANs: a GAN may memorize its training data and produce synthetic samples that are too similar to it, lacking diversity. Such samples add little beyond the original data.

GANs can reflect the biases and unfairness present in the training data, leading to discriminatory or biased synthetic data. This is a major concern, especially in applications where fairness and transparency are crucial.

Here are some of the key disadvantages of GANs:

- Training Instability

- Computational Cost

- Overfitting

- Bias and Fairness

- Interpretability and Accountability

Future of Generative Adversarial Networks

Generative Adversarial Networks (GANs) have already shown their potential in various applications, including image and video synthesis, natural language processing, and drug discovery, and they are likely to find more applications in the future.

GANs can generate realistic 3D models of objects and environments for use in virtual and augmented reality applications, which could lead to more immersive and lifelike experiences in fields like gaming and architectural design.

Fashion and design could also benefit from GANs, which can create new designs and patterns for clothing, accessories, and home decor. This could make design processes more creative and efficient and enable personalized products based on individual preferences.

In healthcare, GANs can help improve disease diagnosis and treatment by analyzing and synthesizing medical images. They can also assist drug discovery and development by generating new molecules with desired properties.

Here are some potential future applications of GANs:

- Virtual reality and augmented reality

- Fashion and design

- Healthcare

- Robotics

- Marketing and advertising

- Art and music

As GANs continue to improve, their applications are likely to expand, opening new opportunities in data generation and other fields.

Frequently Asked Questions

Are GANs still relevant in 2024?

Yes, GANs remain highly relevant in 2024, with ongoing improvements in stability and applications across various industries. Their innovative capabilities continue to drive advancements in fields like image generation and data augmentation.

Does Gen AI use GANs?

Some generative AI systems do, but not exclusively. GANs were among the most popular generative AI algorithms until recently, but many current generative AI systems rely on diffusion-based models and transformers instead.

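Is a GAN better than a CNN?

Not in general, because they do different jobs. A CNN (convolutional neural network) is an architecture that excels at recognizing patterns in existing data such as images, while a GAN is a framework for generating new data that follows a learned pattern. The two are often combined: in a DC-GAN, both the generator and the discriminator are CNNs. If you're looking to create realistic images, a GAN might be the better choice.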

Featured Images: pexels.com