As a developer, you're likely no stranger to the concept of a model lifecycle. However, generative AI models have their own unique characteristics that set them apart from traditional machine learning models.

A generative AI model lifecycle typically begins with the data preparation phase, where you gather and preprocess the data that will be used to train the model. This phase is crucial, as it lays the foundation for the model's performance and accuracy.

In the training phase, the model's parameters are fitted to the prepared data. This is where the model learns to generate new, synthetic data that resembles the original data. Reported quality varies widely by task and metric: one unnamed study puts accuracy at up to 90% in certain applications.

Once the model is trained, it's time to evaluate its performance and make any necessary adjustments. This phase is critical in determining the model's effectiveness and identifying areas for improvement.

Project Lifecycle

The project lifecycle of a generative AI model is complex and iterative. Rather than a linear sequence of steps, it is a cycle that requires balancing business objectives, technical constraints, and ethical considerations.

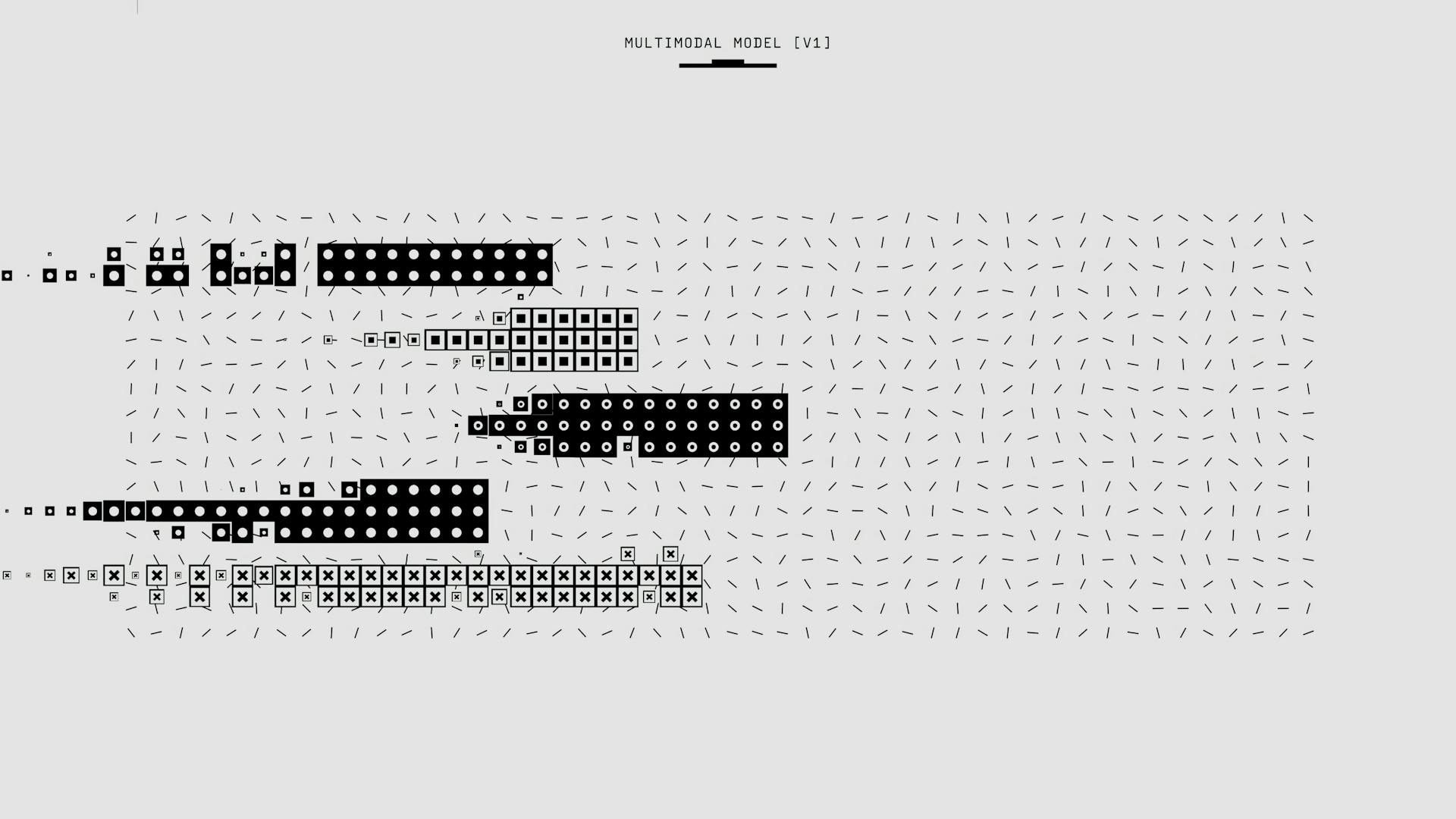

The lifecycle is structured into four main stages: Scope, Select, Adapt and Align Model, and Application Integration. Each stage involves a distinct set of activities, from high-level strategic planning to detailed technical adjustments.

Here are the four stages of the generative AI project lifecycle:

- Scope: Define the use case and set clear objectives.

- Select: Choose the right model that aligns with your needs or train your own.

- Adapt and Align Model: Refine, fine-tune, and align the model to the specific requirements.

- Application Integration: Deploy and integrate the model into real-world applications.

Defining the Aim

Defining the aim of your generative AI project is crucial to its success. A genAI model is built to address a specific business need, such as automating customer support or producing high-resolution images for T-shirts.

To avoid getting lost in the sea of opportunities, start from the problem you want to solve. This will help you keep your focus and ensure that your project stays on track.

The goal of defining the aim is to identify a high-impact, feasible use case that aligns with your organization’s strategic goals. This involves thinking about the areas where generative AI can add value, the data you have available, and how you can put it all together.

Here are the key steps to define the aim of your project:

- Identify the problem you want to solve

- Think about the areas where generative AI can add value

- Consider the data you have available

- Align your project with your organization’s strategic goals

By following these steps, you can ensure that your project is well-defined and focused on delivering value to your organization.

What Is a Generative AI Model?

A generative AI model is a type of artificial intelligence that can create a wide variety of data, including images, videos, audio, text, and 3D models. It does this by learning patterns from existing data.

This creative ability is a result of training generative AI models on vast collections of existing data, something that has become possible only in the last decade.

Generative AI models have a rich and evolving history, marked by significant advancements in machine learning and artificial intelligence research.

They started as basic probabilistic models like Markov Chains back in the mid-20th century.

Today's methods for building generative AI models, together with modern model architectures, have driven significant advances in the field.

Introduction

The project lifecycle is a crucial aspect of software development, and it's essential to understand how generative AI can enhance it. The market for generative AI in coding was valued at USD 19.13 million in 2022.

In the coding market, generative AI is expected to exhibit robust growth, with a compound annual growth rate (CAGR) of 25.2% from 2023 to 2030. This growth is driven by the ability of generative AI to automate and enhance various aspects of coding.

Generative AI can help manage intricate software development life cycles (SDLCs) in applications related to machine learning, deep learning, and data analysis. By automating traditional SDLC tasks, it reduces the need for manual involvement, resulting in shorter development cycles and increased productivity.

Building the Model

Training a generative AI model means feeding the collected data into it and evaluating the results against task-specific metrics. The process demands a lot of computing power, time, and energy.

Choosing the right model architecture is crucial, as it depends on the problem domain and dataset. Common architectures include Variational Autoencoders (VAEs), Generative Adversarial Networks (GANs), and Autoregressive models.
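
To make the term "autoregressive" concrete, here is a minimal sketch of the idea: each new element is sampled conditioned on what came before. It is a toy character-level bigram model, not a VAE, a GAN, or a production language model, and the function names `fit_bigram` and `generate` are invented for this illustration.

```python
import random
from collections import defaultdict


def fit_bigram(text: str) -> dict[str, list[str]]:
    """Record, for every character, the characters that followed it in the data."""
    followers: dict[str, list[str]] = defaultdict(list)
    for current, nxt in zip(text, text[1:]):
        followers[current].append(nxt)
    return followers


def generate(followers: dict[str, list[str]], start: str, length: int, seed: int = 0) -> str:
    """Sample one character at a time, each conditioned on the previous one."""
    rng = random.Random(seed)
    output = start
    # Stop early if the last character was never followed by anything in training.
    while len(output) < length and followers.get(output[-1]):
        output += rng.choice(followers[output[-1]])
    return output


model = fit_bigram("the model learns the pattern of the data")
print(generate(model, start="t", length=20))
```

Real autoregressive models condition on a much longer context and learn the distribution with a neural network, but the generation loop has the same shape.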

Choosing a Pre-Trained Model

Choosing a pre-trained model can be a daunting task, but it's a crucial step in building your model. Pre-trained models like OpenAI's GPT, Google's T5, and Meta's LLaMA are excellent starting points for common use cases such as text generation, summarization, and chatbots.

These models need little additional data and fine-tuning to achieve good results, making them well suited to customer support chatbots, creative content generation, and knowledge-based Q&A systems. Fine-tuned on your own data, they can also handle use cases that involve proprietary data or specialized context, such as legal, medical, or financial domains.

Here are some popular pre-trained models to consider:

For more advanced tasks, consider pairing the model with a retrieval mechanism such as retrieval-augmented generation (RAG), which lets it access and incorporate external data sources.
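
As a rough illustration of the retrieval idea, the sketch below picks the most relevant passage from a small in-memory list and places it in the prompt before the question. Everything here is an assumption made for the example: the `DOCUMENTS` list, the word-overlap scoring, and the function names. A real system would typically use embeddings and a vector index, and would then send the prompt to a model.

```python
import re

# Hypothetical in-memory "knowledge base"; a real system would use a
# document store or vector index instead.
DOCUMENTS = [
    "Refunds are processed within 5 business days of approval.",
    "Premium support is available on weekdays from 9am to 5pm.",
    "Orders can be cancelled any time before they ship.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Rank documents by word overlap with the query and return the best top_k."""
    query_tokens = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_tokens & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_rag_prompt(query: str, documents: list[str], top_k: int = 1) -> str:
    """Prepend the retrieved passages to the question before calling a model."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, top_k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


prompt = build_rag_prompt("How long do refunds take?", DOCUMENTS)
print(prompt)
```

Keeping retrieval separate from prompt assembly makes it easy to swap the toy scoring for a proper search backend later.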

Planning and Requirements

Planning and Requirements is a crucial phase in building the model, and it's essential to get it right from the start. This phase involves delineating the project's scope, objectives, and feasibility within the software development life cycle.

Traditional planning and requirement analysis can be problematic due to their linear and sequential processes, leading to misunderstandings and scope changes. Embracing an agile or iterative approach ensures continuous feedback, testing, and adaptation of requirements throughout the entire project lifecycle.

Gen AI can help with planning and requirements by generating, validating, and optimizing requirements based on natural language inputs. It can also identify and resolve inconsistencies, ambiguities, and gaps in the requirements, contributing to overall clarity and coherence.

To facilitate collaboration and communication within stakeholder and developer networks, Gen AI can provide feedback, suggestions, and best practices to enhance the quality and clarity of the requirements and align them with industry standards.

Here are some key strategies for effective planning and requirements:

- Instruction-based prompts can clearly define the task in the input, guiding the model to produce more accurate and contextually relevant outputs.

- Few-shot prompts can provide a few examples within the input to guide the model, while zero-shot prompts use descriptive language to specify the task when no examples are provided.

- Agile or iterative approaches can ensure continuous feedback, testing, and adaptation of requirements throughout the project lifecycle.
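
The difference between zero-shot and few-shot prompts is easiest to see side by side. The sketch below builds both as plain strings; the `Input:`/`Output:` layout, the function names, and the sample requirements are illustrative choices, not a format any particular model requires.

```python
def zero_shot_prompt(instruction: str, text: str) -> str:
    """Describe the task in words only; no worked examples are included."""
    return f"{instruction}\n\nInput: {text}\nOutput:"


def few_shot_prompt(instruction: str, examples: list[tuple[str, str]], text: str) -> str:
    """Show a few input/output pairs before the real input."""
    shots = "\n\n".join(f"Input: {src}\nOutput: {dst}" for src, dst in examples)
    return f"{instruction}\n\n{shots}\n\nInput: {text}\nOutput:"


instruction = "Rewrite the requirement as a single testable statement."
examples = [
    ("The app should be fast.", "Pages load in under 2 seconds on a 4G connection."),
    ("Users can log in easily.", "Users can sign in with email and password in one step."),
]

print(zero_shot_prompt(instruction, "The system must be secure."))
print(few_shot_prompt(instruction, examples, "The system must be secure."))
```

Both prompts start with the same instruction, so you can compare outputs and add examples only where the zero-shot version falls short.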

Data Collection and Preprocessing

Collecting and preprocessing data is a crucial step in building a generative AI model. Training on massive and diverse datasets is key to successful development.

Data provenance and quality can be difficult to track, even in commercially available third-party datasets. This may lead to risks for the GenAI model, including data poisoning and copyright infringement.

A large and diverse dataset is essential for training a robust GenAI model. Unfortunately, it's not always possible to ensure the quality and integrity of the data.

Data poisoning can occur when malicious data is introduced into the training dataset, compromising the model's performance. This highlights the importance of carefully curating and preprocessing the data.

Preprocessing data involves cleaning, transforming, and formatting the data to prepare it for training. This step is critical in ensuring the model receives high-quality data.
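
A minimal sketch of the cleaning and formatting step for text data might look like the following. The specific rules (Unicode normalization, stripping HTML-like tags, a minimum length, case-insensitive de-duplication) are assumptions chosen for illustration; this kind of basic hygiene does not by itself detect poisoned or copyrighted data.

```python
import re
import unicodedata


def clean_text(raw: str) -> str:
    """Normalize Unicode, drop HTML-like tags, and collapse whitespace."""
    text = unicodedata.normalize("NFKC", raw)
    text = re.sub(r"<[^>]+>", " ", text)
    return re.sub(r"\s+", " ", text).strip()


def preprocess(records: list[str], min_chars: int = 10) -> list[str]:
    """Clean each record, then drop very short entries and case-insensitive duplicates."""
    seen: set[str] = set()
    cleaned: list[str] = []
    for raw in records:
        text = clean_text(raw)
        key = text.lower()
        if len(text) < min_chars or key in seen:
            continue
        seen.add(key)
        cleaned.append(text)
    return cleaned


raw_records = [
    "<p>Generative models   learn patterns from data.</p>",
    "generative models learn patterns from data.",
    "ok",
]
print(preprocess(raw_records))
```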

Gemini Stable Version

A stable version of a Gemini model is one that doesn't change and continues to be available until its discontinuation date. You can identify the version of a stable model by the three-digit number appended to the model name, such as gemini-1.5-pro-001.

To use the stable version of a Gemini model, append the three-digit version number to the model with a hyphen (-). For example, to specify version one of the stable gemini-1.5-pro model, you would append -001 to the model's name.
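
The naming rule can be expressed as a pair of small helpers. These functions are hypothetical utilities written for this article, not part of any Google SDK; they only build and parse the string.

```python
import re


def stable_model_name(base_model: str, version: int) -> str:
    """Append a zero-padded three-digit version to a base model name."""
    if not 0 < version < 1000:
        raise ValueError("version must fit in three digits")
    return f"{base_model}-{version:03d}"


def split_stable_name(model_name: str) -> tuple[str, int]:
    """Split a name such as 'gemini-1.5-pro-001' into base name and version."""
    match = re.fullmatch(r"(.+)-(\d{3})", model_name)
    if match is None:
        raise ValueError(f"no three-digit version suffix in {model_name!r}")
    return match.group(1), int(match.group(2))


print(stable_model_name("gemini-1.5-pro", 1))
print(split_stable_name("gemini-1.5-pro-001"))
```

Pinning the full versioned name in configuration, rather than the bare model name, keeps behavior from changing underneath a deployed application.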

Google releases stable versions at a regular cadence. You can switch from one stable version to another as long as the other version is still available. When you do this, run your tuning jobs again because there might be prompt, output, and other differences between the versions.

The following table shows the available stable model versions for generally available Gemini models:

Don't use a stable version after its discontinuation date; switch to a newer, available stable version.

Model Evaluation and Refinement

Model evaluation is a crucial step in the generative AI model lifecycle, ensuring the model performs as expected in your use case. Evaluate models based on accuracy and performance, considering whether they achieve acceptable performance on similar tasks, and assess their scalability to handle large datasets and high query volumes.

To evaluate model performance, use sample data or public benchmarks, and consider whether the model requires large amounts of fine-tuning data. You can also check for limitations in model size, response latency, and hardware requirements.

Here are some key metrics to consider when evaluating a model:

- Accuracy and Precision: Does the model predict correctly?

- Robustness: How does the model perform under slight variations in inputs?

- Bias and Fairness: Are there demographic biases in outputs? Are certain groups disadvantaged?

- Toxicity: Is the model generating harmful or offensive content?

- Efficiency: How fast does the model generate responses? What are the computational requirements?

- HHH Metric: Measures if the model is Helpful, Honest, and Harmless — an emerging standard for ethical AI evaluation.
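
Three of the metrics above (accuracy, robustness, and efficiency) can be measured with a very small harness. The sketch below assumes the model is any callable that maps a prompt string to an answer string; `toy_model` is a stand-in, exact-match scoring is a deliberate simplification, and bias, toxicity, and HHH checks need dedicated tooling that is not shown here.

```python
import time
from typing import Callable


def evaluate(model: Callable[[str], str], cases: list[tuple[str, str]]) -> dict[str, float]:
    """Score exact-match accuracy, robustness to a trivial perturbation, and latency."""
    correct = 0
    stable = 0
    started = time.perf_counter()
    for prompt, expected in cases:
        answer = model(prompt)
        correct += answer.strip().lower() == expected.strip().lower()
        # Robustness probe: the same prompt with changed case and extra spaces.
        perturbed = "  " + prompt.upper() + "  "
        stable += model(perturbed) == answer
    elapsed = time.perf_counter() - started
    calls = 2 * len(cases)  # one original and one perturbed call per case
    return {
        "accuracy": correct / len(cases),
        "robustness": stable / len(cases),
        "mean_latency_s": elapsed / calls,
    }


def toy_model(prompt: str) -> str:
    """Stand-in for a real model call; answers one question only when lowercase."""
    return "paris" if "capital of france" in prompt else "unknown"


report = evaluate(toy_model, [("capital of france?", "Paris"), ("capital of peru?", "Lima")])
print(report)
```

Here the toy model scores 0.5 on both accuracy and robustness: it answers one of two questions correctly, and its correct answer changes when the prompt is upper-cased.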

Continuous refinement is also necessary, as user expectations evolve, new edge cases emerge, and the broader environment changes. This can involve retraining on new data, implementing ongoing RLHF cycles, and updating prompt strategies based on feedback.

When to Do It

If a pre-trained model doesn't fit the bill, it's time to consider training your own. You'll want to start by evaluating the quality and quantity of your data, ensuring it's representative of the target domain and includes edge cases.

Data preparation is crucial for training a model from scratch. If your dataset is small, use data augmentation techniques to diversify the training set.

You should also consider the computational resources required for training large models. Training a model requires substantial GPU or TPU resources, so you may want to look into cloud-based solutions like AWS SageMaker or Google Cloud for distributed training.

If you're dealing with a complex task like legal document drafting, you may want to fine-tune a pre-trained model on domain-specific data rather than starting from scratch. This can help reduce training time and improve performance.

To fine-tune a model, you'll need to choose the right hyperparameters, such as learning rate, batch size, and number of epochs, based on your dataset size and compute availability.
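
One way to reason about these hyperparameters together is to compute how many optimizer steps a run will take, since that drives both cost and schedule settings. The sketch below is illustrative: `FineTuneConfig` is a made-up container, the values are placeholders rather than recommendations, and the arithmetic assumes one optimizer step per batch (no gradient accumulation).

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class FineTuneConfig:
    """Illustrative hyperparameters; the values used below are placeholders."""
    learning_rate: float
    batch_size: int
    num_epochs: int

    def steps_per_epoch(self, num_examples: int) -> int:
        """Number of optimizer steps needed to see every example once."""
        return math.ceil(num_examples / self.batch_size)

    def total_steps(self, num_examples: int) -> int:
        """Total optimizer steps across all epochs."""
        return self.steps_per_epoch(num_examples) * self.num_epochs


config = FineTuneConfig(learning_rate=2e-5, batch_size=16, num_epochs=3)
print(config.total_steps(num_examples=5_000))  # 313 steps per epoch * 3 epochs = 939
```

Doubling the batch size roughly halves the step count, which is one reason batch size is usually chosen alongside the available compute rather than in isolation.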

Iterative Refinement

Iterative refinement is an ongoing process that requires continuous evaluation and adaptation of your model. This process is crucial to ensure your model performs as expected in your use case.

As user expectations evolve, new edge cases emerge, and the broader environment changes, your model needs to adapt to these changes. This can involve retraining on new data, implementing ongoing RLHF (Reinforcement Learning from Human Feedback) cycles, and updating prompt strategies based on feedback.

Retraining on new data is a common approach to iterative refinement. For example, if a model designed for financial sentiment analysis starts producing biased outputs due to evolving market terminology, introducing new training data can help improve performance.

Implementing ongoing RLHF cycles is another effective way to refine your model. This involves training the model using feedback from human evaluators, which can help reduce toxic outputs, biases, and unsafe behavior.

Updating prompt strategies based on feedback is also an essential aspect of iterative refinement. By regularly evaluating and refining your model, you can ensure it remains aligned with human values and user expectations.

Here are some key considerations for iterative refinement:

- Retrain on new data to adapt to changing user expectations and edge cases

- Implement ongoing RLHF cycles to reduce toxic outputs and biases

- Update prompt strategies based on feedback to ensure alignment with human values

By following these steps, you can ensure your model remains refined and effective over time.

Model Deployment and Integration

Model deployment and integration are crucial steps in the Generative AI model lifecycle. This stage involves optimizing the model for inference, integrating it with existing systems, building user-facing interfaces, and implementing ongoing monitoring and management strategies.

To deploy a model, you can automate the rollout to streamline and simplify it, and Gen AI tools can themselves assist with parts of the deployment procedure. Automation helps minimize the risk of human error, promotes consistency and accuracy in the workflow, and saves time and resources.

Key considerations for model deployment include ensuring scalability, reliability, and seamless integration into the operational workflow. This requires close collaboration between data scientists, software engineers, and DevOps teams. Some best practices for deployment include automating the deployment process, simplifying the deployment procedure, and providing a secure and reliable service.

Here are some key steps for integrating a model with frontend and backend systems:

- Build RESTful or GraphQL APIs that interface with the model.

- Implement request batching and throttling to handle high traffic.

- Use frameworks like Flask, FastAPI, or Node.js to connect the model with the user interface.

- Ensure real-time communication between frontend (e.g., web or mobile app) and the backend model.

- Implement data encryption and secure access mechanisms.

- Use anonymization and pseudonymization techniques for sensitive data.
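
Of the steps above, request batching and throttling are the easiest to sketch without a web framework. The example below uses a token bucket for throttling and a simple slicing helper for batching; both are generic techniques chosen for illustration, and the class and function names are invented here. In a real service they would sit behind the API layer (for example a Flask or FastAPI route).

```python
import time


class TokenBucket:
    """Allow short bursts up to `capacity`, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: int) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.updated = time.monotonic()

    def allow(self) -> bool:
        """Return True and spend a token if the request may proceed."""
        now = time.monotonic()
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def batched(prompts: list[str], max_batch_size: int) -> list[list[str]]:
    """Group queued prompts so the model can serve several per call."""
    return [prompts[i:i + max_batch_size] for i in range(0, len(prompts), max_batch_size)]


bucket = TokenBucket(rate=1.0, capacity=3)
accepted = [p for p in ["a", "b", "c", "d", "e"] if bucket.allow()]
print(batched(accepted, max_batch_size=2))
```

Requests that are refused by the bucket would normally receive an HTTP 429 response or be queued for a later batch.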

Application Integration

Application Integration is a critical stage in the Generative AI project lifecycle. It's where your customized model transitions from development to a fully deployed, production-ready solution. This stage involves optimizing the model for inference, integrating it with existing systems, building user-facing interfaces, and implementing ongoing monitoring and management strategies.

To integrate your model with existing systems, you'll need to create APIs that interface with the model. These can be RESTful or GraphQL APIs, and they should be designed to handle high traffic and provide a seamless user experience. For example, integrating a product recommendation engine into an e-commerce site might involve creating an API that connects to the model, setting up the backend infrastructure, and designing a user-friendly UI that displays the recommendations on the product pages.

Key steps for integration include building APIs, connecting the frontend and backend, and implementing data encryption and secure access mechanisms. You'll also need to include feedback mechanisms to capture user preferences and improve the model iteratively. For instance, a chatbot interface collects user input and displays model outputs, while a feedback mechanism captures user preferences so that responses improve over time.

Here are some key considerations for integration:

- Model latency in real-time applications can be addressed using model quantization and distillation techniques.

- Ethical and compliance risks can be mitigated by adopting Responsible AI practices, such as RLHF, and bias monitoring tools.

- Integration complexity can be reduced by using modular architectures and well-defined APIs.

- Costs can be managed by choosing cost-effective cloud providers and optimizing models for efficient inference.
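
To show what quantization means in practice, the sketch below applies symmetric 8-bit quantization to a handful of weights in plain Python. It is a simplified illustration under stated assumptions (one shared scale for the whole list, round-to-nearest, a non-empty weight list); production toolkits quantize per tensor or per channel and handle activations as well. Distillation is a separate technique and is not shown.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the integers and the scale."""
    return [q * scale for q in quantized]


weights = [0.82, -1.27, 0.05, 0.0]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(quantized, scale, max_error)
```

Each weight now fits in one byte instead of four, at the cost of a rounding error of at most half the scale per weight.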

Ultimately, successful application integration requires close collaboration between data scientists, software engineers, and DevOps teams to achieve smooth operation and deployment. By following best practices and weighing the considerations above, you can ensure that your Generative AI solution is not only functional but also scalable, reliable, and seamlessly embedded into the operational workflow.

Available Versions

A stable version of a Gemini model is available until its discontinuation date and can be identified by a three-digit number appended to the model name.

You can switch from one stable version to another as long as the other version is still available, but you'll need to re-run your tuning jobs due to potential differences in prompts, output, and other aspects.

The Gemini 1.5 Flash model is available in two stable versions: gemini-1.5-flash-002 and gemini-1.5-flash-001, with release dates of September 24, 2024, and May 24, 2024, respectively.

The Gemini 1.5 Pro model is also available in two stable versions: gemini-1.5-pro-002 and gemini-1.5-pro-001, with release dates of September 24, 2024, and May 24, 2024, respectively.

To specify a stable version of a Gemini model, append the three-digit version number to the model name with a hyphen, like this: gemini-1.5-pro-001.

Here is a list of available stable Gemini model versions:

The text-embedding model is available in two stable versions: text-embedding-005 and text-embedding-004, with release dates of November 18, 2024, and May 14, 2024, respectively.

Sources

- https://medium.com/@sahin.samia/the-complete-guide-to-the-generative-ai-project-lifecycle-from-ideation-to-implementation-365dee86cb96

- https://ai.plainenglish.io/the-lifecycle-of-generative-ai-from-scope-to-application-integration-6d6ec6c7d007

- https://www.velvetech.com/blog/generative-ai-models/

- https://cloud.google.com/vertex-ai/generative-ai/docs/learn/model-versions

- https://www.xenonstack.com/blog/generative-ai-software-development
