Multi-agent reinforcement learning (MARL) is a rapidly growing field that allows multiple agents to learn from each other and their environment.

In MARL, agents can learn to cooperate or compete with each other, leading to more complex and realistic scenarios.

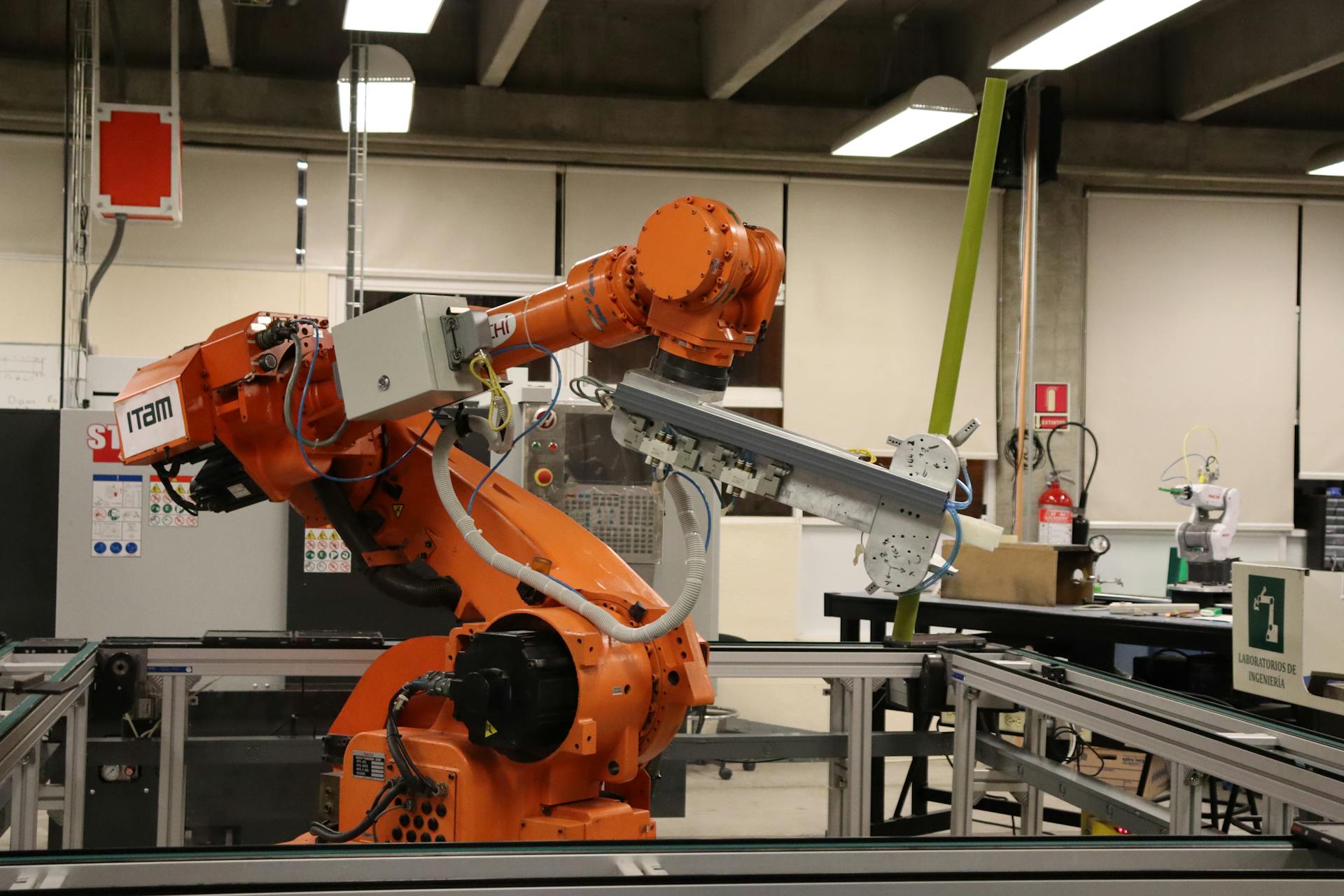

One key application of MARL is in robotics, where multiple robots can learn to work together to accomplish tasks.

For example, in a warehouse setting, robots can learn to pick and place items together, increasing efficiency and productivity.

Another application of MARL is in game theory, where agents can learn to negotiate and make deals with each other.

Definition and Basics

Multi-agent reinforcement learning is modeled as a multi-agent form of a Markov decision process (MDP), which includes a set of environment states $S$ and one set of actions $A_i$ for each agent $i$.

In this model, the probability of transition from state $s$ to state $s'$ under joint action $\vec{a}$ is written $P_{\vec{a}}(s, s')$. This probability is crucial in understanding how the environment responds to the agents' actions.

The immediate joint reward after a transition from $s$ to $s'$ with joint action $\vec{a}$ is denoted by $R_{\vec{a}}(s, s')$. This reward is essential in evaluating the effectiveness of the agents' actions.

A partially observable setting is characterized by each agent accessing an observation that only has part of the information about the current state. In such settings, the core model is the partially observable stochastic game in the general case, and the decentralized POMDP in the cooperative case.

Definition

In multi-agent reinforcement learning, the environment is modeled as a multi-agent form of a Markov decision process (MDP). It comprises a set of environment states, $S$.

A key aspect of the model is the set of actions for each agent. There is one set of actions, $A_i$, for each agent $i$, where $i$ ranges from 1 to $N$, so there are $N$ sets of actions in total.

The probability of transition from one state to another under a joint action is denoted $P_{\vec{a}}(s, s') = \Pr(s_{t+1} = s' \mid s_t = s, \vec{a}_t = \vec{a})$. It is conditioned on the current state $s$ and the joint action $\vec{a}$ taken at time $t$.

The immediate joint reward after a transition from state $s$ to state $s'$ with joint action $\vec{a}$ is denoted as $R_{\vec{a}}(s, s')$. This reward is a crucial part of the MDP model.

Here are the key components of an MDP in multi-agent reinforcement learning:

- S: a set of environment states

- $A_i$: a set of actions for each agent $i$

- $P_{\vec{a}}(s, s')$: the probability of transition from state $s$ to state $s'$ under joint action $\vec{a}$

- $R_{\vec{a}}(s, s')$: the immediate joint reward after transition from state $s$ to state $s'$ with joint action $\vec{a}$
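
The components above can be written down as a small data structure. The following is a minimal sketch, assuming a toy two-state, two-agent game: the class name `MarkovGame`, the state and action names, and the payoffs are all hypothetical and only illustrate how the states, action sets, transition probabilities, and joint rewards fit together.

```python
from dataclasses import dataclass
from itertools import product
from typing import Callable, Dict, List, Tuple

State = str
JointAction = Tuple[str, ...]  # one action per agent


@dataclass
class MarkovGame:
    states: List[State]        # S
    actions: List[List[str]]   # A_i, one list per agent i
    # P_a(s, s'): maps (s, joint action) to a distribution over next states
    transition: Callable[[State, JointAction], Dict[State, float]]
    # R_a(s, s'): immediate joint reward, one entry per agent
    reward: Callable[[State, JointAction, State], Tuple[float, ...]]

    def joint_actions(self) -> List[JointAction]:
        # The joint action space is the product of the per-agent action sets.
        return list(product(*self.actions))


def door_transition(s: State, a: JointAction) -> Dict[State, float]:
    # Hypothetical dynamics: the door opens only if both agents push.
    if s == "closed" and a == ("push", "push"):
        return {"open": 0.9, "closed": 0.1}
    return {s: 1.0}


def door_reward(s: State, a: JointAction, s_next: State) -> Tuple[float, ...]:
    # Both agents receive 1 when the door goes from closed to open.
    r = 1.0 if (s == "closed" and s_next == "open") else 0.0
    return (r, r)


game = MarkovGame(
    states=["closed", "open"],
    actions=[["push", "wait"], ["push", "wait"]],
    transition=door_transition,
    reward=door_reward,
)
```

With two actions per agent, `game.joint_actions()` enumerates four joint actions, and each call to `game.transition(...)` returns a distribution that sums to 1.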

Types of Multi-Agent RL

An autocurriculum (plural: autocurricula) is a reinforcement learning concept that is particularly relevant in multi-agent experiments: as agents improve their performance, they change their environment, and that change affects both themselves and the other agents.

In adversarial settings, autocurricula are especially apparent, such as in the Hide and Seek game, where teams of seekers and hiders adapt their strategies to counter each other.

Autocurricula can be compared to the stages of evolution on Earth, where each stage builds upon the previous one, like photosynthesizing life forms producing oxygen, which allowed for the evolution of oxygen-breathing life forms.

Cooperative multi-agent reinforcement learning (MARL) focuses on agents working together to achieve a common goal while maximizing a shared reward.

Examples of cooperative MARL include multi-robot systems, where each agent's actions contribute to the group's overall success.

Competitive MARL, on the other hand, involves agents trying to maximize their own rewards while minimizing their opponents' rewards, often seen in zero-sum games like chess and Go.

Mixed-interest MARL combines cooperative and competitive dynamics, where agents have partially aligned and partially conflicting goals, often seen in trading, traffic, and multi-player video games.

Centralized training with decentralized execution (CTDE) is a popular approach in MARL, where agents have access to global information during training but act based on local observations during execution.
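
The split between training-time and execution-time information in CTDE can be illustrated with a few lines of code. This is only a sketch under simplifying assumptions: the function names, the linear "policy", and the scoring rule inside the critic are hypothetical, and no learning update is shown.

```python
def local_observation(global_state, agent_id):
    # Each agent sees only its own entry of the global state.
    return global_state[agent_id]


def actor(observation, weight):
    # Decentralized policy: a function of the agent's local observation only.
    return 1 if observation * weight > 0 else 0


def centralized_critic(global_state, joint_action):
    # Training-time evaluator: sees the full state and every agent's action.
    # Hypothetical scoring rule: one point per agent whose action matches
    # the sign of its own state entry.
    return sum(
        1.0 for s, a in zip(global_state, joint_action) if (s > 0) == (a == 1)
    )


global_state = [0.5, -0.3]
weights = [1.0, 1.0]

# Execution: each agent acts from its local observation alone.
joint_action = [
    actor(local_observation(global_state, i), weights[i]) for i in range(2)
]

# Training: the critic scores the joint action using global information.
value = centralized_critic(global_state, joint_action)
```

The point of the design is that `centralized_critic` is only needed while learning; once training ends, each `actor` can be deployed on its own.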

Social Dilemmas and Cooperation

Social dilemmas are a fundamental aspect of multi-agent reinforcement learning. They occur when the interests of multiple agents are misaligned, leading to conflicts and challenges in achieving cooperation.

In game theory, social dilemmas are well-studied, with classic examples like the prisoner's dilemma, chicken, and stag hunt. However, MARL research takes a different approach, focusing on how agents learn to cooperate through trial and error.

One key aspect of social dilemmas is the distinction between cooperation and competition. In pure competition settings, agents' rewards are opposite, while in pure cooperation settings, they are identical. Mixed-sum settings, on the other hand, combine elements of both.

Social dilemmas can be explored using various techniques, including modifying environment rules and adding intrinsic rewards. These methods aim to induce cooperation in agents, but the conflict between individual and group needs remains a subject of active research.

Sequential social dilemmas, introduced in 2017, model more complex scenarios where agents take multiple actions over time. This concept attempts to capture the nuances of human social dilemmas, where cooperation and defection are not always clear-cut.

To tackle large-scale cooperation, researchers are exploring the use of MARL in real-world scenarios, such as robotics and self-driving cars. These applications require agents to coordinate and cooperate to achieve common goals, highlighting the importance of social dilemma research.

Here are some examples of social dilemmas:

- Prisoner's dilemma

- Chicken

- Stag hunt

- Sequential social dilemmas

These examples illustrate the complexities of social dilemmas and the need for effective cooperation mechanisms. By understanding and addressing these challenges, researchers can develop more robust and efficient multi-agent systems.
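
The matrix games named above can be compared side by side in code. The sketch below assumes illustrative textbook-style payoff values (they are not taken from any specific paper) and a hypothetical helper `pure_nash_equilibria` that checks which joint actions are stable against unilateral deviation.

```python
from itertools import product

# Payoffs are (row player, column player).
# Action 0 = cooperate, action 1 = defect (in stag hunt: 0 = stag, 1 = hare).
# The numbers are illustrative values, chosen only to give each game its
# characteristic incentive structure.
GAMES = {
    "prisoners_dilemma": {
        (0, 0): (3, 3), (0, 1): (0, 5), (1, 0): (5, 0), (1, 1): (1, 1),
    },
    "chicken": {
        (0, 0): (3, 3), (0, 1): (1, 5), (1, 0): (5, 1), (1, 1): (0, 0),
    },
    "stag_hunt": {
        (0, 0): (5, 5), (0, 1): (0, 3), (1, 0): (3, 0), (1, 1): (1, 1),
    },
}


def pure_nash_equilibria(payoffs):
    """Return joint actions where neither player gains by deviating alone."""
    equilibria = []
    for a, b in product((0, 1), repeat=2):
        row_ok = payoffs[(a, b)][0] >= payoffs[(1 - a, b)][0]
        col_ok = payoffs[(a, b)][1] >= payoffs[(a, 1 - b)][1]
        if row_ok and col_ok:
            equilibria.append((a, b))
    return equilibria


equilibria = {name: pure_nash_equilibria(p) for name, p in GAMES.items()}
```

With these payoffs, mutual defection is the only pure equilibrium of the prisoner's dilemma even though mutual cooperation pays both players more, which is the tension that makes it a social dilemma. Chicken has two asymmetric equilibria, and stag hunt has both a cooperative and a safe equilibrium.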

Multi-Agent RL Settings

Multi-Agent RL Settings can be categorized into three main types: pure competition, pure cooperation, and mixed-sum settings. In pure competition settings, agents are in direct opposition to each other, with each agent's win coming at the expense of the other.

The Deep Blue and AlphaGo projects demonstrate how to optimize performance in these settings. Even with self-play, autocurricula can still arise, because each improvement in an agent's policy changes the opponent it has to beat and so creates a new layer of learning.

In pure cooperation settings, agents work together towards a common goal, and all agents receive identical rewards. This eliminates social dilemmas, and agents may converge to specific "conventions" when coordinating with each other.

Some examples of pure cooperation settings include recreational cooperative games like Overcooked and real-world scenarios in robotics. Agents in these settings have identical interests and can communicate effectively.

Mixed-sum settings, on the other hand, combine elements of both cooperation and competition. This can lead to communication and social dilemmas, making it a more complex and realistic scenario.

Here's a summary of the different settings:

- Pure Competition: Agents are in direct opposition to each other.

- Pure Cooperation: Agents work together towards a common goal and receive identical rewards.

- Mixed-Sum: Agents have diverging but not exclusive interests, and social dilemmas can occur.
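
For small matrix games, the three settings can be told apart directly from the reward structure. The following sketch assumes a hypothetical helper `classify_setting` and treats "pure competition" as strictly zero-sum, which is a simplification.

```python
def classify_setting(payoffs):
    """Classify a game from its payoffs.

    payoffs: dict mapping each joint action to a tuple of per-agent rewards.
    """
    rewards = list(payoffs.values())
    # Identical rewards for all agents in every outcome: pure cooperation.
    if all(len(set(r)) == 1 for r in rewards):
        return "pure cooperation"
    # Rewards always sum to zero: one agent's gain is another's loss.
    if all(abs(sum(r)) < 1e-9 for r in rewards):
        return "pure competition"
    return "mixed-sum"


matching_pennies = {
    (0, 0): (1, -1), (0, 1): (-1, 1), (1, 0): (-1, 1), (1, 1): (1, -1),
}
coordination_game = {
    (0, 0): (1, 1), (0, 1): (0, 0), (1, 0): (0, 0), (1, 1): (1, 1),
}
prisoners_dilemma = {
    (0, 0): (3, 3), (0, 1): (0, 5), (1, 0): (5, 0), (1, 1): (1, 1),
}
```

Here `matching_pennies` is classified as pure competition, `coordination_game` as pure cooperation, and `prisoners_dilemma` as mixed-sum.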

Pure Competition Settings

In pure competition settings, two agents are locked in a zero-sum game, where one agent's win comes at the other's expense. This is the case in traditional games like chess and Go, as well as two-player variants of modern games like StarCraft.

Each agent is incentivized to win, with no prospect of communication or social dilemmas. This simplicity strips away many complexities, but not all.

Autocurricula remain a complexity in pure competition settings, as agents' policies are improved through self-play, leading to multiple layers of learning.

Mixed-Sum Settings

Mixed-Sum Settings are common in real-world scenarios involving multiple agents, where each agent has both converging and diverging interests. For example, self-driving cars planning their paths have a shared interest in avoiding traffic collisions, but each car also wants to reach its destination as quickly as possible.

In mixed-sum settings, we can explore classic matrix games like the prisoner's dilemma and more complex sequential social dilemmas. We can also use recreational games like Among Us, Diplomacy, and StarCraft II to study these scenarios.

Mixed-sum settings can give rise to communication and social dilemmas, which are crucial to understand in multi-agent environments. These dilemmas can be complex and challenging to resolve, but studying them can help us develop more effective strategies for multi-agent systems.

In the context of multi-agent environments, mixed-sum settings can be used to study the interactions between different agents and groups. For example, in the simple_tag environment, there are two teams of agents: the chasers and the evaders. The chasers are rewarded for touching evaders, while the evaders are penalized for being touched.

Here's a breakdown of the specs that describe such a multi-agent environment:

- Action space: The action space defines the possible actions that agents can take in the environment.

- Reward domain: The reward domain defines the rewards that agents can receive in the environment.

- Done domain: The done domain defines the conditions under which the environment is considered done.

- Observation domain: The observation domain defines the information that agents can observe in the environment.

In the simple_tag environment, the action space is 2D continuous forces that determine the acceleration of agents. The reward domain is based on the interactions between the chasers and the evaders, with the chasers receiving a reward for touching an evader and the evader being penalized for being touched. The done domain is based on the terminated flag, which is set when the episode reaches the maximum number of steps. The observation domain includes the position, velocity, and relative positions of agents and obstacles.
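
The chaser/evader reward structure described above can be sketched in plain Python. This is not the simple_tag implementation: the function name `tag_rewards`, the contact radius, and the reward magnitude are assumptions made for illustration.

```python
import math


def tag_rewards(chasers, evaders, radius=0.1, bonus=10.0):
    """Return (chaser_rewards, evader_rewards) for one step.

    chasers / evaders: lists of (x, y) positions.
    radius and bonus are hypothetical values, not taken from simple_tag.
    """
    chaser_r = [0.0] * len(chasers)
    evader_r = [0.0] * len(evaders)
    for i, c in enumerate(chasers):
        for j, e in enumerate(evaders):
            # A "touch" is modeled as being within `radius` of each other.
            if math.dist(c, e) <= radius:
                chaser_r[i] += bonus   # chaser is rewarded for the touch
                evader_r[j] -= bonus   # evader is penalized for being touched
    return chaser_r, evader_r


chaser_rewards, evader_rewards = tag_rewards(
    chasers=[(0.0, 0.0), (1.0, 1.0)],
    evaders=[(0.05, 0.0)],
)
```

Because the two teams receive opposite-signed rewards for the same event while teammates share an interest, the example shows both the competitive and the cooperative side of the environment.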

Partial Observability

In Multi-Agent settings, agents often don't have complete access to the environment's state or the actions of other agents.

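This introduces uncertainty in decision-making and makes the problem partially observable: a Partially Observable Markov Decision Process (POMDP) from a single agent's point of view, or a decentralized POMDP when cooperating agents each receive their own observation.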

Each agent may only observe part of the environment, which means they need to infer the hidden information and act based on incomplete data.

This adds complexity to policy learning since agents must learn to handle this uncertainty while predicting the behavior of others.
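
A partial observation can be thought of as a filter applied to the global state. The sketch below assumes a hypothetical one-dimensional world in which an agent sees only the agents within a fixed distance of itself; the function name `observe` and the `view_radius` parameter are illustrative.

```python
def observe(global_state, agent_id, view_radius=1):
    """Return the positions agent_id can see in a 1-D world.

    global_state: dict mapping agent name to integer position.
    The agent always sees itself, plus any agent within view_radius.
    """
    own = global_state[agent_id]
    return {
        other: pos
        for other, pos in global_state.items()
        if abs(pos - own) <= view_radius
    }


state = {"a": 0, "b": 1, "c": 5}
obs_a = observe(state, "a")
obs_c = observe(state, "c")
```

Agent `a` sees itself and `b` but has no information about `c`, and agent `c` sees only itself, so each agent has to act on a different and incomplete picture of the same state.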

Algorithms and Techniques

RIAL/DIAL explores the idea of training agents to be prudent in sending messages and learning efficient communication by combining DRQN with independent Q-learning for action and communication selection.

The Action Selector Network and the Communication Network are two key components of RIAL. The Action Selector Network takes the agent's observation and received messages as input and outputs the action to be taken in the environment. The Communication Network determines what message the agent should send to other agents based on its current observation.

DIAL introduces a differentiable communication channel between agents, allowing them to learn how to communicate effectively through backpropagation.

Networked MARL has been explored through various algorithms, including QD-Learning, which uses consensus and innovations updates, and Fully Decentralized Multi-Agent Reinforcement Learning with Networked Agents, in which agents learn without a central controller by exchanging information with their neighbors over a communication network.

Here are some notable algorithms and techniques in Networked MARL:

- QD-Learning: A Collaborative Distributed Strategy for Multi-Agent Reinforcement Learning Through Consensus Innovations

- Fully Decentralized Multi-Agent Reinforcement Learning with Networked Agents

- Value Propagation for Decentralized Networked Deep Multi-agent Reinforcement Learning

- Multi-agent Reinforcement Learning for Networked System Control

- F2A2: Flexible fully-decentralized approximate actor-critic for cooperative multi-agent reinforcement learning

- Scalable Reinforcement Learning of Localized Policies for Multi-Agent Networked Systems

- Finite-Sample Analysis For Decentralized Batch Multi-Agent Reinforcement Learning With Networked Agents

Solving MDPs

Solving MDPs is a crucial step in Reinforcement Learning (RL). In a single-agent MDP, the aim is to maximize cumulative rewards over time.

Most real-life scenarios, however, involve multiple agents interacting in a mixed cooperative/competitive setting, which cannot be solved easily with single-agent RL methods.

In multi-agent scenarios, each agent learns to maximize its own reward while also contributing to the global reward. MARL algorithms of this kind are used, for example, in robotic applications where several robots must choose their actions efficiently.

The credit assignment problem in multi-agent scenarios involves determining the contribution of each agent's action to the overall team goal. This problem becomes particularly complex in cooperative settings where agents must work together to maximize a shared reward.
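
One simple way to make the credit assignment problem concrete is the difference-reward idea: compare the team reward with the reward the team would have received had one agent done nothing. The sketch below uses that technique purely as an illustration (it is not described in this article), and the task, `team_reward`, and `difference_rewards` are hypothetical.

```python
def team_reward(joint_action):
    # Hypothetical task: the team reward is the number of distinct
    # targets covered. None means the agent does nothing.
    return float(len({a for a in joint_action if a is not None}))


def difference_rewards(joint_action):
    """Credit for agent i = team reward minus team reward without agent i."""
    total = team_reward(joint_action)
    credits = []
    for i in range(len(joint_action)):
        without_i = list(joint_action)
        without_i[i] = None  # counterfactual: agent i does nothing
        credits.append(total - team_reward(without_i))
    return credits


# Two agents cover target "A", one agent covers target "B".
credits = difference_rewards(["A", "A", "B"])
```

All three agents receive the same team reward of 2, but the counterfactual shows that only the third agent's action was indispensable: the two agents on target "A" are redundant with each other, so each gets zero credit.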

Multi-Agent Proximal Policy Optimization (MAPPO), an extension of the PPO algorithm to multi-agent scenarios, is one way to address this challenge. MAPPO follows the CTDE approach: agents share information during the learning phase but act independently during execution.

The credit assignment problem is a significant challenge in Multi-Agent RL, and MAPPO addresses it by using a centralized critic that evaluates the global state and provides feedback to each agent's policy. This helps to stabilize learning and mitigate non-stationarity.

The key difference between the two settings is complexity. Single-Agent RL is relatively simple and focuses on maximizing one agent's cumulative reward, whereas Multi-Agent RL is considerably more complex because of agent interactions, non-stationarity, coordination, and communication.

Transforms

Transforms are a powerful tool in TorchRL, allowing us to append any necessary modifications to our environment.

These transforms can modify the input/output of our environment in a desired way, making it easier to work with complex scenarios. They can be especially useful in multi-agent contexts, where it's crucial to provide explicit keys for modification.

In TorchRL, we can use transforms like RewardSum to sum rewards over an episode. For this, we tell the transform where to find the reset keys for each reward key: the episode reward is reset when the "_reset" tensordict key is set, which means that env.reset() was called.
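
The behavior described above, accumulating rewards and starting over when a reset is signaled, can be sketched without any library. The class below is a plain-Python stand-in and not TorchRL's RewardSum; the name `EpisodeRewardSum` and its `step` method are hypothetical.

```python
class EpisodeRewardSum:
    """Accumulate per-step rewards into an episode return.

    The running total starts over whenever `reset=True` is passed,
    mimicking an episode reward that is cleared when a reset flag is set.
    """

    def __init__(self):
        self.episode_reward = 0.0

    def step(self, reward, reset=False):
        if reset:
            # A new episode has started: discard the previous total.
            self.episode_reward = 0.0
        self.episode_reward += reward
        return self.episode_reward


tracker = EpisodeRewardSum()
first = tracker.step(1.0)
second = tracker.step(2.0)
after_reset = tracker.step(0.5, reset=True)
```

In a multi-agent setting one such accumulator would be kept per agent group, which is why the transform needs to know which reset key belongs to which reward key.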

The transformed environment will inherit the device and metadata of the wrapped environment, and transform these depending on the sequence of transforms it contains. This means that we can build a chain of transforms to achieve complex modifications.

The check_env_specs() function is a useful tool that runs a small rollout and compares its output against the environment specs. If no error is raised, we can be confident that the specs are properly defined.

Rollout

A rollout in the context of reinforcement learning runs the environment for a number of steps and records what happens, which makes it a convenient way to see how an environment responds to different actions. It's like taking a snapshot of the environment at each point in time.

You can call env.rollout(n_steps) to get an overview of what the environment inputs and outputs look like. This will give you a batch size of (n_rollout_steps), meaning all the tensors in it will have this leading dimension.

The output of a rollout can be divided into two main parts: the root and the next. The root is accessible by running rollout.exclude("next") and includes all the keys that are available after a reset is called at the first timestep. These keys can be accessed by indexing the n_rollout_steps dimension.

In the next part, you'll find the same structure as the root, but with some minor differences. For example, done and observations will be present in both root and next, while action will only be available in root and reward will only be available in next.

Here's a breakdown of the structure of the rollout output:

- Root: includes all the keys available after a reset, accessible by running rollout.exclude("next")

- Next: has the same structure as the root; done and observations appear in both, action appears only in the root, and reward appears only in next

By understanding the structure of a rollout, you can better grasp how the environment responds to different actions and how your policy is performing.
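
The root/next layout can be mimicked with nested dictionaries. The sketch below is an analogy only: it uses plain Python dicts and lists rather than TorchRL's tensordict, and the helper names `fake_rollout` and `exclude` as well as the flat key names are assumptions.

```python
def fake_rollout(n_steps):
    """Build a dict shaped like the root/next layout described above."""
    root = {
        "observation": list(range(n_steps)),   # what was seen at step t
        "action": [0] * n_steps,                # action appears only in root
        "done": [False] * n_steps,
    }
    root["next"] = {
        "observation": list(range(1, n_steps + 1)),  # what was seen at t + 1
        "reward": [1.0] * n_steps,                    # reward appears only in next
        "done": [False] * (n_steps - 1) + [True],
    }
    return root


def exclude(rollout, key):
    # Return a copy of the top level without the given key.
    return {k: v for k, v in rollout.items() if k != key}


rollout = fake_rollout(5)
root_only = exclude(rollout, "next")
```

Every entry has the number of rollout steps as its leading dimension, `root_only` contains the action but no reward, and `rollout["next"]` contains the reward but no action.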

Training Utilities

In the training process, we often rely on helper functions to simplify the code and make it more manageable.

These helper functions are typically simple and don't contain any complex logic, making them easy to understand and maintain.

They are designed to be used in the training loop, where they can be called upon to perform specific tasks.

The training loop is a crucial part of the training process, and these helper functions play a vital role in making it more efficient.

By breaking down the complex process into smaller, more manageable parts, these helper functions help us avoid errors and improve the overall quality of our code.

Communication and Coordination

Communication and Coordination are crucial aspects of Multi-Agent RL. In MARL, agents need to communicate effectively to achieve a common goal, but communication constraints can hinder this process.

Limited bandwidth, unreliable channels, and partial observability are just a few examples of communication constraints that can impact agent coordination.

TarMAC, a learned communication architecture, focuses on improving efficiency and communication effectiveness among agents. It employs a targeted communication strategy, allowing agents to selectively communicate with specific peers.

Autoencoder-based methods can also be used to ground multi-agent communication in the environment. This involves associating linguistic symbols with entities or concepts in the environment, enabling agents to understand and respond to each other's messages.

The system is modeled with every agent having two modules: a speaker and a listener. The speaker module uses an image encoder to embed the pixels into a low-dimensional feature, which is then used to generate the next message.

In MARL, coordination is essential for achieving a common goal. However, as the number of agents increases, the joint action space grows exponentially, making traditional RL techniques less efficient.
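
The exponential growth is easy to verify with a short calculation. The sketch assumes every agent has the same number of discrete actions; the function name and the choice of five actions per agent are illustrative.

```python
def joint_action_space_size(actions_per_agent, n_agents):
    # Each agent picks independently, so the sizes multiply.
    return actions_per_agent ** n_agents


sizes = {n: joint_action_space_size(5, n) for n in (1, 2, 5, 10)}
```

With five actions each, one agent has 5 choices, two agents have 25 joint actions, five agents have 3,125, and ten agents already have 9,765,625, which is why methods that enumerate joint actions stop scaling quickly.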

Here are some key papers on coordination in MARL:

- ZSC-Eval: An Evaluation Toolkit and Benchmark for Multi-agent Zero-shot Coordination by Xihuai Wang et al.

- Collaborating with Humans without Human Data by DJ Strouse et al.

- Coordinated Multi-Agent Imitation Learning by Le H M et al.

- Reinforcement social learning of coordination in networked cooperative multiagent systems by Hao J et al.

- Coordinating multi-agent reinforcement learning with limited communication by Zhang et al.

- Coordination guided reinforcement learning by Lau Q P et al.

- Coordination in multiagent reinforcement learning: a Bayesian approach by Chalkiadakis et al.

- Coordinated reinforcement learning by Guestrin et al.

- Reinforcement learning of coordination in cooperative multi-agent systems by Kapetanakis et al.

Applications and Limitations

Multi-agent reinforcement learning has been applied to a wide range of fields, from science to industry. One notable example is the use of multi-agent reinforcement learning in broadband cellular networks, such as 5G.

Researchers have also explored its application in content caching and packet routing. For instance, a study on "MuZero with Self-competition for Rate Control in VP9 Video Compression" demonstrated its potential in video compression.

Some of the notable applications of multi-agent reinforcement learning include:

- MuZero with Self-competition for Rate Control in VP9 Video Compression by Amol Mandhane, Anton Zhernov, Maribeth Rauh, Chenjie Gu, et al. arXiv 2022.

- MAgent: A Many-Agent Reinforcement Learning Platform for Artificial Collective Intelligence by Zheng L et al. NIPS 2017 & AAAI 2018 Demo.

- Collaborative Deep Reinforcement Learning for Joint Object Search by Kong X, Xin B, Wang Y, et al. arXiv, 2017.

- Multi-Agent Stochastic Simulation of Occupants for Building Simulation by Chapman J, Siebers P, Darren R. Building Simulation, 2017.

- Safe, Multi-Agent, Reinforcement Learning for Autonomous Driving by Shalev-Shwartz S, Shammah S, Shashua A. arXiv, 2016.

- Applying multi-agent reinforcement learning to watershed management by Mason, Karl, et al. Proceedings of the Adaptive and Learning Agents workshop at AAMAS, 2016.

- Traffic light control by multiagent reinforcement learning systems by Bakker, Bram, et al. Interactive Collaborative Information Systems, 2010.

- Multiagent reinforcement learning for urban traffic control using coordination graphs by Kuyer, Lior, et al. Joint European Conference on Machine Learning and Knowledge Discovery in Databases, 2008.

- A multi-agent Q-learning framework for optimizing stock trading systems by Lee J W, Jangmin O. DEXA, 2002.

- Multi-agent reinforcement learning for traffic light control by Wiering, Marco. ICML. 2000.

Applications

Multi-agent reinforcement learning has been applied to a variety of use cases in science and industry. It's been used to improve broadband cellular networks, such as 5G, for faster and more reliable connections.

One notable application is in content caching, which helps reduce latency and improve user experience. This is especially important for applications that require low-latency, such as online gaming.

Packet routing is another area where multi-agent reinforcement learning has shown promise. By optimizing packet routing, network administrators can reduce congestion and improve overall network efficiency.

Computer vision is also an area where multi-agent reinforcement learning has been successfully applied. Researchers have used it to improve object detection and tracking in complex environments.

Network security is a critical area where multi-agent reinforcement learning can be used to detect and respond to cyber threats. This can help prevent data breaches and protect sensitive information.


Limitations

Multi-agent deep reinforcement learning is a complex field, and like any complex field, it has its limitations. One of the main challenges is that the environment is not stationary, which means the Markov property is violated.

Transitions and rewards no longer depend only on the current state of an agent, because they also depend on what the other, simultaneously learning agents do. This makes outcomes harder to predict and is a key difference from single-agent reinforcement learning, where the environment is assumed to be stationary.
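
Non-stationarity can be seen in a two-player example: the same action in the same state yields a different expected reward once the other agent changes its policy. The sketch below assumes a matching-pennies style payoff (+1 for a match, -1 otherwise); the function name and the probabilities are illustrative.

```python
def expected_reward(my_action, opponent_policy):
    """Expected payoff for the player who wants the two actions to match.

    opponent_policy: probability that the opponent plays action 0.
    """
    p0 = opponent_policy
    match_prob = p0 if my_action == 0 else 1 - p0
    # +1 when the actions match, -1 when they differ.
    return match_prob * 1.0 + (1 - match_prob) * -1.0


early = expected_reward(0, opponent_policy=0.9)  # opponent mostly plays 0
late = expected_reward(0, opponent_policy=0.1)   # opponent has adapted
```

Playing action 0 is worth roughly +0.8 early on and roughly -0.8 after the opponent adapts, even though nothing about the agent's own state or action changed. From the learner's point of view, the environment itself has shifted.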

In multi-agent systems, each agent interacts with the others, creating a dynamic environment. This requires a more sophisticated approach to learning and decision-making.

The field draws on techniques from reinforcement learning, deep learning, and game theory to address these challenges. However, these inherent difficulties are a significant hurdle to overcome.

The field sits at the intersection of the following areas:

- Reinforcement learning

- Multi-agent systems

- Deep learning

- Game theory

Tools and Resources

If you're interested in diving deeper into multi-agent reinforcement learning, there are several excellent resources available.

One of the most comprehensive books on the subject is "Multi-Agent Reinforcement Learning: Foundations and Modern Approaches" by Stefano V. Albrecht, Filippos Christianos, Lukas Schäfer, published in 2023.

For those looking for a more in-depth academic exploration, Yaodong Yang's 2021 PhD Thesis, "Many-agent Reinforcement Learning", is a great place to start.

Jakob N Foerster's 2018 PhD Thesis, "Deep Multi-Agent Reinforcement Learning", offers a detailed look at the application of deep learning in multi-agent systems.

If you're interested in a more general overview of multi-agent systems, Shoham Y and Leyton-Brown K's 2008 book "Multiagent systems: Algorithmic, game-theoretic, and logical foundations" is a great resource.

The following books and theses are worth checking out:

- Multi-Agent Reinforcement Learning: Foundations and Modern Approaches by Stefano V. Albrecht, Filippos Christianos, Lukas Schäfer, 2023.

- Many-agent Reinforcement Learning by Yaodong Yang, 2021. PhD Thesis.

- Deep Multi-Agent Reinforcement Learning by Jakob N Foerster, 2018. PhD Thesis.

- Multi-Agent Machine Learning: A Reinforcement Approach by H. M. Schwartz, 2014.

- Multiagent Reinforcement Learning by Daan Bloembergen, Daniel Hennes, Michael Kaisers, Peter Vrancx. ECML, 2013.

- Multiagent systems: Algorithmic, game-theoretic, and logical foundations by Shoham Y, Leyton-Brown K. Cambridge University Press, 2008.

Research and Future Work

Research in multi-agent reinforcement learning has made significant progress in recent years. Key research papers have explored various approaches, such as Deep Recurrent Q-Learning for Partially Observable MDPs and Independent Learning in the StarCraft Multi-Agent Challenge.

Some notable papers include QMIX: Monotonic Value Function Factorisation for Deep Multi-Agent Reinforcement Learning and Learning to Communicate with Deep Multi-Agent Reinforcement Learning. These papers have demonstrated the effectiveness of different techniques in multi-agent environments.

Researchers have also explored the use of model-based approaches, such as Model-based Multi-agent Reinforcement Learning: Recent Progress and Prospects by Xihuai Wang, Zhicheng Zhang, and Weinan Zhang. Additionally, reviews of the field have been published, such as A Survey and Critique of Multiagent Deep Reinforcement Learning by Pablo Hernandez-Leal, Bilal Kartal, and Matthew E. Taylor.

AI Alignment

AI alignment is a pressing concern in the field of artificial intelligence, where researchers are exploring ways to prevent conflicts between human intentions and AI agent actions.

Multi-agent reinforcement learning has been used to study AI alignment, simulating conflicts between humans and AI agents to identify variables that could prevent these conflicts.

In a MARL setting, the relationship between agents can be compared to the relationship between a human and an AI agent, highlighting the need for careful consideration of AI alignment in AI development.

Research in this area aims to prevent conflicts by changing variables in the system, demonstrating the importance of ongoing research in AI alignment.

Key Research Papers

Research in multi-agent reinforcement learning has led to the development of various key papers that have shaped the field. One such paper is "Deep Recurrent Q-Learning for Partially Observable MDPs" which explores the use of deep recurrent Q-learning for partially observable Markov decision processes.

The paper "Is Independent Learning All You Need in the StarCraft Multi-Agent Challenge?" suggests that independent learning can be a viable approach in multi-agent challenges like the StarCraft challenge. This is a promising finding, as it could simplify the development of multi-agent systems.

Researchers have also found that Proximal Policy Optimization (PPO) can be surprisingly effective in cooperative, multi-agent games, as demonstrated in "The Surprising Effectiveness of PPO in Cooperative, Multi-Agent Games". This is a notable finding, as PPO is a widely used algorithm in single-agent reinforcement learning.

Other key papers in the field include "Multi-Agent Actor-Critic for Mixed Cooperative-Competitive Environments" and "Value-Decomposition Networks For Cooperative Multi-Agent Learning". These papers have contributed to our understanding of multi-agent reinforcement learning and its applications.

Here are some of the key research papers in the field of multi-agent reinforcement learning:

- "Deep Recurrent Q-Learning for Partially Observable MDPs"

- "Is Independent Learning All You Need in the StarCraft Multi-Agent Challenge?"

- "The Surprising Effectiveness of PPO in Cooperative, Multi-Agent Games"

- "Multi-Agent Actor-Critic for Mixed Cooperative-Competitive Environments"

- "Value-Decomposition Networks For Cooperative Multi-Agent Learning"

- "QMIX: Monotonic Value Function Factorisation for Deep Multi-Agent Reinforcement Learning"

- "Learning to Communicate with Deep Multi-Agent Reinforcement Learning"

- "Learning to Schedule Communication in Multi-agent Reinforcement Learning"

- "TarMAC: Targeted Multi-Agent Communication"

- "Learning to Ground Multi-Agent Communication with Autoencoders"

Frequently Asked Questions

How does multi-agent work?

Multi-agent systems work by combining multiple AI agents that collaborate to achieve a common goal, each contributing its own capabilities to a cohesive outcome.

What is an example of a multi-agent system?

A multi-agent system is a complex system that coordinates multiple entities to achieve a common goal. For example, a smart power grid coordinates generators, storage, and consumers to integrate renewable sources and manage electricity distribution across the grid.

Sources

- https://en.wikipedia.org/wiki/Multi-agent_reinforcement_learning

- https://vinaylanka.medium.com/multi-agent-reinforcement-learning-marl-1d55dfff6439

- https://pytorch.org/rl/stable/tutorials/multiagent_competitive_ddpg.html

- https://github.com/LantaoYu/MARL-Papers

- https://towardsdatascience.com/ive-been-thinking-about-multi-agent-reinforcement-learning-marl-and-you-probably-should-be-too-8f1e241606ac
