An RL agent is a type of artificial intelligence that can learn and make decisions on its own. It's a crucial component in many applications, including robotics, finance, and healthcare.

RL agents are trained using reinforcement learning, a type of machine learning where an agent learns by interacting with an environment and receiving feedback in the form of rewards or penalties. The goal is to maximize the cumulative reward over time.

RL agents can be categorized into two main types: on-policy and off-policy. On-policy agents learn only from experience generated by the policy they are currently following, while off-policy agents can also learn from experience generated by a different policy, such as older versions of themselves or another agent's recorded behavior. This distinction is important because it affects how the agent collects and reuses data from the environment.
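
One way to see the on-policy/off-policy difference is to compare the learning targets of two classic tabular algorithms, SARSA (on-policy) and Q-learning (off-policy). The sketch below is only an illustration: the function names, the dictionary of Q-values, and the discount factor `gamma` are assumptions that do not come from the article.

```python
def sarsa_target(reward, next_q_values, next_action, gamma=0.99):
    """On-policy target: bootstraps from the action the current policy actually chose."""
    return reward + gamma * next_q_values[next_action]


def q_learning_target(reward, next_q_values, gamma=0.99):
    """Off-policy target: bootstraps from the best next action, whatever was actually done."""
    return reward + gamma * max(next_q_values.values())


# Hypothetical Q-values for the next state in a Pong-like game
next_q = {"up": 1.0, "down": 3.0, "stay": 2.0}

# The behavior policy happened to pick "up" next (for example while exploring)
on_policy = sarsa_target(reward=0.0, next_q_values=next_q, next_action="up", gamma=0.5)
off_policy = q_learning_target(reward=0.0, next_q_values=next_q, gamma=0.5)
print(on_policy, off_policy)  # prints 0.5 1.5
```

The on-policy target depends on what the agent actually did next, so its data must come from the current policy; the off-policy target does not, which is why off-policy agents can reuse older experience.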

What Is RL Agent

An RL agent is a program that makes decisions about actions to take and receives feedback in the form of rewards or penalties. It's a key component of the reinforcement learning framework.

In simple terms, an RL agent is like a player in a game like Pong, where it learns to make decisions by interacting with the environment and receiving rewards or penalties. The goal of the agent is to maximize the expected amount of reward.

In introductory "RL 101" material, this decision-maker is simply called the "agent", and its main task is to make "intelligent" decisions, meaning decisions that maximize the expected amount of reward.

An RL agent can be thought of as having several key components, including the state, actions, rewards, and goal. The state could be a couple of last frames (images of the screen), the actions could be "move the pad up", "move the pad down", and "don't move at all", the rewards could be +1 if you win the game else -1, and the goal is to become a great player at the game of Pong.

Here are some common problems that RL agents face:

- The sparse rewards problem (±1 only at the end of the episode)

- The credit assignment problem (which actions led to win/loss?)

- The problem of effectively communicating our goals to the agent

- The problem of exploration vs exploitation
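
To make the sparse-reward and credit assignment problems a little more concrete, here is a minimal sketch of discounted returns, one common (and only partial) way to spread a final reward back over earlier actions. The discount factor `gamma` and the function name are assumptions for illustration; the article itself does not specify a discounting scheme.

```python
def discounted_returns(rewards, gamma=0.99):
    """Return G_t = r_t + gamma * r_{t+1} + gamma^2 * r_{t+2} + ... for every step t."""
    returns = [0.0] * len(rewards)
    running = 0.0
    # Walk backwards so each step reuses the return of the step after it
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


# A sparse-reward episode: nothing until a +1 for winning at the very end
episode_rewards = [0.0, 0.0, 0.0, 1.0]
print(discounted_returns(episode_rewards, gamma=0.5))  # prints [0.125, 0.25, 0.5, 1.0]
```

Every action in the episode receives some credit for the win, with actions closer to the outcome receiving more. This does not tell the agent which actions truly mattered, which is why credit assignment remains a hard problem.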

How RL Agent Works

An RL agent works by taking steps to navigate its environment; for a web-browsing agent, one such step might be selecting a tab to open a webpage. A reinforcement learning algorithm drives this behavior by assigning positive values to desired actions and negative values to undesired ones.

The agent's goal is to seek long-term and maximum overall rewards to achieve an optimal solution. This process involves devising a method of rewarding desired actions and punishing negative behaviors.

To prepare for the RL process, you need to define the problem and set clear goals and objectives, including the reward structure. This will help you decide what data you need and what algorithm to select.

Data collection and initialization are crucial steps in the RL process, where you gather initial data, define the environment, and set up necessary parameters for the experiment. You'll also need to preprocess and engineer features from the data.

The RL algorithm selection process involves choosing the appropriate algorithm based on the problem and environment, and configuring core settings, such as the balance of exploration and exploitation. This balance is essential for the agent to learn effectively.
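
A common way to configure the exploration/exploitation balance is an epsilon-greedy rule: with probability `epsilon` the agent tries a random action, otherwise it takes the action it currently believes is best. The sketch below assumes a simple dictionary of action-value estimates; the names are hypothetical and epsilon-greedy is just one of several possible strategies.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick a random action with probability epsilon, else the highest-valued one."""
    if rng.random() < epsilon:
        return rng.choice(list(q_values))      # explore
    return max(q_values, key=q_values.get)     # exploit


# Hypothetical value estimates for three Pong actions
q_values = {"up": 0.2, "down": 0.7, "stay": 0.1}
rng = random.Random(0)

print(epsilon_greedy(q_values, epsilon=0.0, rng=rng))  # always exploits: prints down
print(epsilon_greedy(q_values, epsilon=1.0, rng=rng))  # always explores: any of the three
```

In practice `epsilon` is often started high and reduced over training, so the agent explores widely at first and exploits more as its estimates improve.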

During training, the agent interacts with the environment, takes actions, receives rewards, and updates its policy. You'll need to adjust hyperparameters and repeat the process to ensure the agent learns effectively.
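
The interaction part of this loop can be sketched with a toy environment. `Corridor`, `run_episode`, and both policies are hypothetical names made up for this illustration, and the policy-update step is deliberately left out to keep the sketch short.

```python
import random


class Corridor:
    """Hypothetical toy environment: walk from cell 0 to the rightmost cell."""

    def __init__(self, length=5):
        self.length = length
        self.position = 0

    def reset(self):
        self.position = 0
        return self.position

    def step(self, action):
        # action is -1 (left) or +1 (right); walls clamp the position
        self.position = min(max(self.position + action, 0), self.length - 1)
        done = self.position == self.length - 1
        reward = 1.0 if done else 0.0  # sparse reward: only at the goal
        return self.position, reward, done


def run_episode(env, policy, max_steps=100):
    """Observe state, act, receive reward, repeat until the episode ends."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)
        state, reward, done = env.step(action)
        total_reward += reward
        if done:
            break
    return total_reward


def always_right(state):
    return 1


rng = random.Random(0)


def random_policy(state):
    return rng.choice([-1, 1])


print(run_episode(Corridor(), always_right))   # prints 1.0
print(run_episode(Corridor(), random_policy))  # 1.0 if the goal is reached within 100 steps, else 0.0
```

A learning agent would add one more line inside the loop: an update that uses `(state, action, reward, next_state)` to improve the policy.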

Evaluation is a critical step in the RL process, where you assess the agent's performance using metrics and observe its performance in applicable scenarios. This ensures the agent meets the defined goals and objectives.

Model tuning and optimization involve adjusting hyperparameters, refining the algorithm, and retraining the agent to improve performance further. This process is essential for achieving optimal results.

Types of RL Agent

Reinforcement learning can be broadly categorized into three types: model-free, model-based, and hybrid. Each type has its specific use cases and methods.

In model-free reinforcement learning, the agent learns to make decisions solely based on trial and error, without any prior knowledge of the environment. This approach is often used in situations where the environment is complex and difficult to model.

Model-based reinforcement learning, on the other hand, involves the agent learning a model of the environment and using it to make decisions. This approach is often used in situations where the environment is relatively simple and can be accurately modeled.

Hybrid reinforcement learning combines elements of both model-free and model-based approaches, offering a flexible and adaptable way of learning. This approach is often used in situations where the environment is complex and dynamic.

RL Agent Applications

RL agents are being used in various domains, including gaming, robotics, and finance.

RL has achieved superhuman performance in cases like chess and video games. A notable example is AlphaGo, which plays the board game Go by using a hybrid of deep neural networks and Monte Carlo Tree Search.

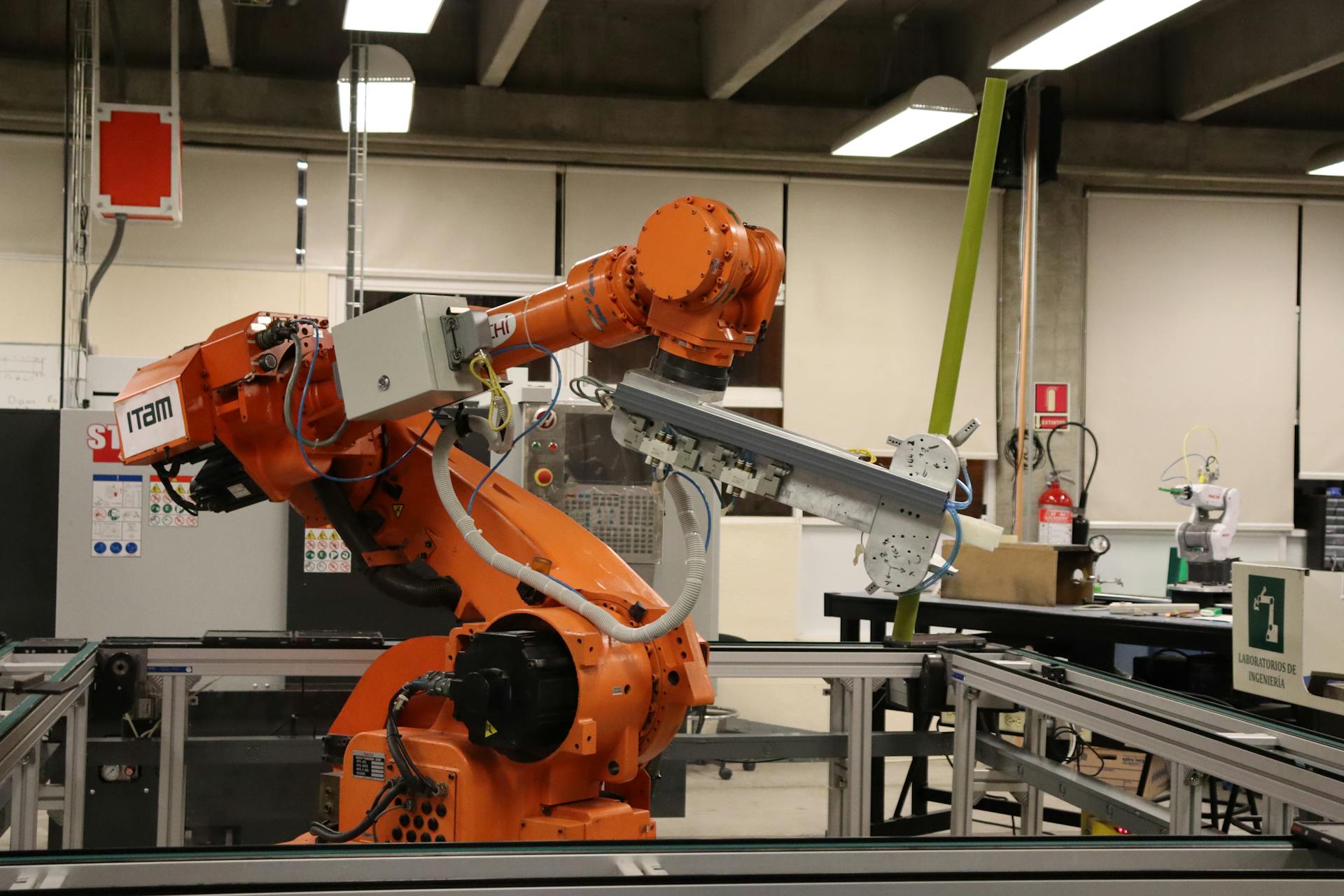

In robotics, RL helps in training robots to perform tasks like grasping objects and navigating obstacles. The trial-and-error learning process allows robots to adapt to real-world uncertainties and improve their performance over time.

RL can optimize treatment plans, manage clinical trials, and personalize medicine in healthcare. It can also suggest interventions that maximize patient outcomes by continuously learning from patient data.

Autonomous vehicles use RL-trained models to respond to obstacles, road conditions, and dynamic traffic patterns. They immediately apply these models to adapt to current driving conditions while also feeding data back into a centralized continual training process.

Here are some examples of RL success stories:

- Robotics: RL provides an efficient way to build general-purpose robots, which can learn tasks a human teacher can't demonstrate.

- AlphaGo: An RL-based Go agent that defeated top professional Lee Sedol in 2016 after learning from records of human expert games and from playing against itself.

- Autonomous Driving: RL finds application in vehicle path planning and motion prediction, which are critical tasks for autonomous driving systems.

RL is also being used in other areas, such as:

- Abstractive text summarization engines

- Dialog agents (text, speech) that can learn from user interactions and improve with time

- Learning optimal treatment policies in healthcare

- RL-based agents for online stock trading

RL Agent Benefits and Challenges

RL agents have several benefits, including their ability to operate in complex environments and optimize for long-term goals. They can learn without human supervision and are adaptable to dynamic environments.

RL agents can trade off short-term rewards for long-term benefits, making them useful for problems where the outcome is unknown until a large number of sequential actions are taken. This is especially true in environments with high latency, such as complex physical environments.

Here are some key benefits of RL agents:

- Focuses on the problem as a whole

- Does not need a separate data collection step

- Works in dynamic, uncertain environments

- Operates in complex environments

- Is optimized for long-term goals

- Doesn't require much human attention once the reward is defined

However, RL agents also have several challenges, including their need for extensive experience and the delayed rewards they receive. This can make it difficult for the agent to discover the optimal policy, especially in environments where the outcome is unknown until a large number of sequential actions are taken.

Benefits

Reinforcement learning offers numerous benefits that make it an attractive approach for complex problems. It is sometimes described as closer to artificial general intelligence (AGI) than other forms of machine learning, because the agent pursues a long-term goal while exploring possibilities autonomously.

One of the key benefits of RL is that it focuses on the problem as a whole, rather than breaking it down into subtasks. This allows RL to maximize the long-term reward, rather than just excelling at specific subtasks.

RL also doesn't need a separate data collection step, which significantly reduces the burden on the supervisor in charge of the training process. This is because training data is obtained via the direct interaction of the agent with the environment.

In RL, time matters: the experience that the agent collects is sequential and not independently and identically distributed (i.i.d.), because each action influences the states the agent sees next. RL algorithms are built around this, which lets them respond to changes in the environment as they learn.

Here are some key benefits of reinforcement learning at a glance:

- Operates in complex environments, both static and dynamic.

- Can learn without human supervision.

- Is optimized for long-term goals, focused on maximizing cumulative rewards.

Overall, RL's ability to adapt to changing environments and optimize for long-term goals makes it a powerful tool for tackling complex problems.

Challenges

RL agents need extensive experience to learn, which can be a problem in environments with high latency or complex state spaces.

The rate of data collection is limited by the environment's dynamics, making it difficult to train RL agents quickly.

Delayed rewards can make it hard for agents to discover the optimal policy, especially in environments where the outcome is unknown until a large number of actions are taken.

RL agents can trade off short-term rewards for long-term gains, but this makes it challenging to assign credit to previous actions for the final outcome.

Lack of interpretability is a significant issue with RL agents, making it difficult for observers to understand the reasons behind their actions.

Here are some of the challenges that make RL adoption difficult:

- RL agent needs extensive experience.

- Delayed rewards.

- Lack of interpretability.

These challenges can make RL agents less reliable and harder to trust, especially in high-risk environments.

RL Agent Techniques

RL Agent Techniques are crucial for an agent to learn and make decisions efficiently. Model-based reinforcement learning is one such technique that involves creating a model of the environment to plan actions and predict future states. This approach can be more efficient than model-free methods, as it allows the agent to simulate different strategies internally before acting.

Some popular model-based methods include Dyna-Q, which combines Q-learning with planning, and Monte Carlo Tree Search (MCTS), which simulates many possible future actions and states to build a search tree. Dyna-Q is particularly useful when real-world interactions are expensive or time-consuming, while MCTS excels in decision-making scenarios with a clear structure, such as board games like chess.
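
To illustrate how Dyna-Q mixes real experience with planning, here is a minimal tabular sketch on a tiny corridor world. The environment, the hyperparameter values, and all names are assumptions chosen for illustration; it follows the general Dyna-Q recipe (Q-learning update, model learning, replay of simulated transitions) rather than any particular implementation.

```python
import random


def dyna_q(n_states=6, episodes=50, planning_steps=10,
           alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    actions = (-1, 1)                  # move left or right
    goal = n_states - 1
    q = {(s, a): 0.0 for s in range(n_states) for a in actions}
    model = {}                         # learned model: (state, action) -> (reward, next_state)

    def env_step(state, action):
        """The 'real' environment: deterministic corridor, reward 1 at the goal."""
        nxt = min(max(state + action, 0), goal)
        return (1.0 if nxt == goal else 0.0), nxt

    def greedy(state):
        best = max(q[(state, a)] for a in actions)
        return rng.choice([a for a in actions if q[(state, a)] == best])

    def update(s, a, r, s2):
        """Standard Q-learning update; no bootstrapping from the terminal state."""
        bootstrap = 0.0 if s2 == goal else max(q[(s2, b)] for b in actions)
        q[(s, a)] += alpha * (r + gamma * bootstrap - q[(s, a)])

    for _ in range(episodes):
        state = 0
        while state != goal:
            explore = rng.random() < epsilon
            action = rng.choice(actions) if explore else greedy(state)
            reward, nxt = env_step(state, action)
            update(state, action, reward, nxt)        # learn from real experience
            model[(state, action)] = (reward, nxt)    # remember what the world did
            for _ in range(planning_steps):           # plan with simulated experience
                s, a = rng.choice(list(model))
                r, s2 = model[(s, a)]
                update(s, a, r, s2)
            state = nxt
    return q


q = dyna_q()
policy = {s: max((-1, 1), key=lambda a: q[(s, a)]) for s in range(5)}
print(policy)  # expected to prefer +1 (right) in every non-goal state
```

Each real step is followed by `planning_steps` extra updates replayed from the learned model, which is how Dyna-Q gets more learning out of every expensive real-world interaction.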

Hybrid reinforcement learning combines approaches to leverage their respective strengths, helping balance the trade-offs between sample efficiency and computational complexity. Techniques like Guided Policy Search (GPS) and Integrated Architectures are examples of hybrid approaches that can be used in RL agents.

Model-Free

Model-Free RL is a type of reinforcement learning where the agent learns directly from interactions with the environment, without trying to understand or predict the environment.

The agent doesn't need to have a model of the world to navigate it, which makes it a powerful technique for complex environments. For example, a robotic vacuum trained this way could learn where obstacles are by bumping into them and use that experience to clean more effectively.

Value-based methods are a common approach to model-free RL, where a Q-value represents the expected future rewards for taking a given action in a given state. Q-learning is a popular method that updates Q-values with each iteration to reflect better strategies.
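
As a rough sketch, the tabular Q-learning update can be written in a few lines. The function name `q_update`, the dictionary-based Q-table, and the step size `alpha` and discount factor `gamma` are assumptions for illustration, not something the text above prescribes.

```python
def q_update(q, state, action, reward, next_state, actions,
             alpha=0.1, gamma=0.99, done=False):
    """Move Q(state, action) a step of size alpha toward reward + gamma * max Q(next_state, .)."""
    # Unseen state-action pairs default to a value of 0.0
    best_next = 0.0 if done else max(q.get((next_state, a), 0.0) for a in actions)
    td_target = reward + gamma * best_next
    old_value = q.get((state, action), 0.0)
    q[(state, action)] = old_value + alpha * (td_target - old_value)
    return q[(state, action)]


# Hypothetical two-state example
q_table = {("s1", "left"): 0.0, ("s1", "right"): 2.0}
new_value = q_update(q_table, "s0", "right", reward=1.0, next_state="s1",
                     actions=("left", "right"), alpha=0.5, gamma=0.5)
print(new_value)  # target = 1.0 + 0.5 * 2.0 = 2.0, so Q moves halfway from 0.0: prints 1.0
```

Repeating this update over many interactions is what gradually makes the Q-values "reflect better strategies".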

Policy gradient methods focus on improving the strategy (or policy) the agent uses to choose actions, rather than estimating the value of actions in each state. These methods are useful in situations where actions can be any value, such as walking in any direction across a field.

Compared with value-based methods, policy gradient methods can handle more complex decision-making and a continuum of choices, but they usually need more computing power to work effectively.
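
For a feel of how a policy gradient method adjusts the policy directly, here is a minimal REINFORCE-style sketch on a two-armed bandit with a softmax policy. The bandit, its payout probabilities, the learning rate, and all names are assumptions for illustration; real problems would use a neural network policy and usually a baseline to reduce variance.

```python
import math
import random


def softmax(prefs):
    """Turn action preferences into probabilities."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_bandit(arm_means=(0.2, 0.8), steps=2000, lr=0.1, seed=0):
    rng = random.Random(seed)
    prefs = [0.0] * len(arm_means)     # policy parameters, one preference per arm
    for _ in range(steps):
        probs = softmax(prefs)
        arm = rng.choices(range(len(prefs)), weights=probs)[0]
        reward = 1.0 if rng.random() < arm_means[arm] else 0.0
        # For a softmax policy, d log pi(arm) / d prefs[i] = 1[i == arm] - probs[i]
        for i in range(len(prefs)):
            grad_log = (1.0 if i == arm else 0.0) - probs[i]
            prefs[i] += lr * reward * grad_log
    return softmax(prefs)


final_probs = reinforce_bandit()
print(final_probs)  # the second arm (higher payout) should end up strongly preferred
```

No action values are estimated here: rewarded actions simply become more probable, which is the core idea that carries over to continuous action spaces.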

Hybrid

Hybrid reinforcement learning combines approaches to leverage their respective strengths, helping to balance the trade-offs between sample efficiency and computational complexity.

Hybrid techniques can be particularly useful in complex environments where one approach alone may not be sufficient. For instance, Guided Policy Search (GPS) alternates between supervised learning and reinforcement learning, using supervised learning to train a policy based on data generated from a model-based controller.

GPS helps in transferring knowledge from model-based planning to direct policy learning, which can be beneficial in scenarios where the model-based approach is less accurate. Integrated architectures also integrate various model-based and model-free components in a single framework, adapting to different aspects of a complex environment.

World models, on the other hand, involve building a compact and abstract representation of the environment, which the agent uses to simulate future states. This technique reduces the need for real-world interactions, making it a useful approach in scenarios where interactions are expensive or time-consuming.

Here are some examples of hybrid reinforcement learning techniques:

- Guided Policy Search (GPS)

- Integrated architectures

- World models

Unsupervised

Unsupervised learning is a separate machine learning paradigm rather than an RL technique, but it is worth knowing alongside RL. It helps algorithms make sense of complex data without any guidance, and it's particularly useful for tasks like customer segmentation, where similar data points are clustered together to identify patterns.

Unlabeled data is the key to unsupervised learning, and it allows agents to find associations between items and reduce data complexity for easier processing.

Customer segmentation is a great example of unsupervised learning in action, as it helps businesses understand their customers and tailor their marketing efforts accordingly.

Unsupervised learning can also be used to build recommendation systems, which suggest products or services to users based on their past behavior and preferences.

RL Agent Techniques

RL techniques are notoriously hard to get right, and it's not uncommon for implementations to be plagued by the "But it works on my machine" problem, where results are hard to reproduce elsewhere. This is especially true for Deep Q-Networks, which require a massive amount of data and compute to work.

Debugging RL projects can be a nightmare, and it's not just because of the complexity of the algorithms. In fact, I've personally struggled with implementing a DQN agent, and I'm still debugging it.

One of the main challenges with RL is that it's sample inefficient, meaning it requires a huge amount of data and compute to work. This is evident in the original DQN agent, which required around 200 million frames to train on a single Atari game.

To give you a better idea of the compute needed for RL agents, check out OpenAI's blog on the topic. It's a fascinating read that will give you a sense of just how much power is required to train these agents.

Here are some popular RL agent techniques that you should know about:

- Deep Q-Networks (DQN): a type of RL agent that uses a neural network to approximate the action-value function.

- Policy Gradient Methods: a class of RL algorithms that learn a policy directly from experience.

- Actor-Critic Methods: a combination of policy gradient and value function methods that learn both the policy and the value function.

Keep in mind that these techniques are not mutually exclusive, and many RL agents use a combination of these methods to achieve good results.

Examples

Reinforcement learning is a powerful tool that has been successfully applied in various fields. Robotics is one area where RL has made a significant impact, particularly in robotic path planning.

A robot's ability to find a short, smooth, and navigable path between two locations, free of collisions and compatible with the dynamics of the robot, is a complex task that RL can efficiently solve. RL provides an efficient way to build general-purpose robots that can adapt to uncertain environments.

RL has also been used in the game of Go, where a computer program called AlphaGo defeated top professional Lee Sedol in 2016. AlphaGo learned from experience, first from records of human expert games and then from games played against itself. Its successor, AlphaGo Zero, learns entirely by playing against itself, an advantage that human players don't have.

Autonomous driving is another area where RL finds application, particularly in vehicle path planning and motion prediction. Vehicle path planning requires several low and high-level policies to make decisions over varying temporal and spatial scales.

Here are some examples of RL applications:

- Robotics: robotic path planning

- Go: AlphaGo

- Autonomous driving: vehicle path planning and motion prediction

RL works well wherever a clear reward can be defined, as in gaming and resource management. In gaming, RL can achieve superhuman performance in numerous games, such as Pac-Man. A learning algorithm playing Pac-Man might be able to move in one of four possible directions and receive rewards for collecting pellets, fruit, and power pellets.
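
The Pac-Man setup can be written down as an action space and a reward function. The sketch below is purely illustrative: the point values and the names `Move`, `REWARDS`, and `step_reward` are made up for this example and are not taken from any real Pac-Man environment.

```python
from enum import Enum


class Move(Enum):
    """The four possible directions, as (dx, dy) grid offsets."""
    UP = (0, -1)
    DOWN = (0, 1)
    LEFT = (-1, 0)
    RIGHT = (1, 0)


# Hypothetical reward values, chosen only for illustration
REWARDS = {"pellet": 10, "power_pellet": 50, "fruit": 100}


def step_reward(collected):
    """Total reward for the items collected during one time step."""
    return sum(REWARDS[item] for item in collected)


print(len(Move))                        # prints 4
print(step_reward(["pellet", "fruit"]))  # prints 110
```

Choosing these numbers is part of communicating the goal to the agent: reward only pellets and the agent has no reason to chase fruit.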

RL is also used in robotics, providing robots with the ability to learn tasks a human teacher can't demonstrate, adapt a learned skill to a new task, and achieve optimization even when analytic formulation isn't available.

RL Agent Learning Methods

Reinforcement learning is its own branch of machine learning, but it has some similarities to other types of machine learning. It resembles supervised learning in that developers must give the algorithm a specified goal; in RL they do so by defining reward functions and punishment functions.

In reinforcement learning, developers must explicitly program the algorithm with a clear understanding of what is beneficial and what is not. This level of explicit programming is higher than in unsupervised learning, where the algorithm learns by cataloging its own observations.

Reinforcement learning has a predetermined end goal, such as optimizing a system or completing a video game. This is in contrast to unsupervised learning, which doesn't have a specified output.

The four main types of machine learning are supervised, unsupervised, semi-supervised, and reinforcement learning. Here's a brief overview of each:

- Supervised learning: Algorithms train on a body of labeled data.

- Unsupervised learning: Developers turn algorithms loose on fully unlabeled data.

- Semi-supervised learning: Developers enter a relatively small set of labeled training data as well as a larger corpus of unlabeled data.

- Reinforcement learning: Situates an agent in an environment with clear parameters defining beneficial activity and nonbeneficial activity.

Reinforcement learning is more self-directed than supervised learning algorithms, once the parameters are set. This makes it a powerful tool for optimizing complex systems.

RL Agent Future and Trends

Reinforcement learning (RL) agents have made significant strides in recent years, and their future looks bright. Deep reinforcement learning uses deep neural networks to model the value function or the agent's policy, freeing users from tedious feature engineering.

RL agents are no longer limited to simple environments, thanks to the power of deep learning. With millions of trainable weights, models can learn optimal policies in complex environments.

Traditionally, RL is applied to one task at a time, making learning complex behaviors inefficient and slow. This is changing with the development of multi-task learning scenarios, where multiple agents can share the same representation of the system and improve each other's performance.

A3C (Asynchronous Advantage Actor-Critic) is an exciting development in this area: multiple worker agents run in parallel, each in its own copy of the environment, and asynchronously update a shared set of parameters. Some see work along these lines as moving RL closer to Artificial General Intelligence (AGI), where a meta-agent learns how to learn.

Reinforcement learning is projected to play a bigger role in the future of AI, with various industries exploring its potential. Marketing and advertising firms are already using RL algorithms for recommendation engines, and manufacturers are using it to train next-generation robotic systems.

Transfer learning techniques are improving the efficiency of RL agents, enabling them to use past-learned skills on different problems. This decreases the time it takes to train a system, making RL more practical for real-world applications.

Deep reinforcement learning continues to improve, with these systems becoming more independent and flexible. This is paving the way for RL to enhance AI-based digital customer experiences, alongside other technologies like AI simulation, generative AI, and federated machine learning.

RL Agent Getting Started

Getting started with building and testing RL agents can be a bit overwhelming, but don't worry, I've got you covered.

To start with the basics, you can refer to "Reinforcement Learning: An Introduction" by Richard S. Sutton and Andrew G. Barto. This book is a great resource for understanding the fundamental concepts of RL.

For a more visual learning experience, David Silver's video lectures on RL are an excellent choice. They're engaging, informative, and perfect for beginners.

If you're looking for a hands-on approach, you can try building a simple RL agent using Policy Gradients from raw pixels by following Andrej Karpathy's blog. This will get you started with Deep Reinforcement Learning in just 130 lines of Python code.

For more complex projects, you can leverage platforms like DeepMind Lab, Project Malmo, or OpenAI Gym (now maintained as Gymnasium). These tools offer rich simulated environments and a wide range of features to help you build and compare reinforcement learning algorithms.

Here are some key resources to get you started:

- Richard S. Sutton and Andrew G. Barto's book: "Reinforcement Learning: An Introduction"

- David Silver's video lectures on RL

- Andrej Karpathy's blog on Policy Gradients from raw pixels

- DeepMind Lab

- Project Malmo

- OpenAI Gym

Sources

- https://www.synopsys.com/glossary/what-is-reinforcement-learning.html

- https://gordicaleksa.medium.com/how-to-get-started-with-reinforcement-learning-rl-4922fafeaf8c

- https://www.techtarget.com/searchenterpriseai/definition/reinforcement-learning

- https://towardsdatascience.com/reinforcement-learning-101-e24b50e1d292

- https://www.grammarly.com/blog/ai/what-is-reinforcement-learning/
