U-Net conditional normalizing flows are a technique for generating high-quality, realistic images. The approach combines the strengths of U-Net architectures with the flexibility of conditional normalizing flows.

By pairing the U-Net's ability to capture spatial hierarchies with the capacity of normalizing flows to model complex distributions, these models can produce highly realistic images.

Generative Model

Generative models are a crucial part of image modeling, and one of the key techniques used in this field is normalizing flows. Normalizing flows allow us to model complex distributions by transforming a simple distribution into a more complex one through a series of invertible transformations.
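To make the idea of an invertible transformation concrete, here is a minimal one-dimensional sketch. The class name `AffineFlow1D` and its parameters are illustrative assumptions, not part of any library; it maps a standard normal variable through a single affine transformation and evaluates the resulting density with the change-of-variables rule.

```python
import math


def standard_normal_logpdf(z):
    """Log-density of a standard normal distribution at z."""
    return -0.5 * (z * z + math.log(2.0 * math.pi))


class AffineFlow1D:
    """Toy flow x = scale * z + shift, invertible as long as scale != 0."""

    def __init__(self, scale, shift):
        if scale == 0:
            raise ValueError("scale must be non-zero for the map to be invertible")
        self.scale = scale
        self.shift = shift

    def forward(self, z):
        # Sampling direction: latent -> data.
        return self.scale * z + self.shift

    def inverse(self, x):
        # Inference direction: data -> latent.
        return (x - self.shift) / self.scale

    def log_prob(self, x):
        # Change of variables: log p(x) = log p(z) - log|dx/dz|.
        z = self.inverse(x)
        return standard_normal_logpdf(z) - math.log(abs(self.scale))


flow = AffineFlow1D(scale=2.0, shift=1.0)
x = flow.forward(0.5)
print(x, flow.inverse(x), flow.log_prob(x))
```

Real flows stack many such transformations and parameterize them with neural networks, but the bookkeeping (invert, then correct the density by the log-determinant) stays the same.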

The performance of generative models is commonly measured in bits per dimension (bpd), which indicates how efficiently a model can compress an image; lower is better. Using variational dequantization improves upon standard dequantization by 0.04 bpd.

Inference time and sampling time also matter in practice. For example, the simple model has an inference time of 20 ms, while the multiscale model has an inference time of 23 ms. However, the multiscale model has a notably faster sampling time of 15 ms.

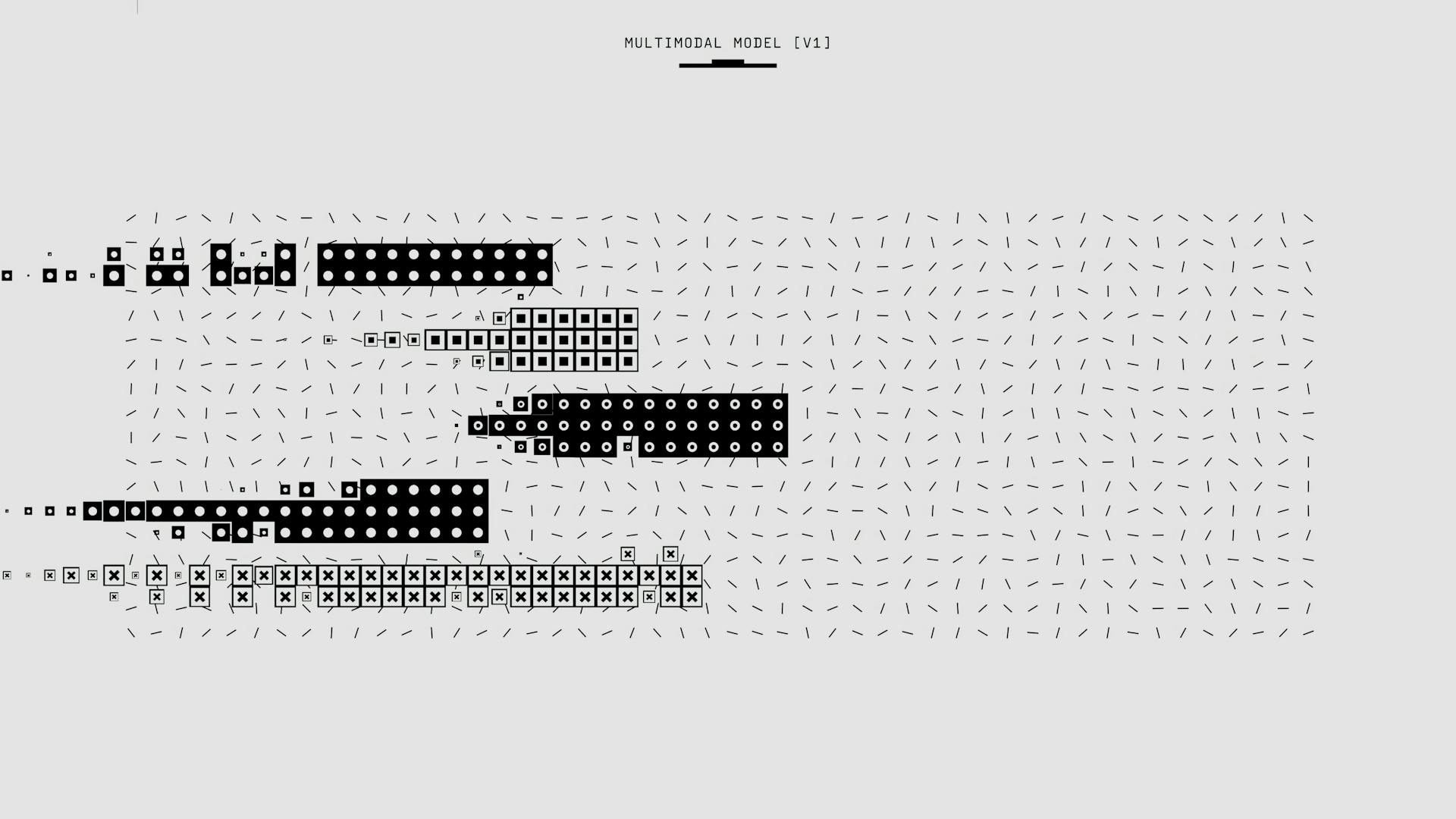

Here's a comparison of the three models' quantitative results:

The samples from the multiscale model show a clear difference from the simple model, with the multiscale model able to learn full, global relations that form digits.

The multi-scale architecture is not only stronger for density modeling but also more efficient, with a notable improvement in inference time and sampling time despite having more parameters.

The simple model has only learned local, small correlations, while the multi-scale model was able to learn full, global relations that form digits.

Samples from normalizing flows are sharp because flows naturally model complex, multi-modal distributions, whereas VAEs add independent noise at the decoder output.

Coupling Layers

Coupling layers are a core building block of flow-based generative models. They provide transformations that are expressive enough to learn complex patterns in data while remaining easy to invert.

A coupling layer splits its input into two parts. The first part passes through unchanged and is fed to a neural network that predicts a scale and a shift for the second part.

Because the second part is transformed using only values that stay available in the output, the layer can be inverted exactly and its Jacobian determinant is cheap to compute. Stacking several coupling layers with alternating splits lets the flow capture the dependencies between all features in the data.
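As an illustration of how a coupling layer works, the following sketch implements an affine coupling transformation on a flat vector with NumPy. The `conditioner` function is a hypothetical fixed stand-in for the neural network, and the plain half split is an assumption made for brevity; image flows usually select the two parts with a mask instead.

```python
import numpy as np


def conditioner(x_a):
    """Hypothetical stand-in for the neural network of a coupling layer.

    Returns a log-scale and a shift that depend only on the untouched half.
    """
    log_scale = np.tanh(x_a)  # bounded log-scale for numerical stability
    shift = 0.5 * x_a
    return log_scale, shift


def coupling_forward(x):
    """Transform the second half of x conditioned on the first half."""
    x_a, x_b = np.split(x, 2)
    log_scale, shift = conditioner(x_a)
    z_b = x_b * np.exp(log_scale) + shift
    # The Jacobian is triangular, so its log-determinant is a simple sum.
    log_det = np.sum(log_scale)
    return np.concatenate([x_a, z_b]), log_det


def coupling_inverse(z):
    """Exact inverse: the first half is unchanged, so the same scale and shift are recovered."""
    z_a, z_b = np.split(z, 2)
    log_scale, shift = conditioner(z_a)
    x_b = (z_b - shift) * np.exp(-log_scale)
    return np.concatenate([z_a, x_b])


x = np.array([0.3, -1.2, 0.8, 2.0])
z, log_det = coupling_forward(x)
print(z, log_det, coupling_inverse(z))
```

Note that the inverse never has to invert the conditioner itself, which is why the network inside a coupling layer can be arbitrarily complex.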

Architecture

Deep normalizing flows operate on the same dimensions as the input, which can be computationally costly, especially for high-dimensional inputs.

One way to address this issue is by applying a multi-scale architecture, which is commonly used in image domain normalizing flows.

This architecture splits off half of the latent dimensions after the first N flow transformations and directly evaluates them on the prior.

The remaining half is run through N more flow transformations, and depending on the input size, it's split again or stopped altogether.

The two key operations in this setup are squeeze and split. Squeeze trades spatial resolution for channels, and split removes half of the dimensions from the flow and evaluates them directly on the prior.

To build a multi-scale flow, we first define the squeeze and split operations and then assemble them into a multi-scale architecture.
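The two operations can be sketched with NumPy as follows. This is a minimal illustration under assumptions, not the tutorial's implementation: tensors use a channels-first `(C, H, W)` layout, the prior is a standard normal, and the function names are made up for this example.

```python
import numpy as np


def squeeze(x):
    """Move each 2x2 spatial block into the channel axis: (C, H, W) -> (4C, H/2, W/2)."""
    c, h, w = x.shape
    x = x.reshape(c, h // 2, 2, w // 2, 2)
    x = x.transpose(0, 2, 4, 1, 3)
    return x.reshape(4 * c, h // 2, w // 2)


def unsqueeze(z):
    """Inverse of squeeze: (4C, H, W) -> (C, 2H, 2W)."""
    c4, h, w = z.shape
    c = c4 // 4
    z = z.reshape(c, 2, 2, h, w)
    z = z.transpose(0, 3, 1, 4, 2)
    return z.reshape(c, 2 * h, 2 * w)


def standard_normal_logpdf(z):
    return -0.5 * (z ** 2 + np.log(2.0 * np.pi))


def split(z):
    """Keep half of the channels in the flow and score the other half on the prior."""
    z_keep, z_out = np.split(z, 2, axis=0)
    log_prior = standard_normal_logpdf(z_out).sum()
    return z_keep, z_out, log_prior


x = np.arange(16, dtype=float).reshape(1, 4, 4)
s = squeeze(x)
z_keep, z_out, log_prior = split(s)
print(s.shape, z_keep.shape, z_out.shape)
```

Neither operation changes the density by itself: squeeze only rearranges values, and split only decides which values continue through further flow layers.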

Deep normalizing flows like Glow and Flow++ often apply a split operation directly after squeezing, but shallow flows require more careful placement of the split operation.

Our setup is inspired by the original RealNVP architecture, which is shallower than more recent state-of-the-art architectures.

For the MNIST dataset, we apply the first squeeze operation after two coupling layers but don't apply a split operation yet.

We apply two more coupling layers before finally applying a split flow and squeeze again, with the last four coupling layers operating on a scale of 7x7x8.

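The hidden dimensions of the coupling layers on the squeezed input are often scaled up by a factor of 2 to compensate for the larger number of channels, although a smaller increase helps keep the number of parameters reasonable.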
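The MNIST layout described above can be checked with a few lines of shape arithmetic. The helper names below are illustrative assumptions; shapes are written as height x width x channels to match the 7x7x8 notation.

```python
def squeeze_shape(shape):
    """Squeeze halves height and width and multiplies channels by 4."""
    h, w, c = shape
    return (h // 2, w // 2, 4 * c)


def split_shape(shape):
    """Split keeps half of the channels in the flow; the rest go to the prior."""
    h, w, c = shape
    return (h, w, c // 2)


def trace_mnist_shapes(input_shape=(28, 28, 1)):
    """Follow the tensor shape through the multi-scale layout."""
    shape = input_shape
    trace = [("2 coupling layers", shape)]
    shape = squeeze_shape(shape)
    trace.append(("squeeze + 2 coupling layers", shape))
    shape = split_shape(shape)
    trace.append(("split", shape))
    shape = squeeze_shape(shape)
    trace.append(("squeeze + 4 coupling layers", shape))
    return trace


for name, shape in trace_mnist_shapes():
    print(f"{name:30s} {shape}")
```

The last entry confirms that the final four coupling layers see a 7x7x8 tensor, half the number of values of the original 28x28x1 image.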

Training and Analysis

We'll start by training the flow models. We provide pre-trained models that contain the validation and test performance, and run-time information. These pre-trained models can be a huge time-saver, especially since flow models are computationally expensive.

To get the most out of these pre-trained models, it's best to rely on them for a first run through the notebook. This will give you a chance to see how the models perform without having to spend hours training them from scratch.

In the last part of the notebook, we'll train all the models we have implemented above, and try to analyze the effect of the multi-scale architecture and variational dequantization.

Discussion and Conclusions

In limited-view photoacoustic imaging, the resolution of deep near-vertical structures in a blood vessel system is a fundamental challenge.

Conventional regularization methods based on sparsity in some transformed domain are typically too generic and practically unable to fill the sensitivity gap.

A straightforward alternative is to use problem-specific information, which is where learned prior regularization comes in. This approach can be beneficial for highly ill-posed problems such as limited-view photoacoustic imaging.

The MAP estimate based on conditional deep priors has shown potential in recovering the vertical features of a blood vessel image, outperforming marginal deep priors in both in- and out-of-distribution data.

To avoid misleading reconstructions caused by "hallucinations", it's essential to further assess the strong bias imposed by this type of learned prior.

Visualization

Visualizing the latents at different levels of the multi-scale flow helps explain how the model organizes information. The early split variables have a smaller effect on the image, but still contain local noise patterns.

The flow learns to separate higher-level information into the final variables. The effect of the early split variables is visible at the borders of the digits, where small differences can be spotted.

Visualizing dequantization helps us understand how the flow handles sharp edges and borders. The dequantization distribution in MNIST images shows a strong bias towards 0 (black), with a sharp border.

Density Modeling and Sampling

Density modeling and sampling are crucial aspects of image modeling. The goal is to accurately represent and generate images.

Using variational dequantization improves upon standard dequantization in terms of bits per dimension. The difference may seem small at 0.04 bpd, but it's a significant step for generative models.

The inference time for variational dequantization is longer due to the additional complexity, but this doesn't affect the sampling time. In fact, inverting variational dequantization is the same as dequantization, which simply involves finding the next lower integer.
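The following sketch shows why the inverse is so cheap. It assumes 8-bit images (256 intensity levels) and standard dequantization that adds uniform noise in [0, 1) to each integer pixel value; the function names are illustrative. Whatever distribution the noise comes from, as long as it stays in [0, 1), flooring recovers the original pixel.

```python
import numpy as np


def dequantize(pixels, rng, quants=256):
    """Turn integer pixels into continuous values in [0, 1) by adding uniform noise."""
    noise = rng.uniform(0.0, 1.0, size=pixels.shape)  # u ~ U[0, 1)
    return (pixels + noise) / quants


def quantize(values, quants=256):
    """Invert dequantization by taking the next lower integer."""
    return np.clip(np.floor(values * quants), 0, quants - 1).astype(np.int64)


rng = np.random.default_rng(0)
pixels = np.array([[0, 255], [17, 128]])
continuous = dequantize(pixels, rng)
print(continuous)
print(quantize(continuous))
```

Variational dequantization would only change how `noise` is produced, which is why it adds cost to inference but not to sampling.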

The multi-scale architecture shows notable improvements in both inference time and sampling time, despite having more parameters. This makes it a more efficient option.

Visualization of Latents

Visualization of latents can reveal how generative models store information in different layers.

By analyzing the variables split at early layers, we can see that they indeed have a smaller effect on the image. Still, small differences can be spotted when looking carefully at the borders of the digits.

For instance, in the middle, the top part of the 3 has different thicknesses for different samples, although all of them represent the same coarse structure. This shows that the flow learns to separate higher-level information in the final variables.

The early split variables contain local noise patterns, which can be seen in the variations of the hole at the top of the 8 for different samples.

Dequantization

Dequantization turns discrete pixel values into continuous ones by adding noise, so that a flow can model them as a continuous density. Standard dequantization uses a uniform distribution for the noise, which effectively leads to images being represented as hypercubes with sharp borders.

Such sharp borders are difficult to model with smooth transformations. The problem is especially visible for real-world images, which commonly carry independent Gaussian noise on their pixels: a flow would rather prefer smooth, prior-like distributions.

Variational dequantization, proposed by Ho et al., is a more sophisticated approach: a second normalizing flow takes the image as external input and learns a flexible distribution over the noise. In code, it can be implemented by inheriting from the original dequantization class.

Variational dequantization can be used as a substitute for dequantization, and it has been shown to learn a much smoother distribution with a Gaussian-like curve. This is in contrast to the dequantization distribution, which shows a strong bias towards 0 (black) and a sharp border.

Dequantization

Dequantization is a crucial component of conditional normalizing flows, and it's essential to understand how it works. Dequantization uses a uniform distribution for the noise u, effectively representing images as hypercubes with sharp borders.

This can be problematic, as modeling such sharp borders is not easy for flows, which use smooth transformations to convert them into a Gaussian distribution. The issue is particularly challenging when dealing with real-world images, which often exhibit independent Gaussian noise on pixels.

To address this issue, dequantization has been extended to more sophisticated, learnable distributions beyond uniform in a variational framework. This approach is called variational dequantization and was proposed by Ho et al. Instead of a fixed uniform distribution, the noise u is drawn from a learned distribution q(u|x), and training maximizes a variational lower bound on the log probability of the input x: the expected value of log p(x+u) - log q(u|x) under q(u|x).
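Written out, the bound follows from Jensen's inequality. The notation below is an assumption chosen for this sketch: $P(x)$ is the probability of the discrete image, $p$ is the continuous density modeled by the flow, $D$ is the number of dimensions, and $q(u \mid x)$ is the learned noise distribution on $[0,1)^D$.

```latex
\log P(x)
  = \log \int_{[0,1)^D} p(x + u)\, du
  = \log \mathbb{E}_{u \sim q(u \mid x)}\!\left[ \frac{p(x + u)}{q(u \mid x)} \right]
  \;\ge\; \mathbb{E}_{u \sim q(u \mid x)}\!\left[ \log \frac{p(x + u)}{q(u \mid x)} \right]
```

With a uniform $q(u \mid x)$ the denominator equals 1 and the bound reduces to the standard dequantization objective.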

Variational Dequantization can be used as a substitute for dequantization, and it has been shown to perform well in various experiments. Inheriting the original dequantization class, Variational Dequantization can be implemented using a second normalizing flow that takes x as external input and learns a flexible distribution over u.

Images

Images are a crucial aspect of normalizing flows, especially when it comes to modeling complex data distributions. A normalizing flow maps an input image to an equally sized latent space, allowing for density estimation and sampling new points.

The standard metric used in generative models, including normalizing flows, is bits per dimension (bpd). Bpd describes how many bits we would need to encode a particular example under the modeled distribution, with lower values indicating a more likely example.

To calculate the bits per dimension score, we take the negative log-likelihood and change the log base from e (nats) to 2 (bits). For images, we then divide by the number of dimensions, that is, the product of height, width, and channel number.
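The conversion fits in a few lines of Python. The function name and the example shape are assumptions for illustration; the input is a negative log-likelihood measured in nats, as produced by a natural logarithm.

```python
import math


def bits_per_dimension(nll_nats, shape):
    """Convert a negative log-likelihood in nats into bits per dimension.

    shape is the image shape, e.g. (height, width, channels).
    """
    num_dims = math.prod(shape)
    # Multiplying by log2(e) changes the log base from e to 2.
    return nll_nats * math.log2(math.e) / num_dims


# An image whose likelihood costs exactly one bit per pixel.
nll = 28 * 28 * 1 * math.log(2.0)
print(bits_per_dimension(nll, (28, 28, 1)))
```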

The test_step function of the flow model makes use of importance sampling, unlike the training and validation steps. This gives a tighter estimate of the likelihood, which is particularly important when modeling discrete images in continuous space.
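A minimal sketch of such an estimator is shown below. It assumes that several noise samples have already been drawn for one image and that each sample comes with a log weight, log p(x+u) - log q(u|x); the function name and the example values are made up for illustration. The estimate is the logarithm of the average weight, computed in a numerically stable way.

```python
import math


def log_mean_exp(log_weights):
    """Stable computation of log(mean(exp(w))) over a list of log weights."""
    largest = max(log_weights)
    total = sum(math.exp(w - largest) for w in log_weights)
    return largest + math.log(total) - math.log(len(log_weights))


# Hypothetical log weights for one image from four noise samples.
log_weights = [-812.4, -810.9, -811.7, -813.0]
estimate = log_mean_exp(log_weights)
average = sum(log_weights) / len(log_weights)
print(estimate, average)
```

The importance-weighted estimate is never smaller than the plain average of the log weights, which is what a single-sample training objective optimizes in expectation.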

Variational dequantization improves upon standard dequantization in terms of bits per dimension, with a difference of 0.04 bpd. However, evaluating the probability of an image takes longer with variational dequantization, which also leads to a longer training time.

The multi-scale architecture shows a notable improvement in bits per dimension, with a drop of 0.04 bpd compared to the simple and vardeq models. Additionally, the inference time and sampling time improved despite having more parameters.

Frequently Asked Questions

What is the equation for normalizing flow?

A normalizing flow uses the equation $X = f_\theta(Z)$ to map a latent variable $Z$ to an output $X$, where $f_\theta$ is a deterministic and invertible function. Because the mapping is invertible, the latent for any data point can be recovered with $Z = f_\theta^{-1}(X)$.
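The density of $X$ then follows from the change-of-variables formula, where $p_Z$ denotes the prior density on the latent space (a symbol introduced here for illustration):

```latex
p_X(x) = p_Z\!\left(f_\theta^{-1}(x)\right)
         \left| \det \frac{\partial f_\theta^{-1}(x)}{\partial x} \right|
```

Taking the logarithm turns the product into a sum, so each layer of a flow only needs to contribute the log-determinant of its own Jacobian.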

Sources

- https://uvadlc-notebooks.readthedocs.io/en/latest/tutorial_notebooks/tutorial11/NF_image_modeling.html

- https://github.com/janosh/awesome-normalizing-flows

- https://lightning.ai/docs/pytorch/stable//notebooks/course_UvA-DL/09-normalizing-flows.html

- https://www.aimsciences.org/article/doi/10.3934/fods.2024028

- https://slim.gatech.edu/Publications/Public/Conferences/NIPS/2021/orozco2021NIPSpicp/deep_inverse_2021.html
