Incorporating human oversight into AI workflows can significantly improve their accuracy and reliability. One study found that AI models incorporating human feedback reached up to 95% accuracy on certain tasks.

This is because humans can provide a crucial check on AI's decisions, helping to prevent errors and biases. For instance, in the case of medical diagnosis, human oversight can help prevent AI from misdiagnosing conditions due to limited training data.

Human-in-the-loop workflows can also help to increase the efficiency of AI systems. By automating routine tasks and allowing humans to focus on high-level decision-making, organizations can reduce processing times and improve overall productivity.


Benefits of Human Involvement

Humans play a crucial role in the human-in-the-loop (HITL) approach to large language models (LLMs), especially when it comes to achieving high accuracy in complex tasks.

Analytical thinking is a key human capability: it lets people analyze data, detect discrepancies, and apply contextual knowledge to evaluate the LLM's output critically.

Thanks to their nuanced understanding of the subject matter, human experts in niche domains can provide invaluable insights and correct errors that may elude the LLM.

The "human in the loop" approach has been used successfully in various real-world scenarios, including at an insurance firm that reached a 90% accuracy rate after months of human training and involvement.

In such a workflow, human reviewers can handle intricate cases, validate claim details, and initiate the claims procedure, which demonstrates the effectiveness of the approach.

In fact, human intervention is often necessary to achieve the last 20% of accuracy, which is critical in tasks that require precision, such as processing client claims.

Here are some key benefits of human involvement in an LLM human-in-the-loop workflow:

- Improved accuracy: Human experts can provide critical insights and correct errors that may elude the LLM.

- Enhanced contextual understanding: Humans can apply contextual knowledge to evaluate the LLM's output critically.

- Increased precision: Human intervention is often necessary to achieve high accuracy in complex tasks.

By combining human capabilities with machine learning algorithms, we can achieve what neither a human being nor a machine can achieve on their own.

Here's an interesting read: Human in the Loop Approach

Improving Safety and Precision

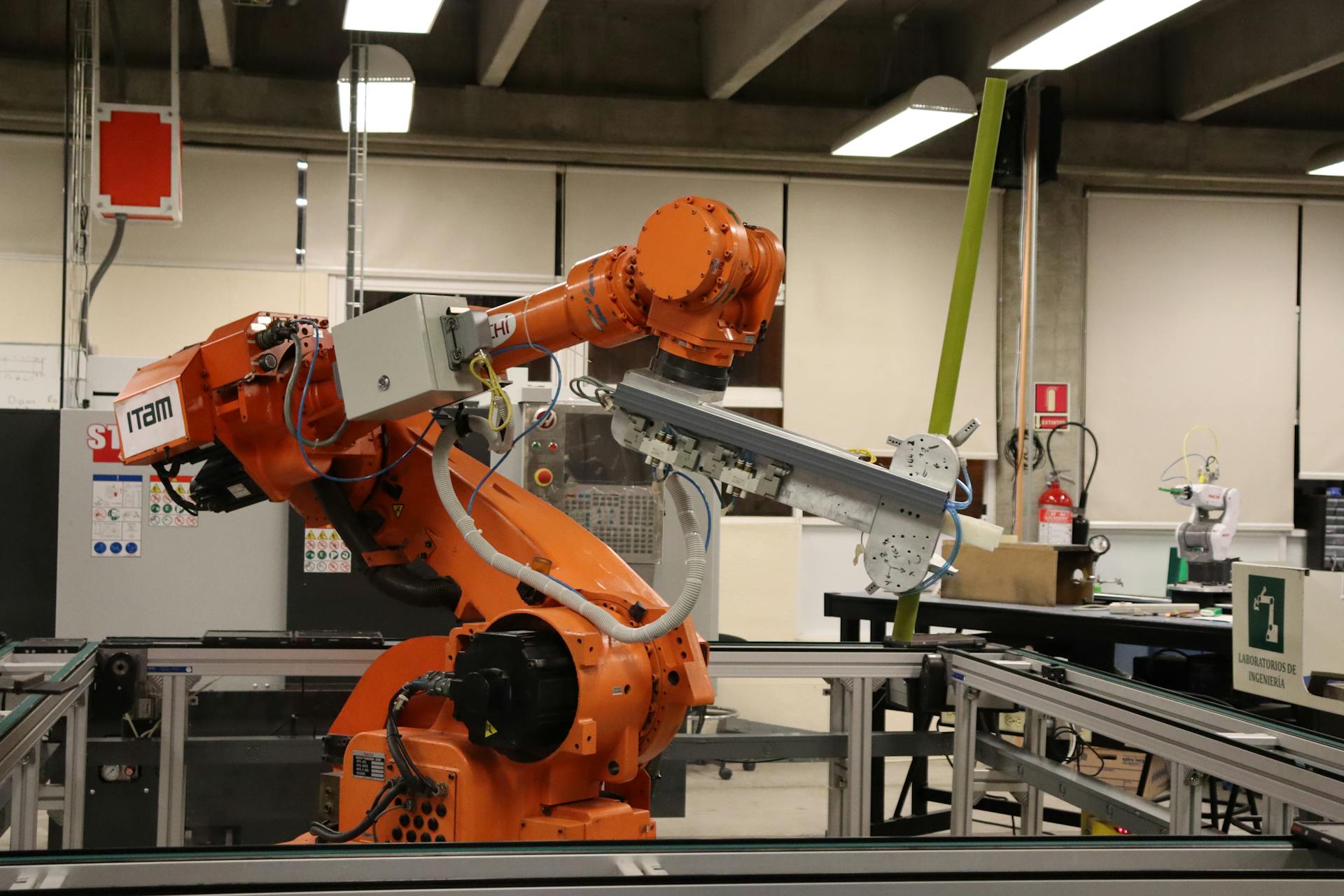

Manufacturing critical parts for vehicles or airplanes requires human-level precision to ensure safety and accuracy. Machine learning can help with inspections, but the system is still best monitored by humans.

In situations where AI needs to deliver precision, as in manufacturing, human oversight is crucial: it helps ensure that equipment meets standards and catches errors that could compromise safety.

AI systems can occasionally hallucinate, providing incorrect or misleading information. This can erode customer trust and damage a brand if unchecked.

Human oversight is necessary to prevent the spread of erroneous information. By integrating a human-in-the-loop workflow, businesses can mitigate the risks inherent in AI technology.

In systems without human oversight, mistakes can lead to escalations or unresolved customer issues. This can damage customer relationships and the brand.

Human-AI Collaboration

Humans and LLMs are poised to maintain a symbiotic partnership, with humans contributing critical thinking and specialized knowledge to achieve optimal accuracy.

As LLM architectures progress, humans in this partnership will play a more concentrated role in training and supervision, and the "human in the loop" methodology is likely to remain the most dependable way to realize the full accuracy potential of LLMs.

To guide the model and get the desired outputs, humans can use prompt engineering, which involves carefully designing text prompts to give the model detailed instructions, examples, and guardrails.

Common prompt engineering techniques include:

- Few-shot learning: Include examples of the desired input-output mapping directly in the prompt to "teach" the model what to do

- Role specification: Specify the role or persona the model should embody in the prompt, such as "You are a helpful assistant" or "You are an experienced Python programmer"

- Step-by-step instructions: Break down a complex task into a series of explicit steps in the prompt for the model to follow

- Do's and don'ts: List out things the model should and should not do in the prompt, such as "Do not mention competitors" or "Use a friendly tone"
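The four techniques can be combined in a single prompt. The Python sketch below assembles them into one string; `PromptSpec`, `build_prompt`, and the sample product data are hypothetical illustrations rather than part of any library, and the resulting text would be passed to whichever LLM API you use.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the four prompt-engineering techniques."""
    role: str                                                      # role specification
    steps: list[str]                                               # step-by-step instructions
    dos: list[str] = field(default_factory=list)                   # things the model should do
    donts: list[str] = field(default_factory=list)                 # things the model should avoid
    examples: list[tuple[str, str]] = field(default_factory=list)  # few-shot (input, output) pairs


def build_prompt(spec: PromptSpec, user_input: str) -> str:
    """Assemble one text prompt from a PromptSpec plus the new input."""
    parts = [spec.role, "", "Follow these steps:"]
    parts += [f"{i}. {step}" for i, step in enumerate(spec.steps, start=1)]
    rules = [f"Do: {d}" for d in spec.dos] + [f"Don't: {d}" for d in spec.donts]
    if rules:
        parts += [""] + rules
    for example_in, example_out in spec.examples:  # few-shot demonstrations
        parts += ["", f"Input: {example_in}", f"Output: {example_out}"]
    # The new input goes last, with an open "Output:" for the model to complete.
    parts += ["", f"Input: {user_input}", "Output:"]
    return "\n".join(parts)


spec = PromptSpec(
    role="You are an experienced copywriter for an outdoor-gear store.",
    steps=["Read the product attributes.", "Write a two-sentence description."],
    dos=["Use a friendly tone."],
    donts=["Mention competitors."],
    examples=[("Tent, 2-person, 1.8 kg", "A roomy two-person tent that weighs just 1.8 kg.")],
)
print(build_prompt(spec, "Sleeping bag, rated to -5 C, 1.1 kg"))
```

Keeping the techniques as separate fields makes it easy to test which one actually changes the model's behavior.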

Even with human guidance and prompt engineering, LLMs can still produce outputs that are incorrect, inconsistent, biased, or misaligned, so it's essential to have safeguards in place to catch and filter problematic outputs.

Some common output filtering techniques include keyword blocking, sentiment analysis, fact checking, plagiarism detection, and semantic similarity.

An automated filtering layer runs each output through a series of such checks and catches anything that fails before it reaches users.

The specifics of each filtering function will depend on your particular use case and requirements.
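As a rough illustration, the sketch below implements two of the techniques listed above: keyword blocking and a crude word-overlap stand-in for semantic similarity (real systems would more likely compare embeddings). Sentiment analysis, fact checking, and plagiarism detection usually rely on external models or services, so they are left out. All function names, the blocked term, and the 0.2 threshold are assumptions chosen for the example.

```python
import re
from typing import Callable, Optional

# A filter returns None if the text passes, or a short reason string if it fails.
Filter = Callable[[str], Optional[str]]


def _words(text: str) -> set[str]:
    """Lowercase alphanumeric tokens; punctuation and apostrophes split words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def keyword_filter(blocked: set[str]) -> Filter:
    """Keyword blocking: fail if any blocked single-word term appears."""
    blocked_lower = {b.lower() for b in blocked}

    def check(text: str) -> Optional[str]:
        hits = sorted(_words(text) & blocked_lower)
        return f"blocked keywords: {', '.join(hits)}" if hits else None

    return check


def similarity_filter(reference: str, min_overlap: float) -> Filter:
    """Crude stand-in for semantic similarity: Jaccard overlap of word sets."""
    ref = _words(reference)

    def check(text: str) -> Optional[str]:
        words = _words(text)
        union = words | ref
        overlap = len(words & ref) / len(union) if union else 1.0
        return None if overlap >= min_overlap else f"low similarity ({overlap:.2f})"

    return check


def run_filters(text: str, filters: list[Filter]) -> list[str]:
    """Apply every filter and collect failure reasons; an empty list means pass."""
    return [reason for f in filters if (reason := f(text)) is not None]


def route(text: str, filters: list[Filter]) -> str:
    """Publish clean outputs; send anything that fails a check to human review."""
    return "human_review" if run_filters(text, filters) else "publish"


filters = [
    keyword_filter({"CompetitorCo"}),
    similarity_filter("lightweight two person tent", min_overlap=0.2),
]
print(route("A lightweight two person tent for backpacking", filters))  # publish
print(route("Better than CompetitorCo's tent", filters))                # human_review
```

Because each check is a small function that returns a reason, filters can be added or removed per use case, and the collected reasons can be shown to the human reviewer.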

Evaluation and Improvement

Evaluators can be deployed on Humanloop to support both testing new versions of your Prompts and Tools during development and monitoring live apps that are already in production.

Offline Evaluations involve combining Evaluators with predefined Datasets to evaluate your application as you iterate in your prompt engineering workflow, or to test for regressions in a CI environment.

A test Dataset is a collection of Datapoints, which are roughly analogous to unit tests or test cases in traditional programming.
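To show how Datasets and Evaluators fit together, here is a minimal, library-agnostic Python sketch of an offline evaluation. It is not the Humanloop SDK: `Datapoint`, `run_offline_eval`, `exact_match`, and the toy capital-city app are hypothetical stand-ins.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Datapoint:
    """One test case: inputs for the app plus the expected (target) output."""
    inputs: dict[str, str]
    target: str


# An evaluator judges one output against its datapoint; True means "pass".
Evaluator = Callable[[str, Datapoint], bool]


def exact_match(output: str, dp: Datapoint) -> bool:
    return output.strip().lower() == dp.target.strip().lower()


def run_offline_eval(app: Callable[[dict[str, str]], str],
                     dataset: list[Datapoint],
                     evaluators: dict[str, Evaluator]) -> dict[str, float]:
    """Run the app on every datapoint and return each evaluator's pass rate."""
    outputs = [app(dp.inputs) for dp in dataset]
    return {
        name: sum(ev(out, dp) for out, dp in zip(outputs, dataset)) / len(dataset)
        for name, ev in evaluators.items()
    }


# Toy "app" standing in for a prompt plus model call.
CAPITALS = {"France": "Paris", "Japan": "Tokyo"}


def toy_app(inputs: dict[str, str]) -> str:
    return CAPITALS.get(inputs["country"], "unknown")


dataset = [
    Datapoint({"country": "France"}, "Paris"),
    Datapoint({"country": "Japan"}, "Tokyo"),
    Datapoint({"country": "Peru"}, "Lima"),
]
scores = run_offline_eval(toy_app, dataset, {"exact_match": exact_match})
print(scores)
```

In a CI pipeline, a regression test could simply assert that each pass rate stays above an agreed threshold.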

To continuously monitor and improve your model, you should look for patterns in the feedback to identify areas where the model consistently falls short.

You can use a dashboard to track the model's key performance metrics over time, such as:

- Percentage of outputs that pass automated filters

- Average human rating scores across different dimensions

- Percentage of outputs that require manual edits

- Number of customer complaints or support tickets related to the generated content

- Engagement metrics like click-through rates and conversion rates
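The first four metrics can be computed directly from review logs, as in the hypothetical sketch below; the `ReviewRecord` fields are assumptions about what such a log might contain. Engagement metrics such as click-through and conversion rates usually come from a separate analytics system and are not modeled here.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class ReviewRecord:
    """Hypothetical log entry for one generated output after filtering and review."""
    passed_filters: bool
    ratings: dict[str, float] = field(default_factory=dict)  # e.g. {"accuracy": 4, "tone": 5}
    manually_edited: bool = False
    complaints: int = 0  # complaints or support tickets traced to this output


def summarize(records: list[ReviewRecord]) -> dict[str, float]:
    """Compute dashboard metrics over a batch of review records."""
    if not records:
        return {}
    n = len(records)
    summary = {
        "pct_passed_filters": 100 * sum(r.passed_filters for r in records) / n,
        "pct_manually_edited": 100 * sum(r.manually_edited for r in records) / n,
        "total_complaints": float(sum(r.complaints for r in records)),
    }
    # Average each rating dimension over only the records that rated it.
    for dim in sorted({d for r in records for d in r.ratings}):
        summary[f"avg_{dim}"] = mean(r.ratings[dim] for r in records if dim in r.ratings)
    return summary


records = [
    ReviewRecord(True, {"accuracy": 4, "tone": 5}),
    ReviewRecord(True, {"accuracy": 2}, manually_edited=True, complaints=1),
    ReviewRecord(False, {"accuracy": 3, "tone": 3}, manually_edited=True),
    ReviewRecord(True),
]
print(summarize(records))
```

Computing the summary per day or per model version gives the time series a dashboard would plot.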

By monitoring these metrics, you can spot trends and proactively address issues before they become major problems.

Human-in-the-Loop (HITL) learning is an iterative process where humans provide feedback on model outputs, which is then used to fine-tune the model's performance over time.

The HITL flow involves starting with an initial set of training examples, fine-tuning the model, generating outputs for evaluation, and collecting human feedback.

You can repeat this process over multiple rounds to arrive at a high-quality fine-tuned model.

It's essential to define clearly what you want the LLM to achieve and how you will measure success: the key outputs, the success metrics, and a framework for evaluating the model's performance.
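One way to make that definition explicit is to write it down as data that can be checked automatically. In this hypothetical sketch, `TaskSpec` and `SuccessCriterion` are illustrative names, and the metric names and thresholds are placeholders rather than recommended values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuccessCriterion:
    metric: str               # name of a tracked metric, e.g. "pct_passed_filters"
    threshold: float          # target value for that metric
    higher_is_better: bool = True

    def met(self, value: float) -> bool:
        return value >= self.threshold if self.higher_is_better else value <= self.threshold


@dataclass(frozen=True)
class TaskSpec:
    """Hypothetical written definition of what the LLM should achieve."""
    task: str
    key_outputs: tuple[str, ...]
    criteria: tuple[SuccessCriterion, ...]

    def evaluate(self, metrics: dict[str, float]) -> dict[str, bool]:
        """Report, per criterion, whether it is met; a missing metric counts as not met."""
        return {c.metric: c.metric in metrics and c.met(metrics[c.metric]) for c in self.criteria}


spec = TaskSpec(
    task="Write product descriptions from catalog attributes",
    key_outputs=("two-sentence description", "three bullet highlights"),
    criteria=(
        SuccessCriterion("pct_passed_filters", 95.0),
        SuccessCriterion("avg_accuracy", 4.0),
        SuccessCriterion("pct_manually_edited", 10.0, higher_is_better=False),
    ),
)
result = spec.evaluate({"pct_passed_filters": 97.0, "avg_accuracy": 3.6, "pct_manually_edited": 8.0})
print(result, all(result.values()))
```

Here the accuracy criterion fails, so `all(result.values())` is `False`, which would signal that another round of human feedback is needed.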

Implementing HITL

Implementing HITL involves a basic process that includes defining your task, collecting initial training examples, fine-tuning the model, and having human raters evaluate the outputs. This process is repeated over multiple rounds to continuously improve the model.

To get started, you'll need to define your task, outputs, and success metrics. You'll also need to collect an initial set of training examples; for a product description task, this can be as few as 500 products. Fine-tune the base LLM on these examples to create a task-specific model, then generate outputs from the model for a subset of inputs.

The key steps in implementing HITL are:

- We start with an initial set of training examples.

- We fine-tune the model, generate outputs for evaluation, and collect human feedback.

- We expand the training set with the human-scored and human-edited examples.

- We repeat this process over multiple rounds to arrive at a high-quality fine-tuned model.
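These steps can be sketched in Python as a simple loop. `fine_tune` and `human_review` are hypothetical callables standing in for a real training job and a real review interface; the toy versions at the bottom only memorize pairs and rewrite generic drafts, so the example runs without any model.

```python
from typing import Callable

Example = tuple[str, str]     # (input, output) training pair
Model = Callable[[str], str]  # a fine-tuned model, viewed as a function


def hitl_rounds(
    seed_examples: list[Example],
    batches: list[list[str]],                                # one batch of new inputs per round
    fine_tune: Callable[[list[Example]], Model],             # hypothetical training step
    human_review: Callable[[str, str], tuple[float, str]],   # returns (score, edited output)
    min_score: float = 4.0,
) -> tuple[Model, list[Example]]:
    training_set = list(seed_examples)
    model = fine_tune(training_set)                 # start from the initial examples
    for batch in batches:
        for x in batch:
            draft = model(x)                        # generate an output for evaluation
            score, edited = human_review(x, draft)  # collect human feedback
            # Expand the training set: keep good drafts, otherwise the human edit.
            training_set.append((x, draft if score >= min_score else edited))
        model = fine_tune(training_set)             # retrain on the expanded set
    return model, training_set


# Toy stand-ins: "fine-tuning" memorizes pairs; the "reviewer" rewrites generic drafts.
def toy_fine_tune(examples: list[Example]) -> Model:
    table = dict(examples)
    return lambda x: table.get(x, "generic draft")


def toy_review(x: str, draft: str) -> tuple[float, str]:
    return (5.0, draft) if draft != "generic draft" else (2.0, f"Edited description of {x}.")


model, data = hitl_rounds([("tent", "A light tent.")], [["stove"], ["stove", "lamp"]],
                          toy_fine_tune, toy_review)
print(len(data), model("lamp"))
```

Using a fresh batch of inputs per round keeps the training set from filling up with near-duplicates of the same examples.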

Last-Ditch Containment

A common challenge in implementing Human-in-the-Loop (HITL) technology is that some customers will still ask to speak with a live agent, driven by habit and expectations rather than by the technology or process. Last-ditch containment is a way to handle these requests.

This type of escalation can be significantly reduced if, instead of instantly escalating to a live agent, the Gen AI agent informs the customer of the wait time and offers a review by a human advisor in the meantime.

If the advisor can help the Gen AI agent resolve the issue quickly, the escalation can be avoided entirely. This delivers a faster resolution without disrupting the flow of the interaction.

Not every customer will accept this deflection, but it can make a big difference in reducing escalations and making HITL more effective.
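The deflection flow can be expressed as a small decision function. This is a hypothetical sketch: `Conversation`, `handle_live_agent_request`, and the two callbacks are illustrative names, and a real contact-center integration would supply them.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Conversation:
    issue: str
    wait_minutes: int  # current estimated wait for a live agent


def handle_live_agent_request(
    convo: Conversation,
    customer_accepts_review: Callable[[str], bool],     # shows the offer, returns the answer
    advisor_resolve: Callable[[str], Optional[str]],    # None if the advisor can't help quickly
) -> str:
    """Offer a human-advisor review before escalating to a live agent."""
    offer = (f"The current wait for a live agent is about {convo.wait_minutes} minutes. "
             "Meanwhile, I can ask a human advisor to review your case. Shall I?")
    if not customer_accepts_review(offer):
        return "escalate_to_live_agent"          # customer declined the deflection
    resolution = advisor_resolve(convo.issue)    # advisor guides the Gen AI agent
    if resolution is None:
        return "escalate_to_live_agent"          # advisor could not resolve it quickly
    return f"resolved: {resolution}"


convo = Conversation("refund not received", wait_minutes=12)
print(handle_live_agent_request(convo, lambda offer: True, lambda issue: "refund reissued"))
```

The customer can always decline, so the function never blocks access to a live agent; it only adds one chance to resolve the issue first.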

Installing AI in the Workplace

Installing AI in the workplace can be a straightforward process, especially with the right tools. You can use software that already has the HITL process built in, such as Levity's automation software.

Many companies don't have enough data to reach perfect performance from the start, but they can still get reasonable results with HITL, because every single human intervention feeds continuous training.
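A common way to put every intervention to work is confidence-based routing: predictions above a threshold are accepted automatically, the rest go to a human, and each correction is logged as a new training example. The sketch below is a generic illustration of that pattern, not Levity's implementation; `HitlRouter`, its threshold, and the `ask_human` callback are assumptions.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class HitlRouter:
    """Route low-confidence predictions to a human and log every correction."""
    threshold: float
    ask_human: Callable[[str, str], str]  # (item, model_label) -> correct label
    training_log: list[tuple[str, str]] = field(default_factory=list)

    def decide(self, item: str, label: str, confidence: float) -> str:
        if confidence >= self.threshold:
            return label                              # confident: accept automatically
        corrected = self.ask_human(item, label)       # uncertain: a human decides
        self.training_log.append((item, corrected))   # intervention becomes training data
        return corrected


router = HitlRouter(threshold=0.8, ask_human=lambda item, label: "invoice")
print(router.decide("doc1", "receipt", 0.95))  # accepted automatically
print(router.decide("doc2", "receipt", 0.40))  # sent to a human
print(router.training_log)
```

Lowering the threshold sends fewer items to humans; as retraining on the log improves the model, the threshold can be relaxed gradually.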

Using software that simplifies installation can save you a lot of time and effort, so you can focus on implementing HITL and seeing its benefits.

Frequently Asked Questions

What is the purpose of the end-to-end interactive human-in-the-loop dashboard?

The end-to-end interactive human-in-the-loop dashboard is designed to combine human expertise with AI capabilities, allowing for more accurate and efficient model training. By providing a platform for human feedback and collaboration, it enables the creation of more robust and reliable AI models.

What is the benefit of using a tool like Humanloop in terms of accessing AI models?

Humanloop enables more accurate AI models by allowing humans to correct and refine model outputs, reducing errors and improving overall performance.

Sources

- https://www.asapp.com/blog/ensuring-generative-ai-agent-success-with-human-in-the-loop

- https://www.ninetwothree.co/blog/human-in-the-loop-for-llm-accuracy

- https://humanloop.com/docs/v5/guides/evals/overview

- https://www.capellasolutions.com/blog/keeping-ai-in-check-human-guardrails-for-llm-workflows

- https://levity.ai/blog/human-in-the-loop

Featured Images: pexels.com