Generative AI (GenAI) models, a subset of artificial intelligence, face distinct challenges that hinder their development and deployment. One major challenge is the lack of explainability, which makes it difficult to understand how these models arrive at their outputs.

This is particularly concerning in high-stakes applications, such as healthcare and finance, where transparency is crucial. The opacity of GenAI models can erode trust among users.

The complexity of GenAI models is another significant challenge, making them difficult to train and optimize. They often require massive amounts of data and computational resources to function effectively.

The high energy consumption of GenAI models is also a pressing concern, with some models reportedly requiring as much energy as a small town.

Adversarial Attacks and Security

GANs are vulnerable to adversarial attacks, in which a crafted input tricks the discriminator into classifying a fake sample as real. This is possible because the discriminator is essentially a binary classifier that returns probabilities, and small, targeted changes to an input can shift those probabilities.

In a GAN, the generator network directly produces fake samples, and the discriminator network attempts to distinguish between samples drawn from the training data and those drawn from the generator. The discriminator's goal is to correctly identify fake samples, but an adversarial attack can manipulate this process.
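To make the idea concrete, here is a minimal sketch of how a small, targeted change to an input can flip a classifier's verdict. It assumes a toy linear discriminator with made-up weights and a sign-based perturbation; real discriminators are deep networks, and the names `score` and `perturb` are hypothetical.

```python
import math

def score(weights, bias, sample):
    """Probability that `sample` is real, per a toy linear discriminator."""
    logit = sum(w * x for w, x in zip(weights, sample)) + bias
    return 1.0 / (1.0 + math.exp(-logit))

def perturb(weights, sample, epsilon):
    """Shift each feature by epsilon in the direction that raises the score.

    For a linear model the gradient of the logit with respect to the input
    is the weight vector, so the sign of each weight gives the direction.
    """
    def sign(v):
        return (v > 0) - (v < 0)
    return [x + epsilon * sign(w) for w, x in zip(weights, sample)]

# Hypothetical weights and sample, chosen only for illustration.
weights = [1.5, -2.0, 0.5]
bias = -0.2
fake_sample = [0.1, 0.4, 0.2]

before = score(weights, bias, fake_sample)
after = score(weights, bias, perturb(weights, fake_sample, epsilon=0.3))
print(f"before: {before:.2f}, after: {after:.2f}")
```

In this toy setup the same sample moves from "probably fake" to "probably real" after a modest nudge to each feature, which is the basic mechanism an adversarial attack exploits.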

Security concerns extend beyond the model itself to the data it handles. Robust data anonymization techniques and encryption methods can protect sensitive information. This is especially important during the experimental phase of generative AI tools, as over 43% of executive leaders are currently testing them.
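As one illustration of the anonymization idea, the sketch below replaces an identifier with a keyed hash before a record is stored or used for training. This is pseudonymization rather than full anonymization, and the key, field names, and `pseudonymize` helper are assumptions made up for the example.

```python
import hashlib
import hmac

# Assumption: in practice the key would come from a secrets manager,
# never from source code.
SECRET_KEY = b"replace-with-a-managed-secret"

def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Return a stable, keyed token that stands in for `value`."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]

# Hypothetical record; only the identifier is replaced.
record = {"customer_id": "C-10482", "note": "asked about loan rates"}
safe_record = {**record, "customer_id": pseudonymize(record["customer_id"])}
print(safe_record)
```

Because the token is stable for a given key, records belonging to the same customer can still be joined without exposing the original identifier.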

Adversarial Networks

GANs, or Generative Adversarial Networks, are a type of machine learning framework that pits two neural networks against each other in a zero-sum game. They were invented by Ian Goodfellow and his colleagues at the University of Montreal in 2014.

The two neural networks in a GAN are the generator and discriminator. The generator creates fake input or samples from a random vector, while the discriminator takes a given sample and decides if it's fake or real.

The discriminator is a binary classifier that returns probabilities, with numbers closer to 0 indicating a higher likelihood of the prediction being fake, and numbers closer to 1 indicating a higher likelihood of the prediction being real.
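A short sketch can show what "returns probabilities" means in practice. It assumes the discriminator's final layer is a sigmoid and that it is scored with binary cross-entropy, which is the usual setup; the raw scores below are hypothetical values, not outputs of a trained model.

```python
import math

def sigmoid(logit: float) -> float:
    """Map a raw score to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-logit))

def discriminator_loss(p_real: float, p_fake: float) -> float:
    """Binary cross-entropy for one real and one generated sample.

    p_real: discriminator output on a training sample (target 1).
    p_fake: discriminator output on a generated sample (target 0).
    """
    return -(math.log(p_real) + math.log(1.0 - p_fake))

# Hypothetical raw scores, chosen only for illustration.
p_real = sigmoid(2.0)    # close to 1: judged likely real
p_fake = sigmoid(-2.0)   # close to 0: judged likely fake

confident = discriminator_loss(p_real, p_fake)
fooled = discriminator_loss(p_real, sigmoid(2.0))  # fake scored as real
print(f"loss when correct: {confident:.2f}, loss when fooled: {fooled:.2f}")
```

The loss is low when the discriminator scores real samples near 1 and fake samples near 0, and it rises sharply when a generated sample is scored as real.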

GANs are often implemented as CNNs, especially when working with images. The adversarial nature of GANs lies in a game theoretic scenario where the generator network must compete against the discriminator network.
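This competition is commonly written as the minimax objective from the original GAN formulation. The notation is introduced here for illustration and does not appear elsewhere in this article: $D(x)$ is the probability the discriminator assigns to sample $x$ being real, $G(z)$ is the sample the generator produces from a random vector $z$, $p_{\text{data}}$ is the distribution of the training data, and $p_z$ is the distribution the random vectors are drawn from.

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator tries to make $V$ large by scoring real samples near 1 and generated samples near 0, while the generator tries to make $V$ small by producing samples the discriminator scores as real.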

Here's a brief overview of the GAN architecture:

- Generator: creates fake input or samples from a random vector

- Discriminator: takes a given sample and decides if it's fake or real

A GAN is considered successful when the generator produces samples that can fool both the discriminator and humans. The game doesn't stop there, though: the discriminator is then updated to get better at spotting fakes, and the two networks keep training against each other.

Data Privacy and Security

Generative AI systems require massive datasets, which raises concerns about data privacy and security. Sensitive information could be exposed or misused during the AI training process.

Over 43% of executive leaders are currently testing generative AI tools, making data privacy a critical issue during this experimental phase.

Companies that build or fine-tune large language models must ensure that personally identifiable information (PII) isn't embedded in the language models. This is crucial for compliance with privacy laws.
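One simple pre-processing step is to scrub obvious identifiers from text before it reaches a training set. The sketch below uses two deliberately basic regular expressions as an illustration; the patterns and the `redact` helper are assumptions, and real PII detection needs much broader coverage than this.

```python
import re

# Deliberately simple patterns for illustration; real PII detection
# needs far broader coverage (names, addresses, IDs, other formats).
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
US_PHONE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")

def redact(text: str) -> str:
    """Replace matched emails and phone numbers with placeholder tags."""
    text = EMAIL.sub("[EMAIL]", text)
    return US_PHONE.sub("[PHONE]", text)

sample = "Contact Jane at jane.doe@example.com or 555-010-4477."
cleaned = redact(sample)
print(cleaned)  # Contact Jane at [EMAIL] or [PHONE].
```

Note that the name "Jane" survives the scrub, which shows why pattern matching alone is not enough for compliance.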

It can be more difficult for a consumer to locate and request removal of PII from generative AI models compared to traditional search engines.

Harmful Content Distribution

Generative AI systems can create content automatically based on text prompts by humans. They can generate enormous productivity improvements, but they can also be used for harm, either intentional or unintentional.

An AI-generated email sent on behalf of the company could inadvertently contain offensive language. Generative AI should therefore be used to augment, not replace, humans or processes, so that content meets the company's ethical expectations and supports its brand values.

Bias and Fairness

Bias and fairness are pressing concerns for generative AI models. Companies working on AI need diverse leaders and subject matter experts who can identify unconscious bias in data and models.

AI models can perpetuate and even amplify biases present in the training data, leading to unfair and discriminatory outcomes. This is a significant challenge that needs to be addressed.

Incorporating diverse datasets during training can help minimize biases. Regularly auditing AI models for bias and implementing fairness checks is also essential.
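A basic fairness check can be as simple as comparing outcome rates across groups. The sketch below computes the gap in positive-outcome rate between groups, one of several possible fairness measures; the audit data and the function names are hypothetical.

```python
from collections import defaultdict

def positive_rates(records):
    """Share of positive outcomes per group.

    records: iterable of (group, outcome) pairs, where outcome is 0 or 1.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        positives[group] += outcome
    return {g: positives[g] / totals[g] for g in totals}

def parity_gap(records):
    """Largest difference in positive rate between any two groups."""
    rates = positive_rates(records)
    return max(rates.values()) - min(rates.values())

# Hypothetical audit data: (group, did the model approve?)
audit = [("A", 1), ("A", 1), ("A", 0), ("A", 1),
         ("B", 1), ("B", 0), ("B", 0), ("B", 0)]
gap = parity_gap(audit)
print(positive_rates(audit), gap)
```

A large gap does not prove discrimination on its own, but it flags where a closer review of the data and the model is warranted.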

More than 70% of executives are hesitant to fully adopt generative AI due to concerns like bias. This highlights the importance of addressing bias and fairness in AI development.

Involving interdisciplinary teams, including ethicists, in the development process can help ensure ethical considerations are addressed. This approach is crucial for creating fair and unbiased AI models.

Data Quality and Provenance

Data quality and provenance are crucial aspects of generative AI systems. The accuracy of these systems depends on the corpus of data they use and its provenance.

Generative AI systems consume tremendous volumes of data, which can be inadequately governed, of questionable origin, or contain bias. Scott Zoldi, chief analytics officer at FICO, warns that a lot of the data used by these systems is truly garbage, presenting a basic accuracy problem.

To mitigate this issue, FICO treats synthetic data produced by generative AI as walled-off data for test and simulation purposes only, and does not allow it to inform the model going forward. This approach ensures that the data is contained and does not compromise the accuracy of the system.

Synthetic Data Generation

Acquiring enough high-quality data for machine learning models is a significant challenge. It's a time-consuming and costly process, often making it impossible to get the data needed for training.

NVIDIA is making breakthroughs in generative AI technologies, including a neural network trained on videos of cities to render urban environments.

Synthetically created data can be used to develop self-driving cars, which can use generated virtual world training datasets for tasks like pedestrian detection.

Data Provenance

Data provenance is a crucial aspect of generative AI systems. Generative AI systems consume tremendous volumes of data that could be inadequately governed, of questionable origin, used without consent, or contain bias.

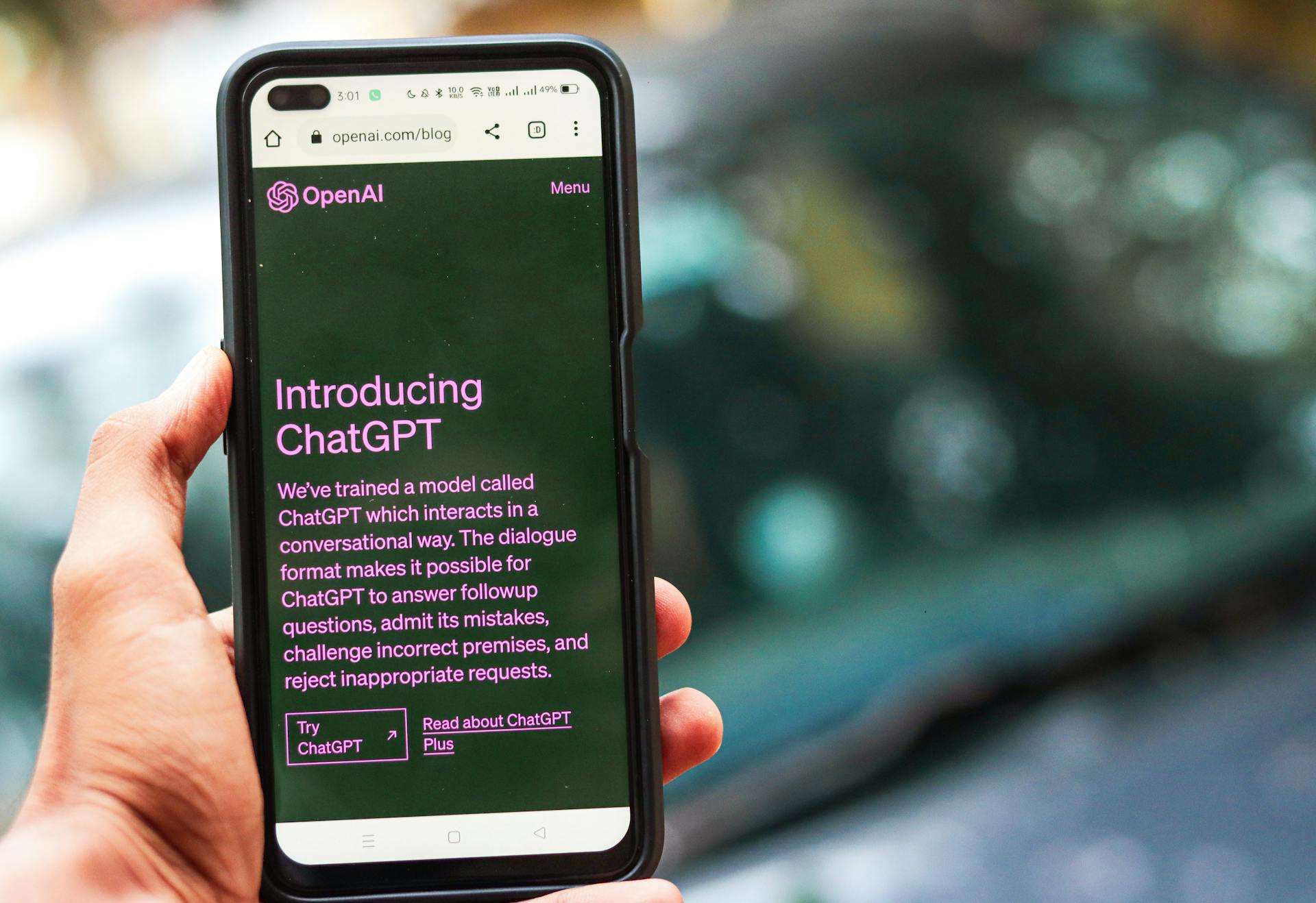

The accuracy of a generative AI system depends on the corpus of data it uses and its provenance. As Zoldi puts it, ChatGPT-4 is mining the internet for data, and a lot of it is truly garbage, which presents a basic accuracy problem on answers to questions to which we don't know the answer.

FICO, a company that has been using generative AI for more than a decade, treats generated data as synthetic data, labeling it as such so their team knows where the data is allowed to be used. This synthetic data is contained within the company's system and not allowed to be used in the wild.
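The labeling idea can be sketched in a few lines. This is an illustration of the general approach, not FICO's actual system: the `Record` type, its fields, and the two filter functions are assumptions made up for the example.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Record:
    text: str
    source: str        # where the record came from
    synthetic: bool    # True if produced by a generative model

def training_set(records):
    """Keep only non-synthetic records for model training."""
    return [r for r in records if not r.synthetic]

def simulation_set(records):
    """Synthetic records stay available for test and simulation."""
    return [r for r in records if r.synthetic]

# Hypothetical records, for illustration only.
records = [
    Record("payment received on time", source="ledger", synthetic=False),
    Record("simulated late payment", source="generator-v1", synthetic=True),
]
print(len(training_set(records)), len(simulation_set(records)))
```

Carrying the provenance flag on every record means the decision about where data may be used is enforced in code rather than left to memory.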

Data provenance issues can be amplified by social influencers or the AI systems themselves, adding to the potential for inaccuracy.

Limitations and Challenges

Generative AI models are still in their infancy, and as such, they have several limitations and challenges that need to be addressed.

One of the key limitations is that generative AI relies on pre-existing data to learn and identify patterns, which can lead to a limited range of outputs. If the training dataset is limited in scope, the generated outputs will be too.
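A toy example makes this dependence on training data visible. The sketch below uses a word-level bigram model as a stand-in for a generative model; it is far simpler than any modern system, and the corpus and function names are made up for illustration.

```python
import random
from collections import defaultdict

def train_bigrams(corpus: str):
    """Record which word follows which in the training text."""
    words = corpus.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, start: str, length: int, seed: int = 0):
    """Sample a word sequence by repeatedly picking a seen follower."""
    rng = random.Random(seed)
    output = [start]
    while len(output) < length and followers.get(output[-1]):
        output.append(rng.choice(followers[output[-1]]))
    return output

corpus = "the cat sat on the mat and the cat slept on the mat"
model = train_bigrams(corpus)
sample = generate(model, start="the", length=8)
print(" ".join(sample))
```

Whatever sequence comes out, every word in it was present in the training text; the model has no way to produce "dog" because it never saw one.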

Generative AI is also limited by the quality of its training data, which can affect the accuracy of the generated output. Poor quality or low quantity training data can lead to inaccurate or incomplete output.

Another limitation is that generative AI requires a lot of computational power to generate realistic images or text, which can be expensive and time-consuming. This can be a significant challenge for businesses that want to use generative AI for marketing purposes.

Generative AI has difficulty understanding context when presented with new information or scenarios outside of its training data. As a result, it may fail to draw sound conclusions or make reliable decisions in complex situations.

Here are some of the key limitations and challenges faced by generative AI models, particularly when deployed in customer service settings such as contact centers:

- Biased data can lead to AI outputs that are discriminatory or unfair.

- Incomplete data can hinder performance.

- Transparency is crucial for customers to know when they're interacting with AI.

- Privacy is paramount to protect sensitive customer information.

- Accountability is essential to define clear protocols for when the AI makes a mistake.

- Adversarial attacks can manipulate the input data fed to the AI, causing it to generate misleading outputs.

- Agent trust is required to ensure that agents understand the AI's reasoning.

- Effective collaboration between agents and AI is necessary for exceptional customer service.

- Technical integration with existing contact center infrastructure is required for smooth data flow and functionality.

- Agent training is essential to leverage the capabilities of the AI system.

- Cost considerations are crucial to ensure a positive return on investment.

Workforce and Governance

The workforce and governance challenges posed by generative AI are significant. Over 80% of organizations struggle to establish effective AI governance frameworks, according to a BCG report. This highlights the need for clear policies, procedures, and accountability mechanisms for AI use.

To address the lack of skilled workforce, businesses can invest in training and upskilling existing employees in AI and related fields. This includes developing generative AI skills such as prompt engineering.

A comprehensive governance framework should be developed to ensure compliance with relevant laws and standards. This includes implementing anonymization and encryption, adhering to data governance policies, and using diverse datasets to avoid bias in AI models.

Workforce Roles and Morale

AI is transforming the workforce, taking on tasks like writing, coding, and content creation, which can lead to worker displacement and replacement. The pace of this change has accelerated with generative AI technologies.

Businesses are investing in preparing employees for the new roles created by generative AI, such as prompt engineering. This is an essential step to minimize the negative impacts of AI adoption and prepare companies for growth.

The future of work is changing, and companies need to adapt to this shift. As Nick Kramer, vice president of applied solutions at consultancy SSA & Company, noted, the existential challenge for generative AI is its impact on organizational design, work, and individual workers.

By investing in employee development, companies can help workers transition to new roles and thrive in a changing work environment.

Skilled Workforce Shortage

A shortage of skilled professionals with expertise in AI and machine learning is a major obstacle to deploying generative AI effectively.

Investing in training and upskilling existing employees in AI and related fields is crucial. Collaborating with educational institutions to develop AI-specific programs and certifications is a viable solution.

The potential productivity gains from generative AI could boost global GDP by 7% over the next decade, according to a July 2023 report by Goldman Sachs. This highlights the importance of addressing the skilled workforce shortage.

Consider hiring specialized AI talent or partnering with AI-focused firms to overcome the shortage. This investment in human capital is necessary to unlock the full potential of generative AI.

Governance and Compliance

Governance and compliance are crucial aspects of implementing generative AI in the workplace. Over 80% of organizations struggle to establish effective AI governance frameworks, according to a BCG report.

Developing a comprehensive governance framework is key to ensuring AI use is ethical and compliant. This framework should include clear policies, procedures, and accountability mechanisms.

Regularly reviewing and updating governance practices is essential to adapt to new challenges. This ensures that AI applications remain compliant with relevant laws and standards.

Staying informed about regulatory developments is also crucial, so that governance practices can be updated as new requirements emerge.

By prioritizing governance and compliance, organizations can ensure that their generative AI applications are used responsibly and effectively.

Scalability and Cost

Scaling generative AI models can be a challenge, especially for smaller organizations.

Utilizing cloud-based AI platforms that offer scalable resources and services is a possible solution. These platforms can help organizations handle large volumes of data and complex tasks.

High computational costs are another challenge generative AI models face. Training and running these models require substantial computational power.

Optimizing AI models to be more efficient in terms of computational resources can help reduce costs. Leveraging cloud computing and AI-as-a-Service (AIaaS) solutions can also reduce the financial burden.
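A rough back-of-the-envelope calculation shows why efficiency choices matter. The sketch below estimates the memory needed just to hold a model's weights at different numeric precisions; the 7-billion-parameter figure is a hypothetical example, and the estimate ignores activations, optimizer state, and other overhead.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}

def weight_memory_gb(num_params: int, dtype: str) -> float:
    """Approximate memory for model weights alone, in gigabytes (1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

params = 7_000_000_000  # hypothetical 7-billion-parameter model
estimates = {dtype: weight_memory_gb(params, dtype) for dtype in BYTES_PER_PARAM}
print(estimates)  # {'float32': 28.0, 'float16': 14.0, 'int8': 7.0}
```

Halving the precision halves the memory for weights, which can decide whether a model fits on cheaper hardware; whether the accuracy trade-off is acceptable depends on the model and the task.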

Using more energy-efficient hardware and algorithms can also help manage costs better while scaling AI initiatives. This can be a cost-effective way to maintain competitive advantages.

Copyright and Liability

Generative AI tools are trained on massive databases drawn from multiple sources, including the internet. The origin of much of that data is unknown, which can create problems when the tools' outputs are put to commercial use.

Companies using these tools must validate their outputs to avoid reputational and financial risks. This is especially crucial for industries like banking and pharmaceuticals.

Reputational and financial risks can be massive if a company's product is based on another company's intellectual property without proper clearance.

Companies must look to validate outputs from the models until legal precedents provide clarity around IP and copyright challenges.

Frequently Asked Questions

What is one key challenge faced by GenAI models in terms of consistency?

One key challenge faced by GenAI models in terms of consistency is ensuring reliable performance across diverse environments. This is often hindered by unpredictability and bias in complex algorithms.

Sources

- https://www.altexsoft.com/blog/generative-ai/

- https://www.techtarget.com/searchenterpriseai/tip/Generative-AI-ethics-8-biggest-concerns

- https://lingarogroup.com/blog/the-limitations-of-generative-ai-according-to-generative-ai

- https://www.joinhgs.com/us/en/insights/hgs-digital-blogs/generative-ai-challenges

- https://www.guvi.in/blog/challenges-and-possibilities-of-generative-ai/
